
import tensorflow as tf

# Import tensorflow_hub.

import tensorflow_hub as hub

import matplotlib.pyplot as plt

print(tf.__version__)

# 2.7.0

!nvidia-smi

Sat Dec 25 03:17:11 2021

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 495.44 Driver Version: 460.32.03 CUDA Version: 11.2 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

| | | MIG M. |

|===============================+======================+======================|

| 0 Tesla K80 Off | 00000000:00:04.0 Off | 0 |

| N/A 71C P8 34W / 149W | 0MiB / 11441MiB | 0% Default |

| | | N/A |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: |

| GPU GI CI PID Type Process name GPU Memory |

| ID ID Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+

Download the image to use as input

!mkdir /content/data

!wget -O ./data/beatles01.jpg https://raw.githubusercontent.com/chulminkw/DLCV/master/data/image/beatles01.jpg

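Download the EfficientDet D0 inference model from TF Hub and run inference.

- Search TF Hub for the model you want, then download it with hub.load(); the model is loaded in a form that TensorFlow can use directly.

- The EfficientDet models downloaded here are implemented with the TensorFlow Object Detection API.

- The loaded model can run object detection directly on the original image. The input can be either a NumPy array or a tensor, but it must be uint8.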

module_handle = "https://tfhub.dev/tensorflow/efficientdet/d0/1"

detector_model = hub.load(module_handle)

WARNING:absl:Importing a function (__inference___call___32344) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D0_layer_call_and_return_conditional_losses_97451) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_bifpn_layer_call_and_return_conditional_losses_77595) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D0_layer_call_and_return_conditional_losses_103456) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D0_layer_call_and_return_conditional_losses_93843) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D0_layer_call_and_return_conditional_losses_107064) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_bifpn_layer_call_and_return_conditional_losses_75975) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

import cv2

import time

import numpy as np

img_array_np = cv2.imread('/content/data/beatles01.jpg')

img_array = img_array_np[np.newaxis, ...]

print(img_array_np.shape, img_array.shape)

start_time = time.time()

# Run inference by passing the image to detector_model.

result = detector_model(img_array)

print('elapsed time:', time.time()-start_time)

# (633, 806, 3) (1, 633, 806, 3)

# elapsed time: 13.12512469291687

img_tensor = tf.convert_to_tensor(img_array_np, dtype=tf.uint8)[tf.newaxis, ...]

start_time = time.time()

# Run inference by passing the image to detector_model.

result = detector_model(img_tensor)

print('elapsed time:', time.time()-start_time)

# elapsed time: 0.3127138614654541
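The first call above took about 13 seconds and the second about 0.3 seconds. A likely explanation (an assumption, not something this notebook verifies) is that the first invocation pays one-time costs such as function tracing and GPU initialization, rather than NumPy input being inherently slower. One way to check is to time several consecutive calls. The sketch below uses hypothetical names: `time_calls` is an illustrative helper and `fake_model` only stands in for `detector_model`.

```python
import time

def time_calls(fn, arg, repeats=3):
    """Call fn(arg) `repeats` times and return the elapsed seconds of each call."""
    elapsed = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn(arg)
        elapsed.append(time.perf_counter() - start)
    return elapsed

# Hypothetical stand-in for detector_model: slow on the first call only.
_state = {"warm": False}

def fake_model(x):
    if not _state["warm"]:
        time.sleep(0.05)  # simulated one-time setup cost
        _state["warm"] = True
    return x

timings = time_calls(fake_model, "image")
print(["%.3f" % t for t in timings])
```

With the real model, replacing `fake_model` with `detector_model` and `"image"` with `img_array` would show whether only the first call is slow.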

# Load the image as a tensor instead of a NumPy array.
def load_img(path):
    img = tf.io.read_file(path)
    # For PNG files, call decode_png() instead.
    img = tf.image.decode_jpeg(img, channels=3)
    print(img.shape, type(img))
    return img

import time

# Load the image as a tensor.
img = load_img('/content/data/beatles01.jpg')

# Convert the 3-D image tensor to a 4-D tensor by adding a batch dimension.
# EfficientDet D0 requires a uint8 input image.
converted_img = tf.image.convert_image_dtype(img, tf.uint8)[tf.newaxis, ...]

start_time = time.time()

# Run inference by passing the image to detector_model.
result = detector_model(converted_img)

print('elapsed time:', time.time()-start_time)

# (633, 806, 3) <class 'tensorflow.python.framework.ops.EagerTensor'>

# elapsed time: 0.3045518398284912

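Inspecting the values returned by inference

- The inference result is returned as a dictionary. The keys can differ between object detection models, and each value is a tensor.

- The returned bbox coordinates are normalized to the 0 to 1 range relative to the image size and come in ymin, xmin, ymax, xmax order, so take care when using them.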
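Because the coordinate order is easy to get wrong, here is a minimal sketch of the conversion from a normalized box to pixel coordinates. `to_pixel_box` is a hypothetical helper name; the sample box is the first entry of `detection_boxes` printed in this notebook, and 633 x 806 is the image height and width reported earlier.

```python
def to_pixel_box(box, height, width):
    """Convert a normalized (ymin, xmin, ymax, xmax) box to integer (left, top, right, bottom)."""
    ymin, xmin, ymax, xmax = box
    # x values scale with the image width, y values with the image height.
    return (int(xmin * width), int(ymin * height), int(xmax * width), int(ymax * height))

# First detection box from this notebook, on the 633 x 806 (height x width) image.
box = (0.4117904, 0.063390382, 0.88111836, 0.26741692)
pixel_box = to_pixel_box(box, height=633, width=806)
print(pixel_box)  # (51, 260, 215, 557)
```

The returned tuple is in the (left, top, right, bottom) order that drawing functions such as cv2.rectangle() expect as corner points.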

# Print the inference result, which is a dictionary. The keys differ from model to model, so print them to check.

print(result)

{'detection_classes': <tf.Tensor: shape=(1, 100), dtype=float32, numpy=

array([[ 1., 1., 1., 1., 3., 3., 8., 41., 3., 3., 3., 3., 3.,

41., 8., 3., 3., 3., 3., 31., 31., 3., 3., 3., 3., 3.,

3., 3., 31., 3., 3., 3., 3., 1., 3., 10., 3., 3., 3.,

31., 15., 32., 10., 3., 10., 1., 10., 3., 3., 3., 31., 1.,

27., 10., 33., 8., 3., 1., 31., 10., 3., 8., 3., 1., 3.,

1., 3., 3., 3., 3., 3., 32., 3., 1., 1., 31., 3., 31.,

1., 3., 31., 10., 8., 3., 3., 32., 1., 1., 3., 10., 41.,

31., 1., 31., 10., 3., 3., 32., 31., 3.]], dtype=float32)>, 'raw_detection_scores': <tf.Tensor: shape=(1, 49104, 90), dtype=float32, numpy=

array([[[1.4354929e-01, 3.5354502e-02, 1.3373634e-01, ...,

3.8939363e-03, 4.5267404e-03, 7.4508404e-03],

[5.8584111e-03, 1.5053916e-03, 5.7255975e-03, ...,

2.8605445e-04, 7.9238508e-04, 5.8379129e-04],

[3.8943693e-04, 1.2312026e-04, 2.9878854e-04, ...,

1.8558223e-05, 2.8921681e-04, 6.1487677e-05],

...,

[1.9985980e-03, 9.0183894e-04, 1.1327897e-03, ...,

6.9412554e-04, 9.2282181e-04, 1.1239841e-03],

[2.4827726e-03, 1.0236464e-03, 1.1463074e-03, ...,

8.3448330e-04, 9.3340850e-04, 9.7718462e-04],

[3.6730708e-03, 1.0787870e-03, 1.3733003e-03, ...,

1.0809251e-03, 9.1082731e-04, 1.2321979e-03]]], dtype=float32)>, 'raw_detection_boxes': <tf.Tensor: shape=(1, 49104, 4), dtype=float32, numpy=

array([[[ 2.9779205e-01, 9.7853315e-01, 4.0974966e-01, 9.7853315e-01],

[ 4.0937748e-02, 7.5187005e-02, 6.7204766e-02, 7.7955268e-02],

[-1.1543997e-02, 1.3033487e-04, 7.8647465e-02, 5.4135054e-02],

...,

[ 3.0034673e-01, 5.5941677e-01, 1.4586637e+00, 1.1626569e+00],

[ 1.6176426e-01, 4.5902258e-01, 1.6337620e+00, 1.2052057e+00],

[-1.5788192e-01, 2.7244717e-01, 1.8246810e+00, 1.3288105e+00]]],

dtype=float32)>, 'detection_anchor_indices': <tf.Tensor: shape=(1, 100), dtype=float32, numpy=

array([[47257., 47320., 47347., 47284., 40095., 12485., 11912., 23426.,

11178., 10677., 11280., 11871., 10632., 22826., 12800., 10686.,

10623., 10056., 10047., 22085., 18745., 9498., 10074., 10065.,

11271., 11880., 9489., 10101., 41415., 11262., 10614., 10668.,

10083., 13020., 10092., 9462., 10038., 9480., 10641., 18304.,

42941., 16369., 9498., 10695., 9453., 10569., 9489., 9471.,

9462., 12875., 41128., 11166., 42426., 9471., 42426., 11178.,

11145., 9462., 18764., 9480., 10569., 11295., 10605., 47297.,

10110., 11157., 10704., 10119., 10683., 10713., 10659., 15783.,

12888., 13029., 10011., 41417., 10128., 22060., 9453., 9507.,

41352., 8877., 11880., 11856., 10650., 17529., 11958., 10047.,

12330., 7824., 42823., 41697., 11967., 17016., 8886., 12327.,

9453., 17520., 22051., 10578.]], dtype=float32)>, 'detection_scores': <tf.Tensor: shape=(1, 100), dtype=float32, numpy=

array([[0.94711405, 0.935974 , 0.930035 , 0.89913994, 0.625541 ,

0.48422325, 0.3482024 , 0.31519073, 0.31252164, 0.3096477 ,

0.2892261 , 0.26785725, 0.26200417, 0.2544667 , 0.24920423,

0.24709295, 0.22555462, 0.22262326, 0.20144816, 0.1989274 ,

0.19818683, 0.19184774, 0.18933022, 0.18806441, 0.17253922,

0.16980891, 0.16840717, 0.16792467, 0.16733843, 0.16649997,

0.16592245, 0.16406913, 0.15594032, 0.14497948, 0.144825 ,

0.14451697, 0.14210103, 0.13976108, 0.13904284, 0.1389742 ,

0.13756527, 0.13691278, 0.13502952, 0.13211057, 0.13042402,

0.12915237, 0.12603028, 0.124609 , 0.12447888, 0.12250288,

0.12192409, 0.12113374, 0.12100718, 0.11963245, 0.11917206,

0.11773309, 0.11646152, 0.11581548, 0.11349872, 0.11340918,

0.11095154, 0.1098927 , 0.10858452, 0.10815845, 0.10694698,

0.10670073, 0.10592487, 0.1055002 , 0.10536031, 0.1041959 ,

0.10418969, 0.10327245, 0.10291874, 0.10186002, 0.1013522 ,

0.10121182, 0.10055887, 0.09975425, 0.09954076, 0.09945919,

0.09853141, 0.09829449, 0.09807272, 0.09666188, 0.09628956,

0.09607641, 0.09603674, 0.09379527, 0.09334304, 0.09319282,

0.09244616, 0.09126819, 0.09113833, 0.09095921, 0.09013715,

0.08883683, 0.08855603, 0.08836359, 0.0877557 , 0.08759023]],

dtype=float32)>, 'detection_multiclass_scores': <tf.Tensor: shape=(1, 100, 90), dtype=float32, numpy=

array([[[9.4711405e-01, 8.6817035e-04, 3.0984138e-03, ...,

4.7520236e-03, 5.2246830e-04, 4.5701285e-04],

[9.3597400e-01, 6.4510002e-04, 2.2451645e-03, ...,

3.9743381e-03, 8.1474200e-04, 5.4064585e-04],

[9.3003500e-01, 1.2423380e-03, 4.1281143e-03, ...,

4.1296966e-03, 1.3249756e-03, 8.3464832e-04],

...,

[1.8119732e-02, 5.0535421e-03, 1.0724876e-03, ...,

1.3032763e-02, 8.9723011e-03, 1.0205678e-02],

[1.1448574e-02, 1.6255280e-02, 1.1670285e-02, ...,

1.7721199e-03, 5.5407584e-03, 2.2403533e-03],

[8.4233090e-02, 1.9779259e-02, 8.7590225e-02, ...,

9.6069882e-04, 1.3168838e-03, 1.2544082e-03]]], dtype=float32)>, 'num_detections': <tf.Tensor: shape=(1,), dtype=float32, numpy=array([100.], dtype=float32)>, 'detection_boxes': <tf.Tensor: shape=(1, 100, 4), dtype=float32, numpy=

array([[[4.1179040e-01, 6.3390382e-02, 8.8111836e-01, 2.6741692e-01],

[4.3304449e-01, 4.7706339e-01, 8.9250046e-01, 6.8628871e-01],

[4.1960636e-01, 6.8270773e-01, 8.9688581e-01, 8.9498097e-01],

[4.1164526e-01, 2.6410934e-01, 8.6583102e-01, 4.6428421e-01],

[3.8654572e-01, 1.7934969e-01, 5.4316032e-01, 3.2028845e-01],

[3.6050096e-01, 6.2638736e-01, 4.6446508e-01, 7.1950281e-01],

[3.5996163e-01, 6.2458235e-01, 4.6350214e-01, 7.2000319e-01],

[7.1983784e-01, 6.2759423e-01, 8.6870378e-01, 7.0305586e-01],

[3.6646506e-01, 3.8801342e-01, 4.2244112e-01, 4.3741155e-01],

[3.5229647e-01, 5.4654634e-01, 3.8833630e-01, 5.7694817e-01],

[3.6912179e-01, 5.8222091e-01, 4.1025555e-01, 6.2288415e-01],

[3.7102419e-01, 5.9425962e-01, 4.3720052e-01, 6.3780034e-01],

[3.5444006e-01, 4.7450614e-01, 3.8303307e-01, 4.9580967e-01],

[7.1531910e-01, 5.7743710e-01, 8.7339032e-01, 6.8964785e-01],

[3.8982463e-01, 1.7769308e-01, 5.3870612e-01, 3.1275189e-01],

[3.5579172e-01, 5.5832678e-01, 3.9027628e-01, 5.8628565e-01],

[3.5591349e-01, 4.6058959e-01, 3.8360608e-01, 4.8057848e-01],

[3.4581965e-01, 4.7958860e-01, 3.6583403e-01, 4.9762627e-01],

[3.4433401e-01, 4.6549153e-01, 3.6546969e-01, 4.8306775e-01],

[6.8952668e-01, 2.8691068e-01, 8.4429270e-01, 3.8039652e-01],

[5.6328285e-01, 5.2353203e-01, 7.0950598e-01, 5.6712413e-01],

[3.2798409e-01, 5.0720298e-01, 3.4583938e-01, 5.2633405e-01],

[3.4163418e-01, 5.0696808e-01, 3.6424884e-01, 5.2613205e-01],

[3.4342399e-01, 4.9362123e-01, 3.6436176e-01, 5.1271427e-01],

[3.6820206e-01, 5.7783151e-01, 4.0318635e-01, 6.0513425e-01],

[3.7084836e-01, 6.0645926e-01, 4.4600427e-01, 6.5543246e-01],

[3.3108306e-01, 4.9567217e-01, 3.4666312e-01, 5.1192003e-01],

[3.4755635e-01, 5.5521488e-01, 3.6801580e-01, 5.7478154e-01],

[5.0978678e-01, 7.5387210e-01, 6.8267655e-01, 8.3977443e-01],

[3.6682692e-01, 5.6671417e-01, 3.9800602e-01, 5.9056568e-01],

[3.5695317e-01, 4.3974501e-01, 3.8740516e-01, 4.6231419e-01],

[3.5100028e-01, 5.3704566e-01, 3.8353223e-01, 5.6229430e-01],

[3.4340435e-01, 5.2385479e-01, 3.6579999e-01, 5.4249030e-01],

[4.2547816e-01, 5.8302408e-01, 5.0048357e-01, 6.2211710e-01],

[3.4608695e-01, 5.3925014e-01, 3.6758476e-01, 5.5907607e-01],

[3.2927078e-01, 4.5257777e-01, 3.4853238e-01, 4.6549708e-01],

[3.4211871e-01, 4.5176423e-01, 3.6421466e-01, 4.6783006e-01],

[3.3232009e-01, 4.8148558e-01, 3.4683454e-01, 4.9636772e-01],

[3.5374671e-01, 4.8738575e-01, 3.8187450e-01, 5.0869471e-01],

[5.4104501e-01, 7.4378175e-01, 6.8199605e-01, 8.0373544e-01],

[7.4930298e-01, 6.7912787e-04, 9.9816215e-01, 2.2687393e-01],

[4.8811194e-01, 3.8788208e-01, 6.5886742e-01, 4.4012472e-01],

[3.2798409e-01, 5.0720298e-01, 3.4583938e-01, 5.2633405e-01],

[3.6192855e-01, 5.7177728e-01, 3.8971961e-01, 5.9754187e-01],

[3.3015379e-01, 4.4123974e-01, 3.4820625e-01, 4.5039704e-01],

[3.5280249e-01, 3.7495017e-01, 3.9356515e-01, 3.8802660e-01],

[3.3108306e-01, 4.9567217e-01, 3.4666312e-01, 5.1192003e-01],

[3.3070818e-01, 4.6405959e-01, 3.4772059e-01, 4.7952139e-01],

[3.2927078e-01, 4.5257777e-01, 3.4853238e-01, 4.6549708e-01],

[3.9903688e-01, 2.8748375e-01, 4.9881092e-01, 4.1517448e-01],

[4.5144099e-01, 7.3607433e-01, 7.1501023e-01, 8.5484862e-01],

[3.5879132e-01, 3.7910637e-01, 4.1088080e-01, 3.9561763e-01],

[6.9514066e-01, 2.8370458e-01, 8.4553832e-01, 3.8235956e-01],

[3.3070818e-01, 4.6405959e-01, 3.4772059e-01, 4.7952139e-01],

[6.9514066e-01, 2.8370458e-01, 8.4553832e-01, 3.8235956e-01],

[3.6646506e-01, 3.8801342e-01, 4.2244112e-01, 4.3741155e-01],

[3.7016463e-01, 3.7109971e-01, 4.0168965e-01, 3.8679004e-01],

[3.2927078e-01, 4.5257777e-01, 3.4853238e-01, 4.6549708e-01],

[5.4758275e-01, 5.2163035e-01, 7.1873194e-01, 5.9703213e-01],

[3.3232009e-01, 4.8148558e-01, 3.4683454e-01, 4.9636772e-01],

[3.5280249e-01, 3.7495017e-01, 3.9356515e-01, 3.8802660e-01],

[3.6760172e-01, 5.8778751e-01, 4.2486581e-01, 6.3663971e-01],

[3.6506388e-01, 4.2663202e-01, 3.9124954e-01, 4.4209841e-01],

[4.1215658e-01, 3.4202617e-01, 8.8318264e-01, 6.1285341e-01],

[3.4818730e-01, 5.6928444e-01, 3.6841545e-01, 5.8634388e-01],

[3.5158402e-01, 3.6752591e-01, 3.9787045e-01, 3.8494465e-01],

[3.6561641e-01, 5.8981210e-01, 3.8907713e-01, 6.1613721e-01],

[3.4828454e-01, 5.8140194e-01, 3.6721477e-01, 5.9566200e-01],

[3.4462604e-01, 5.3514403e-01, 3.9644474e-01, 5.8370894e-01],

[3.6365288e-01, 5.9567219e-01, 3.9053750e-01, 6.3109928e-01],

[3.5137120e-01, 5.2337110e-01, 3.7918985e-01, 5.4226983e-01],

[4.9033237e-01, 3.8423172e-01, 6.1302155e-01, 4.1986457e-01],

[4.1236705e-01, 3.5832933e-01, 4.8268488e-01, 4.1150209e-01],

[4.1843241e-01, 6.0260159e-01, 4.9375936e-01, 6.3197130e-01],

[3.4338030e-01, 4.0502068e-01, 3.6552405e-01, 4.1651794e-01],

[4.2186460e-01, 7.0060635e-01, 8.9403832e-01, 8.9042270e-01],

[3.4787107e-01, 5.9927994e-01, 3.6625677e-01, 6.1748618e-01],

[7.1091282e-01, 2.7190217e-01, 8.2108068e-01, 3.5610101e-01],

[3.3015379e-01, 4.4123974e-01, 3.4820625e-01, 4.5039704e-01],

[3.2861453e-01, 5.2156895e-01, 3.4669027e-01, 5.3986579e-01],

[4.9491951e-01, 5.3556001e-01, 7.1317983e-01, 6.2238050e-01],

[3.1718564e-01, 4.4188350e-01, 3.3180881e-01, 4.5035261e-01],

[3.7084836e-01, 6.0645926e-01, 4.4600427e-01, 6.5543246e-01],

[3.8386708e-01, 5.8912140e-01, 4.2566502e-01, 6.2365121e-01],

[3.5371971e-01, 5.0196201e-01, 3.8005614e-01, 5.2139860e-01],

[5.9429705e-01, 4.1528758e-01, 6.5035301e-01, 4.4080022e-01],

[3.6265263e-01, 7.5203842e-01, 4.6041501e-01, 7.7500635e-01],

[3.4433401e-01, 4.6549153e-01, 3.6546969e-01, 4.8306775e-01],

[3.8074300e-01, 3.8487837e-01, 4.5196366e-01, 4.3506762e-01],

[2.5742817e-01, 6.0831654e-01, 2.8681454e-01, 6.2162197e-01],

[7.1172494e-01, 6.1941981e-01, 8.8754946e-01, 7.4794185e-01],

[5.6854314e-01, 7.4925357e-01, 7.2917712e-01, 8.5470790e-01],

[3.6275440e-01, 7.5717753e-01, 4.6149722e-01, 7.8934973e-01],

[5.5307239e-01, 5.2523065e-01, 6.6746283e-01, 5.5445266e-01],

[3.1785089e-01, 4.5512110e-01, 3.3233309e-01, 4.6439195e-01],

[4.1034609e-01, 3.8362762e-01, 4.7151357e-01, 4.1939363e-01],

[3.3015379e-01, 4.4123974e-01, 3.4820625e-01, 4.5039704e-01],

[5.6086457e-01, 4.0125006e-01, 6.4354509e-01, 4.3456870e-01],

[7.1788090e-01, 2.4195449e-01, 8.1452870e-01, 3.1889099e-01],

[3.5509771e-01, 3.8778654e-01, 3.9057735e-01, 3.9996943e-01]]],

dtype=float32)>}

print(result.keys())

# By default this model returns 100 detections (it always returns 100 entries, so each tensor has 100 elements and num_detections is 100).

print(result['detection_boxes'].shape, result['detection_classes'].shape, result['detection_scores'].shape, result['num_detections'])

# dict_keys(['detection_classes', 'raw_detection_scores', 'raw_detection_boxes', 'detection_anchor_indices', 'detection_scores', 'detection_multiclass_scores', 'num_detections', 'detection_boxes'])

# (1, 100, 4) (1, 100) (1, 100) tf.Tensor([100.], shape=(1,), dtype=float32)
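Printing the whole dictionary produces hundreds of lines, so a shape summary is often easier to read. The sketch below is one possible way to do that. `summarize` is a hypothetical helper, and `fake_result` is a stand-in built from `SimpleNamespace` objects so the example runs without TensorFlow; the real values are tensors that also expose `.shape`.

```python
from types import SimpleNamespace

def summarize(result):
    """Return {key: shape tuple} for a dict whose values expose a .shape attribute."""
    return {key: tuple(getattr(value, "shape", ())) for key, value in result.items()}

# Hypothetical stand-in for the model output, using the shapes printed above.
fake_result = {
    "detection_boxes": SimpleNamespace(shape=(1, 100, 4)),
    "detection_scores": SimpleNamespace(shape=(1, 100)),
    "num_detections": SimpleNamespace(shape=(1,)),
}
summary = summarize(fake_result)
for key in sorted(summary):
    print(key, summary[key])
```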

# Detected objects fill the arrays in descending order of detection score.

print('#### detection_classes #####')

print(result['detection_classes'])

print('#### detection_scores #####')

print(result['detection_scores'])

#### detection_classes #####

tf.Tensor(

[[ 1. 1. 1. 1. 3. 3. 8. 41. 3. 3. 3. 3. 3. 41. 8. 3. 3. 3.

3. 31. 31. 3. 3. 3. 3. 3. 3. 3. 31. 3. 3. 3. 3. 1. 3. 10.

3. 3. 3. 31. 15. 32. 10. 3. 10. 1. 10. 3. 3. 3. 31. 1. 27. 10.

33. 8. 3. 1. 31. 10. 3. 8. 3. 1. 3. 1. 3. 3. 3. 3. 3. 32.

3. 1. 1. 31. 3. 31. 1. 3. 31. 10. 8. 3. 3. 32. 1. 1. 3. 10.

41. 31. 1. 31. 10. 3. 3. 32. 31. 3.]], shape=(1, 100), dtype=float32)

#### detection_scores #####

tf.Tensor(

[[0.94711405 0.935974 0.930035 0.89913994 0.625541 0.48422325

0.3482024 0.31519073 0.31252164 0.3096477 0.2892261 0.26785725

0.26200417 0.2544667 0.24920423 0.24709295 0.22555462 0.22262326

0.20144816 0.1989274 0.19818683 0.19184774 0.18933022 0.18806441

0.17253922 0.16980891 0.16840717 0.16792467 0.16733843 0.16649997

0.16592245 0.16406913 0.15594032 0.14497948 0.144825 0.14451697

0.14210103 0.13976108 0.13904284 0.1389742 0.13756527 0.13691278

0.13502952 0.13211057 0.13042402 0.12915237 0.12603028 0.124609

0.12447888 0.12250288 0.12192409 0.12113374 0.12100718 0.11963245

0.11917206 0.11773309 0.11646152 0.11581548 0.11349872 0.11340918

0.11095154 0.1098927 0.10858452 0.10815845 0.10694698 0.10670073

0.10592487 0.1055002 0.10536031 0.1041959 0.10418969 0.10327245

0.10291874 0.10186002 0.1013522 0.10121182 0.10055887 0.09975425

0.09954076 0.09945919 0.09853141 0.09829449 0.09807272 0.09666188

0.09628956 0.09607641 0.09603674 0.09379527 0.09334304 0.09319282

0.09244616 0.09126819 0.09113833 0.09095921 0.09013715 0.08883683

0.08855603 0.08836359 0.0877557 0.08759023]], shape=(1, 100), dtype=float32)
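Since the scores are sorted in descending order, filtering by a threshold can stop at the first score that falls below it. The following is a small sketch of that idea; `keep_above_threshold` is a hypothetical helper, and the sample values are the first 11 scores printed above.

```python
def keep_above_threshold(scores, threshold):
    """Return indices of scores >= threshold, assuming scores are sorted in descending order."""
    kept = []
    for i, score in enumerate(scores):
        if score < threshold:
            break  # every later score is lower, so stop early
        kept.append(i)
    return kept

# The first 11 detection scores from the output above.
scores = [0.94711405, 0.935974, 0.930035, 0.89913994, 0.625541, 0.48422325,
          0.3482024, 0.31519073, 0.31252164, 0.3096477, 0.2892261]
kept = keep_above_threshold(scores, threshold=0.3)
print(len(kept))  # 10
```

The early `break` is only valid because of the ordering; for unsorted scores every element would have to be checked.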

# Bounding box coordinates are returned in ymin, xmin, ymax, xmax order. Note that y comes before x.

# The coordinates are normalized to the 0 to 1 range by the original image's width and height.

print('#### detection_boxes #####')

print(result['detection_boxes'])

#### detection_boxes #####

tf.Tensor(

[[[4.1179040e-01 6.3390382e-02 8.8111836e-01 2.6741692e-01]

[4.3304449e-01 4.7706339e-01 8.9250046e-01 6.8628871e-01]

[4.1960636e-01 6.8270773e-01 8.9688581e-01 8.9498097e-01]

[4.1164526e-01 2.6410934e-01 8.6583102e-01 4.6428421e-01]

[3.8654572e-01 1.7934969e-01 5.4316032e-01 3.2028845e-01]

[3.6050096e-01 6.2638736e-01 4.6446508e-01 7.1950281e-01]

[3.5996163e-01 6.2458235e-01 4.6350214e-01 7.2000319e-01]

[7.1983784e-01 6.2759423e-01 8.6870378e-01 7.0305586e-01]

[3.6646506e-01 3.8801342e-01 4.2244112e-01 4.3741155e-01]

[3.5229647e-01 5.4654634e-01 3.8833630e-01 5.7694817e-01]

[3.6912179e-01 5.8222091e-01 4.1025555e-01 6.2288415e-01]

[3.7102419e-01 5.9425962e-01 4.3720052e-01 6.3780034e-01]

[3.5444006e-01 4.7450614e-01 3.8303307e-01 4.9580967e-01]

[7.1531910e-01 5.7743710e-01 8.7339032e-01 6.8964785e-01]

[3.8982463e-01 1.7769308e-01 5.3870612e-01 3.1275189e-01]

[3.5579172e-01 5.5832678e-01 3.9027628e-01 5.8628565e-01]

[3.5591349e-01 4.6058959e-01 3.8360608e-01 4.8057848e-01]

[3.4581965e-01 4.7958860e-01 3.6583403e-01 4.9762627e-01]

[3.4433401e-01 4.6549153e-01 3.6546969e-01 4.8306775e-01]

[6.8952668e-01 2.8691068e-01 8.4429270e-01 3.8039652e-01]

[5.6328285e-01 5.2353203e-01 7.0950598e-01 5.6712413e-01]

[3.2798409e-01 5.0720298e-01 3.4583938e-01 5.2633405e-01]

[3.4163418e-01 5.0696808e-01 3.6424884e-01 5.2613205e-01]

[3.4342399e-01 4.9362123e-01 3.6436176e-01 5.1271427e-01]

[3.6820206e-01 5.7783151e-01 4.0318635e-01 6.0513425e-01]

[3.7084836e-01 6.0645926e-01 4.4600427e-01 6.5543246e-01]

[3.3108306e-01 4.9567217e-01 3.4666312e-01 5.1192003e-01]

[3.4755635e-01 5.5521488e-01 3.6801580e-01 5.7478154e-01]

[5.0978678e-01 7.5387210e-01 6.8267655e-01 8.3977443e-01]

[3.6682692e-01 5.6671417e-01 3.9800602e-01 5.9056568e-01]

[3.5695317e-01 4.3974501e-01 3.8740516e-01 4.6231419e-01]

[3.5100028e-01 5.3704566e-01 3.8353223e-01 5.6229430e-01]

[3.4340435e-01 5.2385479e-01 3.6579999e-01 5.4249030e-01]

[4.2547816e-01 5.8302408e-01 5.0048357e-01 6.2211710e-01]

[3.4608695e-01 5.3925014e-01 3.6758476e-01 5.5907607e-01]

[3.2927078e-01 4.5257777e-01 3.4853238e-01 4.6549708e-01]

[3.4211871e-01 4.5176423e-01 3.6421466e-01 4.6783006e-01]

[3.3232009e-01 4.8148558e-01 3.4683454e-01 4.9636772e-01]

[3.5374671e-01 4.8738575e-01 3.8187450e-01 5.0869471e-01]

[5.4104501e-01 7.4378175e-01 6.8199605e-01 8.0373544e-01]

[7.4930298e-01 6.7912787e-04 9.9816215e-01 2.2687393e-01]

[4.8811194e-01 3.8788208e-01 6.5886742e-01 4.4012472e-01]

[3.2798409e-01 5.0720298e-01 3.4583938e-01 5.2633405e-01]

[3.6192855e-01 5.7177728e-01 3.8971961e-01 5.9754187e-01]

[3.3015379e-01 4.4123974e-01 3.4820625e-01 4.5039704e-01]

[3.5280249e-01 3.7495017e-01 3.9356515e-01 3.8802660e-01]

[3.3108306e-01 4.9567217e-01 3.4666312e-01 5.1192003e-01]

[3.3070818e-01 4.6405959e-01 3.4772059e-01 4.7952139e-01]

[3.2927078e-01 4.5257777e-01 3.4853238e-01 4.6549708e-01]

[3.9903688e-01 2.8748375e-01 4.9881092e-01 4.1517448e-01]

[4.5144099e-01 7.3607433e-01 7.1501023e-01 8.5484862e-01]

[3.5879132e-01 3.7910637e-01 4.1088080e-01 3.9561763e-01]

[6.9514066e-01 2.8370458e-01 8.4553832e-01 3.8235956e-01]

[3.3070818e-01 4.6405959e-01 3.4772059e-01 4.7952139e-01]

[6.9514066e-01 2.8370458e-01 8.4553832e-01 3.8235956e-01]

[3.6646506e-01 3.8801342e-01 4.2244112e-01 4.3741155e-01]

[3.7016463e-01 3.7109971e-01 4.0168965e-01 3.8679004e-01]

[3.2927078e-01 4.5257777e-01 3.4853238e-01 4.6549708e-01]

[5.4758275e-01 5.2163035e-01 7.1873194e-01 5.9703213e-01]

[3.3232009e-01 4.8148558e-01 3.4683454e-01 4.9636772e-01]

[3.5280249e-01 3.7495017e-01 3.9356515e-01 3.8802660e-01]

[3.6760172e-01 5.8778751e-01 4.2486581e-01 6.3663971e-01]

[3.6506388e-01 4.2663202e-01 3.9124954e-01 4.4209841e-01]

[4.1215658e-01 3.4202617e-01 8.8318264e-01 6.1285341e-01]

[3.4818730e-01 5.6928444e-01 3.6841545e-01 5.8634388e-01]

[3.5158402e-01 3.6752591e-01 3.9787045e-01 3.8494465e-01]

[3.6561641e-01 5.8981210e-01 3.8907713e-01 6.1613721e-01]

[3.4828454e-01 5.8140194e-01 3.6721477e-01 5.9566200e-01]

[3.4462604e-01 5.3514403e-01 3.9644474e-01 5.8370894e-01]

[3.6365288e-01 5.9567219e-01 3.9053750e-01 6.3109928e-01]

[3.5137120e-01 5.2337110e-01 3.7918985e-01 5.4226983e-01]

[4.9033237e-01 3.8423172e-01 6.1302155e-01 4.1986457e-01]

[4.1236705e-01 3.5832933e-01 4.8268488e-01 4.1150209e-01]

[4.1843241e-01 6.0260159e-01 4.9375936e-01 6.3197130e-01]

[3.4338030e-01 4.0502068e-01 3.6552405e-01 4.1651794e-01]

[4.2186460e-01 7.0060635e-01 8.9403832e-01 8.9042270e-01]

[3.4787107e-01 5.9927994e-01 3.6625677e-01 6.1748618e-01]

[7.1091282e-01 2.7190217e-01 8.2108068e-01 3.5610101e-01]

[3.3015379e-01 4.4123974e-01 3.4820625e-01 4.5039704e-01]

[3.2861453e-01 5.2156895e-01 3.4669027e-01 5.3986579e-01]

[4.9491951e-01 5.3556001e-01 7.1317983e-01 6.2238050e-01]

[3.1718564e-01 4.4188350e-01 3.3180881e-01 4.5035261e-01]

[3.7084836e-01 6.0645926e-01 4.4600427e-01 6.5543246e-01]

[3.8386708e-01 5.8912140e-01 4.2566502e-01 6.2365121e-01]

[3.5371971e-01 5.0196201e-01 3.8005614e-01 5.2139860e-01]

[5.9429705e-01 4.1528758e-01 6.5035301e-01 4.4080022e-01]

[3.6265263e-01 7.5203842e-01 4.6041501e-01 7.7500635e-01]

[3.4433401e-01 4.6549153e-01 3.6546969e-01 4.8306775e-01]

[3.8074300e-01 3.8487837e-01 4.5196366e-01 4.3506762e-01]

[2.5742817e-01 6.0831654e-01 2.8681454e-01 6.2162197e-01]

[7.1172494e-01 6.1941981e-01 8.8754946e-01 7.4794185e-01]

[5.6854314e-01 7.4925357e-01 7.2917712e-01 8.5470790e-01]

[3.6275440e-01 7.5717753e-01 4.6149722e-01 7.8934973e-01]

[5.5307239e-01 5.2523065e-01 6.6746283e-01 5.5445266e-01]

[3.1785089e-01 4.5512110e-01 3.3233309e-01 4.6439195e-01]

[4.1034609e-01 3.8362762e-01 4.7151357e-01 4.1939363e-01]

[3.3015379e-01 4.4123974e-01 3.4820625e-01 4.5039704e-01]

[5.6086457e-01 4.0125006e-01 6.4354509e-01 4.3456870e-01]

[7.1788090e-01 2.4195449e-01 8.1452870e-01 3.1889099e-01]

[3.5509771e-01 3.8778654e-01 3.9057735e-01 3.9996943e-01]]], shape=(1, 100, 4), dtype=float32)

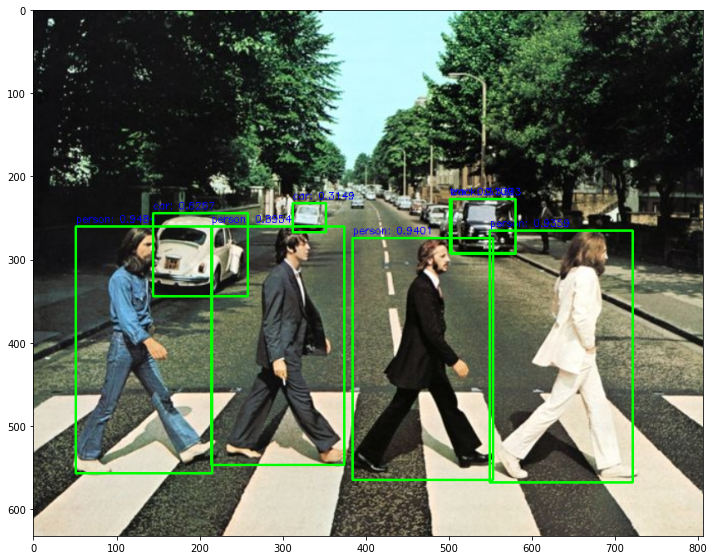

Visualizing the inference results on the image

# Convert every value in result to NumPy.

result = {key:value.numpy() for key,value in result.items()}

# COCO class id mapping for ids 1 through 91.

labels_to_names = {1:'person',2:'bicycle',3:'car',4:'motorcycle',5:'airplane',6:'bus',7:'train',8:'truck',9:'boat',10:'traffic light',

11:'fire hydrant',12:'street sign',13:'stop sign',14:'parking meter',15:'bench',16:'bird',17:'cat',18:'dog',19:'horse',20:'sheep',

21:'cow',22:'elephant',23:'bear',24:'zebra',25:'giraffe',26:'hat',27:'backpack',28:'umbrella',29:'shoe',30:'eye glasses',

31:'handbag',32:'tie',33:'suitcase',34:'frisbee',35:'skis',36:'snowboard',37:'sports ball',38:'kite',39:'baseball bat',40:'baseball glove',

41:'skateboard',42:'surfboard',43:'tennis racket',44:'bottle',45:'plate',46:'wine glass',47:'cup',48:'fork',49:'knife',50:'spoon',

51:'bowl',52:'banana',53:'apple',54:'sandwich',55:'orange',56:'broccoli',57:'carrot',58:'hot dog',59:'pizza',60:'donut',

61:'cake',62:'chair',63:'couch',64:'potted plant',65:'bed',66:'mirror',67:'dining table',68:'window',69:'desk',70:'toilet',

71:'door',72:'tv',73:'laptop',74:'mouse',75:'remote',76:'keyboard',77:'cell phone',78:'microwave',79:'oven',80:'toaster',

81:'sink',82:'refrigerator',83:'blender',84:'book',85:'clock',86:'vase',87:'scissors',88:'teddy bear',89:'hair drier',90:'toothbrush',

91:'hair brush'}
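The model returns class ids as float32 values, so a lookup in this mapping is clearer with an explicit cast to int, and a fallback avoids a KeyError for ids that are not in the table. This is a minimal sketch; `class_name` and its `default` argument are hypothetical, and `names_excerpt` is only a small excerpt of the mapping above.

```python
def class_name(class_id, names, default="unknown"):
    """Map a float class id to its label, falling back to `default` for missing ids."""
    return names.get(int(class_id), default)

# Excerpt of the label map above.
names_excerpt = {1: "person", 3: "car", 8: "truck", 41: "skateboard"}

# Class ids as floats, the way the model returns them; 92.0 is deliberately not in the map.
detected = [1.0, 3.0, 8.0, 41.0, 92.0]
labels = [class_name(c, names_excerpt) for c in detected]
print(labels)
```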

def get_detector(module_handle="https://tfhub.dev/tensorflow/efficientdet/d0/1"):
    detector = hub.load(module_handle)
    return detector

detector_model = get_detector()

WARNING:absl:Importing a function (__inference___call___32344) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D0_layer_call_and_return_conditional_losses_97451) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_bifpn_layer_call_and_return_conditional_losses_77595) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D0_layer_call_and_return_conditional_losses_103456) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D0_layer_call_and_return_conditional_losses_93843) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D0_layer_call_and_return_conditional_losses_107064) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_bifpn_layer_call_and_return_conditional_losses_75975) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

import cv2

img_array = cv2.cvtColor(cv2.imread('/content/data/beatles01.jpg'), cv2.COLOR_BGR2RGB)

# Bounding boxes are predicted in normalized coordinates, so the original image shape is needed to map them back.
height = img_array.shape[0]
width = img_array.shape[1]

# cv2.rectangle() draws directly on the array it is given, so make a separate copy for drawing.
draw_img = img_array.copy()

# Colors for the bounding box border and the caption text. draw_img is in RGB order, so red is (255, 0, 0).
green_color=(0, 255, 0)
red_color=(255, 0, 0)

# Convert the NumPy image array loaded with cv2 to a tensor.
img_tensor = tf.convert_to_tensor(img_array, dtype=tf.uint8)[tf.newaxis, ...]
#img_tensor = tf.convert_to_tensor(img_array, dtype=tf.float32)[tf.newaxis, ...]

# Run inference with the downloaded pretrained model.
result = detector_model(img_tensor)

# Convert the values in result to NumPy.
result = {key:value.numpy() for key,value in result.items()}

SCORE_THRESHOLD = 0.3

OBJECT_DEFAULT_COUNT = 100

# Iterate over the detections and extract their information. The model returns 100 detections.
for i in range(min(result['detection_scores'][0].shape[0], OBJECT_DEFAULT_COUNT)):
    # Scores are sorted in descending order, so stop as soon as one falls below SCORE_THRESHOLD.
    score = result['detection_scores'][0, i]
    if score < SCORE_THRESHOLD:
        break
    # Boxes are predicted in normalized coordinates, so scale them back to the original image size.
    box = result['detection_boxes'][0, i]
    # Caution: box is in ymin, xmin, ymax, xmax order.
    left = box[1] * width
    top = box[0] * height
    right = box[3] * width
    bottom = box[2] * height
    # Extract the class id and map it to a class name. The id is a float32, so cast it to int for the lookup.
    class_id = int(result['detection_classes'][0, i])
    caption = "{}: {:.4f}".format(labels_to_names[class_id], score)
    print(caption)
    # cv2.rectangle() draws the rectangle on draw_img. Position arguments must be integers.
    cv2.rectangle(draw_img, (int(left), int(top)), (int(right), int(bottom)), color=green_color, thickness=2)
    cv2.putText(draw_img, caption, (int(left), int(top - 5)), cv2.FONT_HERSHEY_SIMPLEX, 0.4, red_color, 1)

plt.figure(figsize=(12, 12))

plt.imshow(draw_img)

person: 0.9484

person: 0.9401

person: 0.9359

person: 0.8954

car: 0.6267

car: 0.5109

truck: 0.3303

car: 0.3149

import time

def get_detected_img(model, img_array, score_threshold, object_show_count=100, is_print=True):
    # Bounding boxes are predicted in normalized coordinates, so the original image shape is needed to map them back.
    height = img_array.shape[0]
    width = img_array.shape[1]

    # cv2.rectangle() draws directly on the array it is given, so make a separate copy for drawing.
    draw_img = img_array.copy()

    # Colors for the bounding box border and the caption text. draw_img is in RGB order, so red is (255, 0, 0).
    green_color=(0, 255, 0)
    red_color=(255, 0, 0)

    # Convert the NumPy image array loaded with cv2 to a tensor.
    img_tensor = tf.convert_to_tensor(img_array, dtype=tf.uint8)[tf.newaxis, ...]
    #img_tensor = tf.convert_to_tensor(img_array, dtype=tf.float32)[tf.newaxis, ...]

    # Run inference with the EfficientDet model.
    start_time = time.time()
    # The inference result is a dict whose values are tensors.
    result = model(img_tensor)
    # Convert the values in result to NumPy.
    result = {key:value.numpy() for key,value in result.items()}

    # Iterate over the detections and extract their information. The model returns 100 detections.
    for i in range(min(result['detection_scores'][0].shape[0], object_show_count)):
        # Scores are sorted in descending order, so stop as soon as one falls below score_threshold.
        score = result['detection_scores'][0, i]
        if score < score_threshold:
            break
        # Boxes are predicted in normalized coordinates, so scale them back to the original image size.
        box = result['detection_boxes'][0, i]
        # Caution: box is in ymin, xmin, ymax, xmax order.
        left = box[1] * width
        top = box[0] * height
        right = box[3] * width
        bottom = box[2] * height
        # Extract the class id and map it to a class name. The id is a float32, so cast it to int for the lookup.
        class_id = int(result['detection_classes'][0, i])
        caption = "{}: {:.4f}".format(labels_to_names[class_id], score)
        print(caption)
        # cv2.rectangle() draws the rectangle on draw_img. Position arguments must be integers.
        cv2.rectangle(draw_img, (int(left), int(top)), (int(right), int(bottom)), color=green_color, thickness=2)
        cv2.putText(draw_img, caption, (int(left), int(top - 5)), cv2.FONT_HERSHEY_SIMPLEX, 0.4, red_color, 1)

    if is_print:
        print('Detection time:', round(time.time() - start_time, 2), "sec")

    return draw_img

img_array = cv2.cvtColor(cv2.imread('/content/data/beatles01.jpg'), cv2.COLOR_BGR2RGB)

draw_img = get_detected_img(detector_model, img_array, score_threshold=0.4, object_show_count=100, is_print=True)

plt.figure(figsize=(12, 12))

plt.imshow(draw_img)

person: 0.9484

person: 0.9401

person: 0.9359

person: 0.8954

car: 0.6267

car: 0.5109

Detection time: 0.36 sec

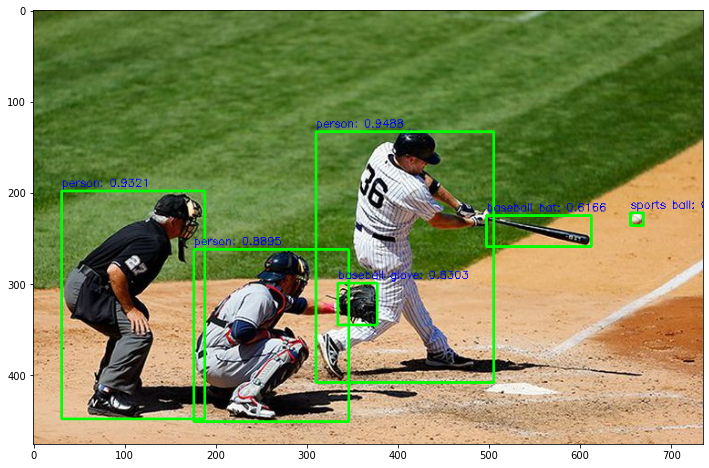

!wget -O ./data/baseball01.jpg https://raw.githubusercontent.com/chulminkw/DLCV/master/data/image/baseball01.jpg

img_array = cv2.cvtColor(cv2.imread('/content/data/baseball01.jpg'), cv2.COLOR_BGR2RGB)

draw_img = get_detected_img(detector_model, img_array, score_threshold=0.4, object_show_count=100, is_print=True)

plt.figure(figsize=(12, 12))

plt.imshow(draw_img)

person: 0.9570

person: 0.9252

person: 0.9068

baseball glove: 0.6338

baseball bat: 0.5929

Detection time: 0.34 sec

Run inference with the EfficientDet D2 model.

detector_model_d2 = get_detector('https://tfhub.dev/tensorflow/efficientdet/d2/1')

WARNING:absl:Importing a function (__inference_EfficientDet-D2_layer_call_and_return_conditional_losses_130857) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference___call___38449) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D2_layer_call_and_return_conditional_losses_145024) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_bifpn_layer_call_and_return_conditional_losses_99017) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D2_layer_call_and_return_conditional_losses_139687) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_EfficientDet-D2_layer_call_and_return_conditional_losses_125520) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

WARNING:absl:Importing a function (__inference_bifpn_layer_call_and_return_conditional_losses_101605) with ops with unsaved custom gradients. Will likely fail if a gradient is requested.

img_array = cv2.cvtColor(cv2.imread('/content/data/baseball01.jpg'), cv2.COLOR_BGR2RGB)

draw_img = get_detected_img(detector_model_d2, img_array, score_threshold=0.4, object_show_count=100, is_print=True)

plt.figure(figsize=(12, 12))

plt.imshow(draw_img)

person: 0.9488

person: 0.9321

person: 0.8895

baseball glove: 0.8303

baseball bat: 0.6166

sports ball: 0.5037

Detection time: 7.82 sec
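The D2 time above comes from the first call to a freshly loaded model, so it may include one-time setup cost and probably overstates steady-state latency. A minimal sketch of timing with warm-up runs excluded follows. The helper `time_inference` and the stand-in `fake_detector` are hypothetical; for a real model, `model_fn` would be assumed to wrap the detector call and convert its outputs to NumPy so that the computation has finished before the timer stops.

```python
import time


def time_inference(model_fn, model_input, warmup_runs=1, timed_runs=3):
    """Return the average seconds per call, excluding warm-up calls."""
    # Warm-up calls absorb any one-time setup cost and are not timed.
    for _ in range(warmup_runs):
        model_fn(model_input)
    start = time.perf_counter()
    for _ in range(timed_runs):
        model_fn(model_input)
    return (time.perf_counter() - start) / timed_runs


def fake_detector(x):
    """Stand-in for a real detector call (assumption, for illustration)."""
    time.sleep(0.01)
    return x


avg_seconds = time_inference(fake_detector, "dummy input")
print(f"average per call: {avg_seconds:.3f} sec")
```

Comparing D0 and D2 on the same image with the same number of warm-up runs should give a fairer picture than comparing a warmed-up D0 call with a first D2 call.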

Run video inference

!wget -O ./data/John_Wick_small.mp4 https://github.com/chulminkw/DLCV/blob/master/data/video/John_Wick_small.mp4?raw=true

def do_detected_video(model, input_path, output_path, score_threshold, is_print):
    cap = cv2.VideoCapture(input_path)

    # Create a writer that matches the size and frame rate of the input video.
    codec = cv2.VideoWriter_fourcc(*'XVID')
    vid_size = (round(cap.get(cv2.CAP_PROP_FRAME_WIDTH)), round(cap.get(cv2.CAP_PROP_FRAME_HEIGHT)))
    vid_fps = cap.get(cv2.CAP_PROP_FPS)
    vid_writer = cv2.VideoWriter(output_path, codec, vid_fps, vid_size)

    frame_cnt = int(cap.get(cv2.CAP_PROP_FRAME_COUNT))
    print('Total frame count:', frame_cnt)

    green_color=(0, 255, 0)
    red_color=(0, 0, 255)
    while True:
        hasFrame, img_frame = cap.read()
        if not hasFrame:
            print('No more frames to process.')
            break
        # Note: cap.read() returns frames in BGR order, and they are passed to the model as-is here.
        img_frame = get_detected_img(model, img_frame, score_threshold=score_threshold, object_show_count=100, is_print=is_print)
        vid_writer.write(img_frame)
    # end of while loop
    vid_writer.release()
    cap.release()

do_detected_video(detector_model, '/content/data/John_Wick_small.mp4', './data/John_Wick_small_02.mp4', 0.5, True)

Total frame count: 58

car: 0.7054

car: 0.6741

car: 0.6634

car: 0.6490

Detection time: 0.3 sec

car: 0.7121

car: 0.6782

car: 0.6640

car: 0.6493

Detection time: 0.31 sec

car: 0.6018

motorcycle: 0.5719

car: 0.5339

person: 0.5157

car: 0.5006

person: 0.5002

Detection time: 0.33 sec

motorcycle: 0.5697

car: 0.5522

car: 0.5182

person: 0.5053

Detection time: 0.3 sec

car: 0.6813

motorcycle: 0.5480

car: 0.5432

person: 0.5387

Detection time: 0.3 sec

car: 0.6372

motorcycle: 0.5610

car: 0.5422

Detection time: 0.29 sec

car: 0.6398

motorcycle: 0.5655

car: 0.5501

car: 0.5255

Detection time: 0.28 sec

car: 0.7404

car: 0.7017

car: 0.6398

motorcycle: 0.5679

person: 0.5268

Detection time: 0.29 sec

car: 0.6955

car: 0.6933

car: 0.6458

motorcycle: 0.5257

Detection time: 0.29 sec

car: 0.7060

car: 0.6844

car: 0.6742

motorcycle: 0.5419

Detection time: 0.29 sec

car: 0.7386

car: 0.6630

car: 0.6616

motorcycle: 0.5867

Detection time: 0.29 sec

car: 0.7788

car: 0.7119

car: 0.6659

motorcycle: 0.5716

Detection time: 0.29 sec

car: 0.7903

person: 0.6799

car: 0.6535

car: 0.5919

motorcycle: 0.5642

person: 0.5333

Detection time: 0.29 sec

car: 0.7585

car: 0.6350

person: 0.6308

person: 0.5748

car: 0.5594

Detection time: 0.29 sec

car: 0.7656

person: 0.6383

car: 0.6349

car: 0.5677

person: 0.5621

motorcycle: 0.5030

Detection time: 0.28 sec

car: 0.7444

car: 0.6351

person: 0.6318

car: 0.5491

motorcycle: 0.5110

Detection time: 0.3 sec

car: 0.7239

car: 0.6659

person: 0.6601

motorcycle: 0.6324

person: 0.5235

Detection time: 0.29 sec

car: 0.6936

person: 0.6540

car: 0.6427

motorcycle: 0.6184

Detection time: 0.29 sec

person: 0.7235

car: 0.7211

car: 0.6419

motorcycle: 0.6291

car: 0.5875

car: 0.5150

Detection time: 0.29 sec

car: 0.7176

person: 0.7174

car: 0.6390

motorcycle: 0.6266

car: 0.5862

car: 0.5188

Detection time: 0.3 sec

car: 0.7741

person: 0.7211

motorcycle: 0.6405

car: 0.5664

car: 0.5461

person: 0.5386

Detection time: 0.28 sec

car: 0.7878

person: 0.7171

car: 0.6266

motorcycle: 0.6054

car: 0.5526

Detection time: 0.28 sec

car: 0.7586

car: 0.6461

car: 0.6202

person: 0.6173

person: 0.5188

car: 0.5160

Detection time: 0.31 sec

person: 0.6640

person: 0.6427

car: 0.6098

horse: 0.6051

bicycle: 0.5354

Detection time: 0.27 sec

person: 0.6494

person: 0.6488

horse: 0.6284

car: 0.5893

bicycle: 0.5526

Detection time: 0.28 sec

person: 0.5867

person: 0.5624

bicycle: 0.5620

horse: 0.5345

Detection time: 0.29 sec

person: 0.7165

car: 0.6705

horse: 0.5624

bicycle: 0.5571

person: 0.5267

Detection time: 0.3 sec

person: 0.7392

horse: 0.6653

person: 0.6073

car: 0.5965

motorcycle: 0.5671

Detection time: 0.29 sec

person: 0.8238

horse: 0.7039

car: 0.7006

motorcycle: 0.6065

person: 0.5841

Detection time: 0.28 sec

person: 0.8135

horse: 0.7154

car: 0.7002

motorcycle: 0.6138

person: 0.5630

Detection time: 0.3 sec

person: 0.8248

horse: 0.6896

person: 0.6198

car: 0.6137

motorcycle: 0.5657

Detection time: 0.29 sec

person: 0.7325

horse: 0.6855

car: 0.6851

person: 0.6655

motorcycle: 0.6141

Detection time: 0.28 sec

car: 0.7927

person: 0.7040

horse: 0.6783

person: 0.6495

motorcycle: 0.5853

Detection time: 0.27 sec

car: 0.7433

person: 0.5885

person: 0.5693

bicycle: 0.5155

Detection time: 0.28 sec

car: 0.7356

person: 0.5936

person: 0.5531

bicycle: 0.5072

Detection time: 0.28 sec

car: 0.6917

person: 0.6702

person: 0.6286

motorcycle: 0.6036

Detection time: 0.29 sec

car: 0.7995

person: 0.6749

motorcycle: 0.6159

person: 0.5632

horse: 0.5463

Detection time: 0.3 sec

car: 0.7973

person: 0.6764

person: 0.6213

Detection time: 0.29 sec

car: 0.8104

person: 0.6457

person: 0.6258

motorcycle: 0.5341

Detection time: 0.29 sec

car: 0.8288

person: 0.6424

person: 0.6219

motorcycle: 0.5400

Detection time: 0.29 sec

car: 0.8241

person: 0.6231

person: 0.5867

car: 0.5452

Detection time: 0.3 sec

car: 0.8452

car: 0.7434

horse: 0.6338

person: 0.5680

person: 0.5553

Detection time: 0.29 sec

car: 0.8632

car: 0.6939

horse: 0.6710

person: 0.5565

Detection time: 0.28 sec

car: 0.8645

horse: 0.7133

car: 0.6535

person: 0.6077

Detection time: 0.29 sec

car: 0.8637

horse: 0.7250

car: 0.6580

person: 0.6062

Detection time: 0.28 sec

car: 0.8458

car: 0.6883

person: 0.6304

Detection time: 0.29 sec

car: 0.8172

car: 0.5968

car: 0.5710

person: 0.5665

Detection time: 0.3 sec

car: 0.7921

car: 0.6119

horse: 0.5238

Detection time: 0.28 sec

car: 0.7709

car: 0.6165

person: 0.5716

car: 0.5662

horse: 0.5606

Detection time: 0.29 sec

car: 0.7777

car: 0.6200

car: 0.5678

person: 0.5671

horse: 0.5564

Detection time: 0.29 sec

car: 0.7557

horse: 0.5966

person: 0.5510

Detection time: 0.29 sec

car: 0.7844

person: 0.5607

horse: 0.5251

Detection time: 0.3 sec

car: 0.7524

person: 0.5303

Detection time: 0.3 sec

car: 0.7289

car: 0.5995

Detection time: 0.3 sec

car: 0.7325

car: 0.6154

Detection time: 0.3 sec

car: 0.7161

car: 0.6772

car: 0.5412

horse: 0.5058

Detection time: 0.3 sec

car: 0.7333

car: 0.7279

car: 0.6378

Detection time: 0.31 sec

car: 0.8108

car: 0.7212

car: 0.7009

horse: 0.5520

Detection time: 0.29 sec

No more frames to process.
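The still images earlier were converted with `cv2.COLOR_BGR2RGB` before inference, whereas the video loop passes the BGR frames from `cap.read()` straight to the model. A minimal sketch of one way to keep the two paths consistent follows, assuming the detector expects RGB input and returns an annotated RGB image. The wrapper `detect_on_bgr_frame` and the stand-in `fake_detect` are hypothetical, and only NumPy is used so the sketch runs without a model.

```python
import numpy as np


def detect_on_bgr_frame(detect_fn, bgr_frame):
    """Run an RGB-expecting detector on a BGR frame and return a BGR frame.

    detect_fn is a hypothetical callable that takes an RGB uint8 array
    and returns an annotated RGB uint8 array of the same shape."""
    # Reversing the last axis swaps the B and R channels (BGR -> RGB).
    rgb_frame = np.ascontiguousarray(bgr_frame[:, :, ::-1])
    annotated_rgb = detect_fn(rgb_frame)
    # Swap back so that cv2.VideoWriter receives BGR again.
    return np.ascontiguousarray(annotated_rgb[:, :, ::-1])


def fake_detect(rgb):
    """Stand-in detector that returns its input unchanged (assumption)."""
    return rgb


# A 2x2 test frame whose pixels are all B=10, G=20, R=30.
frame = np.zeros((2, 2, 3), dtype=np.uint8)
frame[:, :] = (10, 20, 30)
out = detect_on_bgr_frame(fake_detect, frame)
print(out[0, 0])
```

In the video loop, `detect_fn` could be a lambda that calls `get_detected_img(model, rgb, ...)` with the same keyword arguments as before. Colors drawn inside `get_detected_img` would then be interpreted as RGB and swapped along with the frame, so the box and caption colors in the written video may differ from the current output, and detection scores may change as well.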
