728x90

반응형

# 버전 확인

import torch

print(torch.__version__)# mmcv를 위해서 mmcv-full을 설치해야 함.

!pip install mmcv-fullfrom google.colab import drive

drive.mount('/content/drive')!git clone https://github.com/open-mmlab/mmdetection.git

!cd mmdetection; python setup.py install# 아래를 수행하기 전에 kernel을 restart 해야 함.

# 런타임 초기화하면 설치한 파일 날라감

from mmdet.apis import init_detector, inference_detector

import mmcvMS-COCO 데이터 기반으로 Faster RCNN Pretrained 모델을 활용하여 Inference 수행

- Faster RCNN Pretrained 모델 다운로드

- Faster RCNN용 Config 파일 설정.

- Inference 용 모델을 생성하고, Inference 적용

# pretrained weight 모델을 다운로드 받기 위해서 mmdetection/checkpoints 디렉토리를 만듬.

!cd mmdetection; mkdir checkpoints# pretrained model faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth 설치

!wget -O /content/mmdetection/checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth http://download.openmmlab.com/mmdetection/v2.0/faster_rcnn/faster_rcnn_r50_fpn_1x_coco/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth# pretrained model 경로 확인

!ls -lia /content/mmdetection/checkpoints

# total 163376

# 3951436 drwxr-xr-x 2 root root 4096 Oct 10 13:13 .

# 3932841 drwxr-xr-x 19 root root 4096 Oct 10 13:13 ..

# 3951438 -rw-r--r-- 1 root root 167287506 Aug 28 2020 faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth

# config 파일을 설정하고, 다운로드 받은 pretrained 모델을 checkpoint로 설정.

config_file = '/content/mmdetection/configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py'

checkpoint_file = '/content/mmdetection/checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth'# config 파일과 pretrained 모델을 기반으로 Detector 모델을 생성.

from mmdet.apis import init_detector, inference_detector

model = init_detector(config_file, checkpoint_file, device='cuda:0') # gpu 지정

# /usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/core/anchor/builder.py:17: UserWarning: ``build_anchor_generator`` would be deprecated soon, please use ``build_prior_generator``

# '``build_anchor_generator`` would be deprecated soon, please use '

# Use load_from_local loader# mmdetection은 상대 경로를 인자로 주면 무조건 mmdetection 디렉토리를 기준으로 함.

%cd mmdetection

from mmdet.apis import init_detector, inference_detector

# init_detector() 인자로 config와 checkpoint를 입력함.

model = init_detector(config='configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py', checkpoint='checkpoints/faster_rcnn_r50_fpn_1x_coco_20200130-047c8118.pth')

# /content/mmdetection

# /usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/core/anchor/builder.py:17: UserWarning: ``build_anchor_generator`` would be deprecated soon, please use ``build_prior_generator``

# '``build_anchor_generator`` would be deprecated soon, please use '

# Use load_from_local loader# mmdetection/configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py - _base_

=> 모델 별로 각각 다른 config를 가지는게 아니라 공통의 config를 지님

mmdetection/configs/_base_/models/fast_rcnn_r50_fpn.py에 neck=dict에 type=fpn으로 되어있음

참고로 나중에 커스컴 할 때 num_classes = n을 바꿔야함, coco dataset이 n개라서

%cd /content

# /contentimport cv2

import matplotlib.pyplot as plt

img = '/content/mmdetection/demo/demo.jpg'

img_arr = cv2.cvtColor(cv2.imread(img), cv2.COLOR_BGR2RGB)

plt.figure(figsize=(12, 12))

plt.imshow(img_arr)

img = '/content/mmdetection/demo/demo.jpg'

# inference_detector의 인자로 string(file경로), ndarray가 단일 또는 list형태로 입력 될 수 있음.

results = inference_detector(model, img)

# /usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/datasets/utils.py:69: UserWarning: "ImageToTensor" pipeline is replaced by "DefaultFormatBundle" for batch inference. It is recommended to manually replace it in the test data pipeline in your config file.

# 'data pipeline in your config file.', UserWarning)

# /usr/local/lib/python3.7/dist-packages/torch/nn/functional.py:718: UserWarning: Named tensors and all their associated APIs are an experimental feature and subject to change. Please do not use them for anything important until they are released as stable. (Triggered internally at /pytorch/c10/core/TensorImpl.h:1156.)

# return torch.max_pool2d(input, kernel_size, stride, padding, dilation, ceil_mode)

# /usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/core/anchor/anchor_generator.py:324: UserWarning: ``grid_anchors`` would be deprecated soon. Please use ``grid_priors``

# warnings.warn('``grid_anchors`` would be deprecated soon. '

# /usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/core/anchor/anchor_generator.py:361: UserWarning: ``single_level_grid_anchors`` would be deprecated soon. Please use ``single_level_grid_priors``

# '``single_level_grid_anchors`` would be deprecated soon. 'type(results), len(results)

# (list, 80)

# len이 80개인데, detected 가 80개가 아니라 cocodataset이 80개임MS-COCO pretrained model의 inference 반환 결과

# results는 list형으로 coco class의 0부터 79까지 class_id별로 80개의 array를 가짐.

# 개별 array들은 각 클래스별로 5개의 값(좌표값과 class별로 confidence)을 가짐. 개별 class별로 여러개의 좌표를 가지면 여러개의 array가 생성됨.

# 좌표는 좌상단(xmin, ymin), 우하단(xmax, ymax) 기준.

# 개별 array의 shape는 (Detection된 object들의 수, 5(좌표와 confidence)) 임

results

class)id 0:'person', 1:'bicycle, 2:'car'

[array([[3.75348572e+02, 1.19171005e+02, 3.81950867e+02, 1.34460617e+02,

1.35454327e-01], # 좌표값 좌상단(xmin, ymin), 우하단(xmax, ymax), class confidence

[5.32362000e+02, 1.09554726e+02, 5.40526550e+02, 1.25222633e+02,

8.88783410e-02], # 좌표값 좌상단(xmin, ymin), 우하단(xmax, ymax), class confidence

[3.61124298e+02, 1.09049202e+02, 3.68625610e+02, 1.22483063e+02,

7.20723420e-02]], dtype=float32), # 좌표값 좌상단(xmin, ymin), 우하단(xmax, ymax), class confidence

# class id는 별도로 없고, results[0]이 0번임

# 내부에서 정규화를 해버림

array([], shape=(0, 5), dtype=float32), # 0번 class id는 detect가 안됬다.

array([[6.09650024e+02, 1.13805893e+02, 6.34511658e+02, 1.36951904e+02,

9.88766134e-01],

[4.81773712e+02, 1.10480980e+02, 5.22459717e+02, 1.30407104e+02,

9.87157285e-01],

[1.01822114e+00, 1.12144730e+02, 6.04374733e+01, 1.44173752e+02,

9.83206093e-01],

[2.94623718e+02, 1.17035240e+02, 3.78022705e+02, 1.50550873e+02,

9.71326768e-01],

[3.96328979e+02, 1.11203323e+02, 4.32490540e+02, 1.32729263e+02,

9.67802167e-01],

[5.90976257e+02, 1.10802658e+02, 6.15401794e+02, 1.26493553e+02,

9.59414959e-01],

[2.67582001e+02, 1.05686005e+02, 3.28818756e+02, 1.28226547e+02,

9.59253132e-01],

[1.66856735e+02, 1.08006607e+02, 2.19100693e+02, 1.40194809e+02,

9.56841350e-01],

[1.89769577e+02, 1.09801109e+02, 3.00310852e+02, 1.53781891e+02,

9.51012135e-01],

[4.29822540e+02, 1.05655380e+02, 4.82741516e+02, 1.32376724e+02,

9.45849955e-01],

[5.55000916e+02, 1.09785004e+02, 5.92761780e+02, 1.27808495e+02,

9.43992615e-01],

[5.96790352e+01, 9.31828003e+01, 8.34545441e+01, 1.06242912e+02,

9.33143973e-01],

[9.78446579e+01, 8.96542969e+01, 1.18172356e+02, 1.01011108e+02,

8.66323531e-01],

[1.43898987e+02, 9.61869888e+01, 1.64599792e+02, 1.04979256e+02,

8.26784551e-01],

[8.55894241e+01, 8.99445801e+01, 9.88920746e+01, 9.85285416e+01,

7.53480315e-01],

[9.78282700e+01, 9.07443695e+01, 1.10298050e+02, 9.97373276e+01,

7.16600597e-01],

[2.23579224e+02, 9.85184631e+01, 2.49845108e+02, 1.07509857e+02,

6.00782037e-01],

[1.68928635e+02, 9.59468994e+01, 1.82843445e+02, 1.05694962e+02,

5.91998756e-01],

[1.35021347e+02, 9.08739395e+01, 1.50607025e+02, 1.02798874e+02,

5.54030240e-01],

[0.00000000e+00, 1.11521950e+02, 1.45326691e+01, 1.25850288e+02,

5.43519914e-01],

[5.53896606e+02, 1.16170540e+02, 5.62602295e+02, 1.26390923e+02,

4.76758897e-01],

[3.75809753e+02, 1.19579056e+02, 3.82376495e+02, 1.32113892e+02,

4.61191744e-01],

[1.37924118e+02, 9.37975311e+01, 1.54497177e+02, 1.04659683e+02,

4.00998443e-01],

[5.55009033e+02, 1.10952698e+02, 5.74925659e+02, 1.26912033e+02,

3.43850523e-01],

[5.54043152e+02, 1.00959076e+02, 5.61297913e+02, 1.10927711e+02,

2.87963450e-01],

[6.14741028e+02, 1.01987068e+02, 6.35481628e+02, 1.12593704e+02,

2.61200219e-01],

[5.70760315e+02, 1.09679398e+02, 5.90286133e+02, 1.27248878e+02,

2.58404434e-01],

[4.78544116e-01, 1.11568169e+02, 2.25040989e+01, 1.42623535e+02,

2.56050110e-01],

[3.75093140e+02, 1.11696442e+02, 4.20536804e+02, 1.33691055e+02,

2.55963236e-01],

[2.62747284e+02, 1.07565620e+02, 3.26765930e+02, 1.43925293e+02,

2.09969625e-01],

[7.91312714e+01, 9.03788834e+01, 1.00247879e+02, 1.01080894e+02,

2.03962341e-01],

[6.09313477e+02, 1.13308510e+02, 6.25961975e+02, 1.25342514e+02,

1.97422847e-01],

[1.35304840e+02, 9.23771439e+01, 1.64080185e+02, 1.04992455e+02,

1.49973527e-01],

[6.73540573e+01, 8.85008087e+01, 8.29853592e+01, 9.73942108e+01,

1.48383990e-01],

[5.40852417e+02, 1.13848946e+02, 5.61855530e+02, 1.26198776e+02,

1.47629887e-01],

[3.51735046e+02, 1.09432655e+02, 4.39310089e+02, 1.34819733e+02,

1.41735107e-01],

[9.63179016e+01, 8.98780594e+01, 1.53287781e+02, 1.01776367e+02,

1.32707968e-01],

[4.54495049e+01, 1.17444977e+02, 6.18955803e+01, 1.44275055e+02,

1.25890508e-01],

[6.06407532e+02, 1.12215973e+02, 6.18935669e+02, 1.24957237e+02,

1.10722415e-01],

[1.02152626e+02, 9.36143646e+01, 1.41081863e+02, 1.01598961e+02,

8.13643038e-02],

[3.98364838e+02, 1.12081459e+02, 4.09389862e+02, 1.32897766e+02,

7.64543191e-02],

[5.39245911e+02, 1.12394836e+02, 5.48756714e+02, 1.21964462e+02,

7.32642636e-02],

[6.09156555e+02, 1.04017456e+02, 6.35472107e+02, 1.26777176e+02,

6.47417754e-02],

[3.75894284e+00, 9.85745239e+01, 7.45848312e+01, 1.35154999e+02,

6.32166639e-02],

[1.68166473e+02, 9.14260483e+01, 2.20303146e+02, 1.07955681e+02,

5.16179129e-02],

[7.09724045e+01, 9.02684860e+01, 1.05398132e+02, 1.03825508e+02,

5.15376776e-02]], dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([[1.8820007e+02, 1.0994706e+02, 3.0047137e+02, 1.5633583e+02,

9.7509645e-02],

[4.2774915e+02, 1.0511559e+02, 4.8345541e+02, 1.3294328e+02,

9.6882291e-02],

[2.9450479e+02, 1.1764229e+02, 3.7863284e+02, 1.5046356e+02,

7.4364766e-02]], dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([[2.1920888e+02, 1.7456262e+02, 4.6010886e+02, 3.7704660e+02,

9.7778010e-01],

[3.7206638e+02, 1.3631432e+02, 4.3219531e+02, 1.8717290e+02,

4.1699746e-01]], dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([[9.13259964e+01, 1.07155769e+02, 1.06029366e+02, 1.19777306e+02,

1.15152948e-01]], dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([[3.72651917e+02, 1.36143082e+02, 4.32053833e+02, 1.88446472e+02,

7.77875960e-01],

[2.18404114e+02, 1.75137848e+02, 4.62107605e+02, 3.65541260e+02,

1.01236075e-01]], dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32),

array([], shape=(0, 5), dtype=float32)]요약

# 2차원 배열로 표현됨

# 사람, 자전거, 차,

results[0].shape, results[1].shape, results[2].shape, results[3].shape

# ((3, 5), (0, 5), (46, 5), (0, 5))

# 3건, 0건, 46건, 0건결과 직접 확인

from mmdet.apis import show_result_pyplot

# inference 된 결과를 원본 이미지에 적용하여 새로운 image로 생성(bbox 처리된 image)

# Default로 score threshold가 0.3 이상인 Object들만 시각화 적용. show_result_pyplot은 model.show_result()를 호출.

show_result_pyplot(model, img, results)

Model의 Config 설정 확인하기

# configs 파일로 모델을 만들었는데 모델 객체안의 변수 확인

model.__dict__

# 80개 class

{'CLASSES': ('person',

'bicycle',

'car',

'motorcycle',

'airplane',

'bus',

'train',

'truck',

'boat',

'traffic_light',

'fire_hydrant',

'stop_sign',

'parking_meter',

'bench',

'bird',

'cat',

'dog',

'horse',

'sheep',

'cow',

'elephant',

'bear',

'zebra',

'giraffe',

'backpack',

'umbrella',

'handbag',

'tie',

'suitcase',

'frisbee',

'skis',

'snowboard',

'sports_ball',

'kite',

'baseball_bat',

'baseball_glove',

'skateboard',

'surfboard',

'tennis_racket',

'bottle',

'wine_glass',

'cup',

'fork',

'knife',

'spoon',

'bowl',

'banana',

'apple',

'sandwich',

'orange',

'broccoli',

'carrot',

'hot_dog',

'pizza',

'donut',

'cake',

'chair',

'couch',

'potted_plant',

'bed',

'dining_table',

'toilet',

'tv',

'laptop',

'mouse',

'remote',

'keyboard',

'cell_phone',

'microwave',

'oven',

'toaster',

'sink',

'refrigerator',

'book',

'clock',

'vase',

'scissors',

'teddy_bear',

'hair_drier',

'toothbrush'),

'_modules': OrderedDict([('backbone', ResNet(

# 모듈은 pytorch, backbone : resnet

'cfg': Config (path: configs/faster_rcnn/faster_rcnn_r50_fpn_1x_coco.py)#print(model.cfg) # 안이쁘게 나옴

print(model.cfg.pretty_text)

model = dict(

type='FasterRCNN',

backbone=dict(

type='ResNet',

depth=50,

num_stages=4,

out_indices=(0, 1, 2, 3),

frozen_stages=1,

norm_cfg=dict(type='BN', requires_grad=True),

norm_eval=True,

style='pytorch',

init_cfg=dict(type='Pretrained', checkpoint='torchvision://resnet50')),

neck=dict(

type='FPN',

in_channels=[256, 512, 1024, 2048],

out_channels=256,

num_outs=5),

rpn_head=dict(

type='RPNHead',

in_channels=256,

feat_channels=256,

anchor_generator=dict(

type='AnchorGenerator',

scales=[8],

ratios=[0.5, 1.0, 2.0],

strides=[4, 8, 16, 32, 64]),

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0.0, 0.0, 0.0, 0.0],

target_stds=[1.0, 1.0, 1.0, 1.0]),

loss_cls=dict(

type='CrossEntropyLoss', use_sigmoid=True, loss_weight=1.0),

loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

roi_head=dict(

type='StandardRoIHead',

bbox_roi_extractor=dict(

type='SingleRoIExtractor',

roi_layer=dict(type='RoIAlign', output_size=7, sampling_ratio=0),

out_channels=256,

featmap_strides=[4, 8, 16, 32]),

bbox_head=dict(

type='Shared2FCBBoxHead',

in_channels=256,

fc_out_channels=1024,

roi_feat_size=7,

num_classes=80,

bbox_coder=dict(

type='DeltaXYWHBBoxCoder',

target_means=[0.0, 0.0, 0.0, 0.0],

target_stds=[0.1, 0.1, 0.2, 0.2]),

reg_class_agnostic=False,

loss_cls=dict(

type='CrossEntropyLoss', use_sigmoid=False, loss_weight=1.0),

loss_bbox=dict(type='L1Loss', loss_weight=1.0)),

train_cfg=None,

test_cfg=dict(

score_thr=0.05,

nms=dict(type='nms', iou_threshold=0.5),

max_per_img=100),

pretrained=None),

train_cfg=None,

test_cfg=dict(

rpn=dict(

nms_pre=1000,

max_per_img=1000,

nms=dict(type='nms', iou_threshold=0.7),

min_bbox_size=0),

rcnn=dict(

score_thr=0.05,

nms=dict(type='nms', iou_threshold=0.5),

max_per_img=100)),

pretrained=None)

dataset_type = 'CocoDataset'

data_root = 'data/coco/'

img_norm_cfg = dict(

mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

train_pipeline = [

dict(type='LoadImageFromFile'),

dict(type='LoadAnnotations', with_bbox=True),

dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='DefaultFormatBundle'),

dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels'])

]

test_pipeline = [

dict(type='LoadImageFromFile'),

dict(

type='MultiScaleFlipAug',

img_scale=(1333, 800),

flip=False,

transforms=[

dict(type='Resize', keep_ratio=True),

dict(type='RandomFlip'),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='ImageToTensor', keys=['img']),

dict(type='Collect', keys=['img'])

])

]

data = dict(

samples_per_gpu=2,

workers_per_gpu=2,

train=dict(

type='CocoDataset',

ann_file='data/coco/annotations/instances_train2017.json',

img_prefix='data/coco/train2017/',

pipeline=[

dict(type='LoadImageFromFile'),

dict(type='LoadAnnotations', with_bbox=True),

dict(type='Resize', img_scale=(1333, 800), keep_ratio=True),

dict(type='RandomFlip', flip_ratio=0.5),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='DefaultFormatBundle'),

dict(type='Collect', keys=['img', 'gt_bboxes', 'gt_labels'])

]),

val=dict(

type='CocoDataset',

ann_file='data/coco/annotations/instances_val2017.json',

img_prefix='data/coco/val2017/',

pipeline=[

dict(type='LoadImageFromFile'),

dict(

type='MultiScaleFlipAug',

img_scale=(1333, 800),

flip=False,

transforms=[

dict(type='Resize', keep_ratio=True),

dict(type='RandomFlip'),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='ImageToTensor', keys=['img']),

dict(type='Collect', keys=['img'])

])

]),

test=dict(

type='CocoDataset',

ann_file='data/coco/annotations/instances_val2017.json',

img_prefix='data/coco/val2017/',

pipeline=[

dict(type='LoadImageFromFile'),

dict(

type='MultiScaleFlipAug',

img_scale=(1333, 800),

flip=False,

transforms=[

dict(type='Resize', keep_ratio=True),

dict(type='RandomFlip'),

dict(

type='Normalize',

mean=[123.675, 116.28, 103.53],

std=[58.395, 57.12, 57.375],

to_rgb=True),

dict(type='Pad', size_divisor=32),

dict(type='DefaultFormatBundle'),

dict(type='Collect', keys=['img'])

])

]))

evaluation = dict(interval=1, metric='bbox')

optimizer = dict(type='SGD', lr=0.02, momentum=0.9, weight_decay=0.0001)

optimizer_config = dict(grad_clip=None)

lr_config = dict(

policy='step',

warmup='linear',

warmup_iters=500,

warmup_ratio=0.001,

step=[8, 11])

runner = dict(type='EpochBasedRunner', max_epochs=12)

checkpoint_config = dict(interval=1)

log_config = dict(interval=50, hooks=[dict(type='TextLoggerHook')])

custom_hooks = [dict(type='NumClassCheckHook')]

dist_params = dict(backend='nccl')

log_level = 'INFO'

load_from = None

resume_from = None

workflow = [('train', 1)]커스텀 할때 모델의 class 가 몇개인가, bbox_head의 num_classes 설정, dataset 설정을 지정해야 함. transforming, annotation 등 설정

array를 inference_detector()에 입력할 경우에는 원본 array를 BGR 형태로 입력 필요(RGB 변환은 내부에서 수행하므로 BGR로 입력 필요)

import cv2

# RGB가 아닌 BGR로 입력

img_arr = cv2.imread('/content/mmdetection/demo/demo.jpg')

results = inference_detector(model, img_arr)

show_result_pyplot(model, img_arr, results)

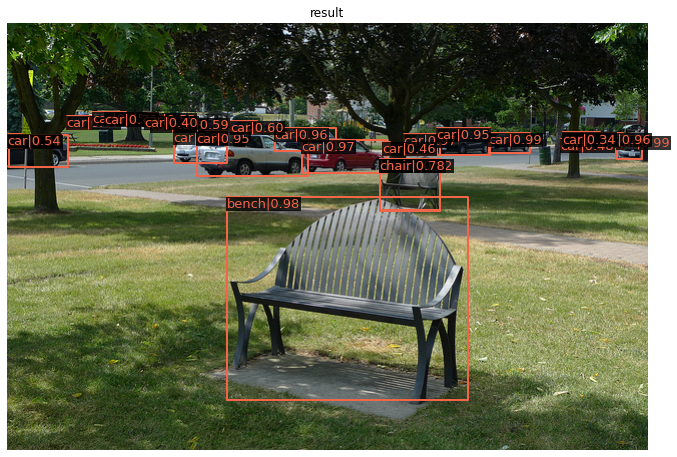

show_result_pyplot()을 이용하지 않고, inference 결과를 image로 표현하기

- model과 image array를 입력하면 해당 image를 detect하고 bbox 처리해주는 get_detected_img() 함수 생성.

- COCO 클래스 매핑은 0 부터 순차적으로 적용됨.

- results에 들어있는 array 값이 없는 경우는 해당 list의 index에 해당하는 class id값으로 object가 Detection되지 않은 것임.

- 개별 class의 score threshold가 낮은 값은 제외.

# 0부터 순차적으로 클래스 매핑된 label 적용.

labels_to_names_seq = {0:'person',1:'bicycle',2:'car',3:'motorbike',4:'aeroplane',5:'bus',6:'train',7:'truck',8:'boat',9:'traffic light',10:'fire hydrant',

11:'stop sign',12:'parking meter',13:'bench',14:'bird',15:'cat',16:'dog',17:'horse',18:'sheep',19:'cow',20:'elephant',

21:'bear',22:'zebra',23:'giraffe',24:'backpack',25:'umbrella',26:'handbag',27:'tie',28:'suitcase',29:'frisbee',30:'skis',

31:'snowboard',32:'sports ball',33:'kite',34:'baseball bat',35:'baseball glove',36:'skateboard',37:'surfboard',38:'tennis racket',39:'bottle',40:'wine glass',

41:'cup',42:'fork',43:'knife',44:'spoon',45:'bowl',46:'banana',47:'apple',48:'sandwich',49:'orange',50:'broccoli',

51:'carrot',52:'hot dog',53:'pizza',54:'donut',55:'cake',56:'chair',57:'sofa',58:'pottedplant',59:'bed',60:'diningtable',

61:'toilet',62:'tvmonitor',63:'laptop',64:'mouse',65:'remote',66:'keyboard',67:'cell phone',68:'microwave',69:'oven',70:'toaster',

71:'sink',72:'refrigerator',73:'book',74:'clock',75:'vase',76:'scissors',77:'teddy bear',78:'hair drier',79:'toothbrush' }

labels_to_names = {1:'person',2:'bicycle',3:'car',4:'motorcycle',5:'airplane',6:'bus',7:'train',8:'truck',9:'boat',10:'traffic light',

11:'fire hydrant',12:'street sign',13:'stop sign',14:'parking meter',15:'bench',16:'bird',17:'cat',18:'dog',19:'horse',20:'sheep',

21:'cow',22:'elephant',23:'bear',24:'zebra',25:'giraffe',26:'hat',27:'backpack',28:'umbrella',29:'shoe',30:'eye glasses',

31:'handbag',32:'tie',33:'suitcase',34:'frisbee',35:'skis',36:'snowboard',37:'sports ball',38:'kite',39:'baseball bat',40:'baseball glove',

41:'skateboard',42:'surfboard',43:'tennis racket',44:'bottle',45:'plate',46:'wine glass',47:'cup',48:'fork',49:'knife',50:'spoon',

51:'bowl',52:'banana',53:'apple',54:'sandwich',55:'orange',56:'broccoli',57:'carrot',58:'hot dog',59:'pizza',60:'donut',

61:'cake',62:'chair',63:'couch',64:'potted plant',65:'bed',66:'mirror',67:'dining table',68:'window',69:'desk',70:'toilet',

71:'door',72:'tv',73:'laptop',74:'mouse',75:'remote',76:'keyboard',77:'cell phone',78:'microwave',79:'oven',80:'toaster',

81:'sink',82:'refrigerator',83:'blender',84:'book',85:'clock',86:'vase',87:'scissors',88:'teddy bear',89:'hair drier',90:'toothbrush',

91:'hair brush'}import numpy as np

# np.where 사용법 예시.

arr1 = np.array([[3.75348572e+02, 1.19171005e+02, 3.81950867e+02, 1.34460617e+02,

1.35454759e-01],

[5.32362000e+02, 1.09554726e+02, 5.40526550e+02, 1.25222633e+02,

8.88786465e-01],

[3.61124298e+02, 1.09049202e+02, 3.68625610e+02, 1.22483063e+02,

7.20717013e-02]], dtype=np.float32)

print(arr1.shape)

arr1_filtered = arr1[np.where(arr1[:, 4] > 0.1)] # 0.1 보다 큰것만 저장

print('### arr1_filtered:', arr1_filtered, arr1_filtered.shape)

(3, 5) # 2차원으로

### arr1_filtered: [[3.75348572e+02 1.19171005e+02 3.81950867e+02 1.34460617e+02

1.35454759e-01]

[5.32362000e+02 1.09554726e+02 5.40526550e+02 1.25222633e+02

8.88786495e-01]] (2, 5)np.where(arr1[:, 4] > 0.1) # row는 전부, 4:class confidence 가 0.1보다 큰 경우

# (array([0, 1]),)

# row 기준으로# model과 원본 이미지 array, filtering할 기준 class confidence score를 인자로 가지는 inference 시각화용 함수 생성.

def get_detected_img(model, img_array, score_threshold=0.3, is_print=True):

# 인자로 들어온 image_array를 복사.

draw_img = img_array.copy()

bbox_color=(0, 255, 0)

text_color=(0, 0, 255)

# model과 image array를 입력 인자로 inference detection 수행하고 결과를 results로 받음.

# results는 80개의 2차원 array(shape=(오브젝트갯수, 5))를 가지는 list.

results = inference_detector(model, img_array)

# 80개의 array원소를 가지는 results 리스트를 loop를 돌면서 개별 2차원 array들을 추출하고 이를 기반으로 이미지 시각화

# results 리스트의 위치 index가 바로 COCO 매핑된 Class id. 여기서는 result_ind가 class id

# 개별 2차원 array에 오브젝트별 좌표와 class confidence score 값을 가짐.

for result_ind, result in enumerate(results):

# 개별 2차원 array의 row size가 0 이면 해당 Class id로 값이 없으므로 다음 loop로 진행.

if len(result) == 0:

continue

# 2차원 array에서 5번째 컬럼에 해당하는 값이 score threshold이며 이 값이 함수 인자로 들어온 score_threshold 보다 낮은 경우는 제외.

result_filtered = result[np.where(result[:, 4] > score_threshold)]

# 해당 클래스 별로 Detect된 여러개의 오브젝트 정보가 2차원 array에 담겨 있으며, 이 2차원 array를 row수만큼 iteration해서 개별 오브젝트의 좌표값 추출.

for i in range(len(result_filtered)):

# 좌상단, 우하단 좌표 추출.

left = int(result_filtered[i, 0])

top = int(result_filtered[i, 1])

right = int(result_filtered[i, 2])

bottom = int(result_filtered[i, 3])

caption = "{}: {:.4f}".format(labels_to_names_seq[result_ind], result_filtered[i, 4])

cv2.rectangle(draw_img, (left, top), (right, bottom), color=bbox_color, thickness=2)

cv2.putText(draw_img, caption, (int(left), int(top - 7)), cv2.FONT_HERSHEY_SIMPLEX, 0.37, text_color, 1)

if is_print:

print(caption)

return draw_imgimport matplotlib.pyplot as plt

img_arr = cv2.imread('/content/mmdetection/demo/demo.jpg')

detected_img = get_detected_img(model, img_arr, score_threshold=0.3, is_print=True)

# detect 입력된 이미지는 bgr임. 이를 최종 출력시 rgb로 변환

detected_img = cv2.cvtColor(detected_img, cv2.COLOR_BGR2RGB)

plt.figure(figsize=(12, 12))

plt.imshow(detected_img)

/usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/core/anchor/anchor_generator.py:324: UserWarning: ``grid_anchors`` would be deprecated soon. Please use ``grid_priors``

warnings.warn('``grid_anchors`` would be deprecated soon. '

car: 0.9888

car: 0.9872

car: 0.9832

car: 0.9713

car: 0.9678

car: 0.9594

car: 0.9593

car: 0.9568

car: 0.9510

car: 0.9458

car: 0.9440

car: 0.9331

car: 0.8663

car: 0.8268

car: 0.7535

car: 0.7166

car: 0.6008

car: 0.5920

car: 0.5540

car: 0.5435

car: 0.4768

car: 0.4612

car: 0.4010

car: 0.3439

bench: 0.9778

bench: 0.4170

chair: 0.7779

/usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/core/anchor/anchor_generator.py:361: UserWarning: ``single_level_grid_anchors`` would be deprecated soon. Please use ``single_level_grid_priors``

'``single_level_grid_anchors`` would be deprecated soon. '

<matplotlib.image.AxesImage at 0x7f7f78bc4a50>

!mkdir data!wget -O /content/data/beatles01.jpg https://raw.githubusercontent.com/chulminkw/DLCV/master/data/image/beatles01.jpg

!ls -lia /content/data/beatles01.jpgimg_arr = cv2.imread('/content/data/beatles01.jpg')

detected_img = get_detected_img(model, img_arr, score_threshold=0.5, is_print=True)

# detect 입력된 이미지는 bgr임. 이를 최종 출력시 rgb로 변환

detected_img = cv2.cvtColor(detected_img, cv2.COLOR_BGR2RGB)

plt.figure(figsize=(12, 12))

plt.imshow(detected_img)

/usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/core/anchor/anchor_generator.py:324: UserWarning: ``grid_anchors`` would be deprecated soon. Please use ``grid_priors``

warnings.warn('``grid_anchors`` would be deprecated soon. '

person: 0.9988

person: 0.9982

person: 0.9980

person: 0.9971

person: 0.9604

car: 0.9693

car: 0.9686

car: 0.9648

car: 0.9517

car: 0.9254

car: 0.9030

car: 0.8312

car: 0.8008

car: 0.7331

car: 0.6208

tie: 0.5924

/usr/local/lib/python3.7/dist-packages/mmdet-2.17.0-py3.7.egg/mmdet/core/anchor/anchor_generator.py:361: UserWarning: ``single_level_grid_anchors`` would be deprecated soon. Please use ``single_level_grid_priors``

'``single_level_grid_anchors`` would be deprecated soon. '

<matplotlib.image.AxesImage at 0x7f7f78bf2e50>

반응형

'Computer_Science > Computer Vision Guide' 카테고리의 다른 글

| 4-7. tiny kitti - dataset (0) | 2021.10.11 |

|---|---|

| 4-6. faster-rcnn pretrained model로 video inference 실행 (0) | 2021.10.10 |

| 4-1~2. pytorch 기반 주요 object detection / segmentation 패키지 (0) | 2021.09.28 |

| 3-13. 모던 object Detection 모델 아키텍처 (0) | 2021.09.27 |

| 3-12. Video inference (0) | 2021.09.27 |