Image-to-Image Translation with Conditional Adversarial Networks

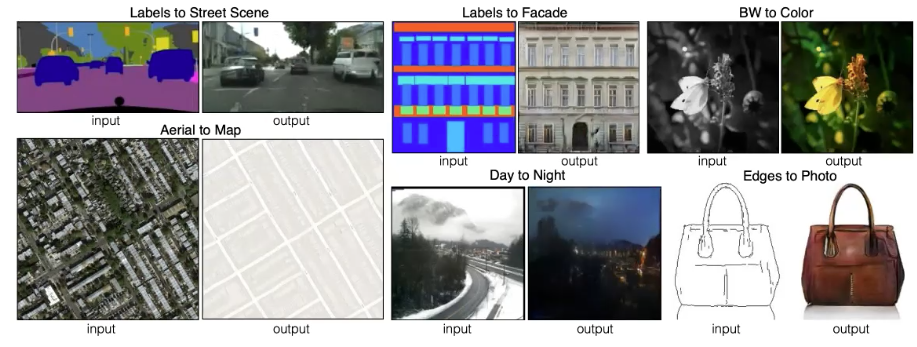

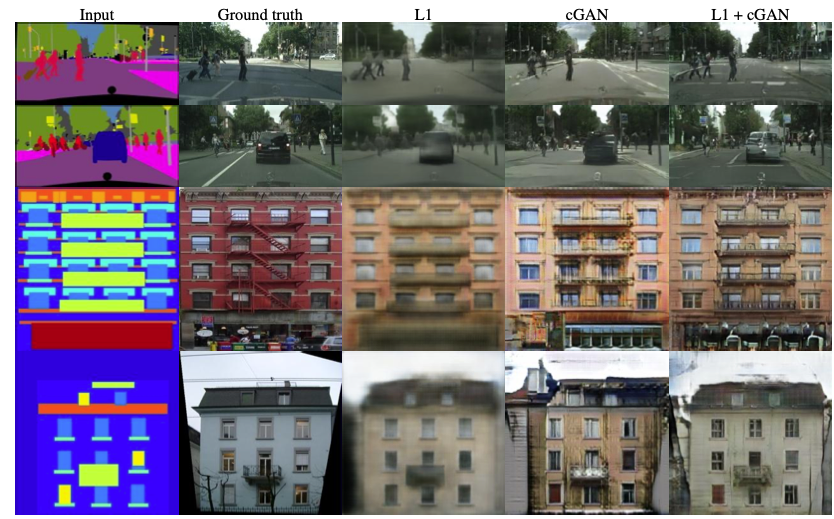

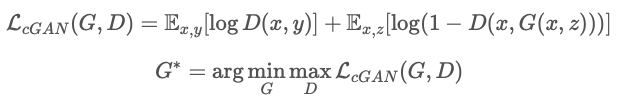

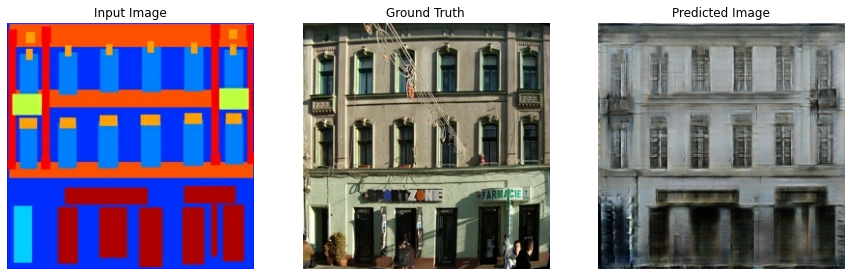

Conditional GAN

A GAN that generates images according to a given condition.

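For image translation, both the generator and the discriminator receive the input image as the condition during training, so the generator learns to produce an output image that corresponds to its input image.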
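For reference, this is the conditional GAN objective as written in the pix2pix paper. Here $x$ is the input (condition) image, $y$ the real target image and $z$ a random noise input. The discriminator $D$ always receives $x$ together with either the real $y$ or the generated $G(x, z)$; $G$ tries to minimize the objective while $D$ tries to maximize it:

$$
\mathcal{L}_{cGAN}(G, D) = \mathbb{E}_{x,y}\left[\log D(x, y)\right] + \mathbb{E}_{x,z}\left[\log\left(1 - D(x, G(x, z))\right)\right]
$$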

This makes it possible to solve problems such as colorizing a grayscale image or drawing an object when only its outline is given.

The model transforms the image pixel by pixel: every pixel of the output is predicted from the input image.
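A minimal NumPy sketch of what "giving the discriminator the condition" means in practice: the input image is stacked channel-wise with either the real target or the generated image, so the discriminator judges the (input, output) pair rather than the output alone. The 256x256 size, the grayscale-to-color setup, the helper name `discriminator_input`, and the random arrays standing in for real data and for `G(x)` are all assumptions for illustration.

```python
import numpy as np

def discriminator_input(condition, image):
    """Stack the conditioning image and a real or generated image along the channel axis."""
    # batch and spatial dimensions must match; only the channel counts may differ
    if condition.shape[:-1] != image.shape[:-1]:
        raise ValueError("condition and image must have the same batch/spatial shape")
    return np.concatenate([condition, image], axis=-1)

rng = np.random.default_rng(0)
x = rng.random((1, 256, 256, 1), dtype=np.float32)       # condition: e.g. a grayscale image
y_real = rng.random((1, 256, 256, 3), dtype=np.float32)  # real color target paired with x
y_fake = rng.random((1, 256, 256, 3), dtype=np.float32)  # stand-in for the generator output G(x)

real_pair = discriminator_input(x, y_real)  # D should score this pair as "real"
fake_pair = discriminator_input(x, y_fake)  # D should score this pair as "fake"
print(real_pair.shape, fake_pair.shape)     # (1, 256, 256, 4) (1, 256, 256, 4)
```

Because both pairs contain the same `x`, the discriminator can only tell them apart by checking whether the output actually matches the input, which is what pushes the generator toward input-consistent results.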

Generator

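The generator is a U-Net: an encoder-decoder whose skip connections pass each encoder layer's features directly to the decoder layer of the same resolution. This structure is effective at preserving the context (the spatial detail) of the input image.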
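A data-flow sketch of why skip connections preserve context, assuming a toy network with no learned weights: 2x2 average pooling stands in for the encoder's strided convolutions and nearest-neighbour upsampling for the decoder's transposed convolutions. As in U-Net (and unlike the `Add` layers in the ResNet example further down), each encoder activation is concatenated onto the decoder activation of the same resolution. The function names are hypothetical.

```python
import numpy as np

def down(x):
    # 2x2 average pooling: (H, W, C) -> (H/2, W/2, C); stand-in for a strided conv
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).mean(axis=(1, 3))

def up(x):
    # nearest-neighbour upsampling: (H, W, C) -> (2H, 2W, C); stand-in for a transposed conv
    return x.repeat(2, axis=0).repeat(2, axis=1)

def tiny_unet(x, depth=3):
    skips = []
    for _ in range(depth):        # encoder: remember each resolution before shrinking
        skips.append(x)
        x = down(x)
    for skip in reversed(skips):  # decoder: grow back and concatenate the matching skip
        x = up(x)
        x = np.concatenate([x, skip], axis=-1)
    return x

x = np.random.default_rng(0).random((64, 64, 3))
out = tiny_unet(x)
print(out.shape)                         # (64, 64, 12)
print(np.array_equal(out[..., -3:], x))  # True: the input reaches the last layer untouched
```

The bottleneck only holds an 8x8 summary, yet the full-resolution input still arrives at the output through the outermost skip, which is how fine detail survives the encoder-decoder.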

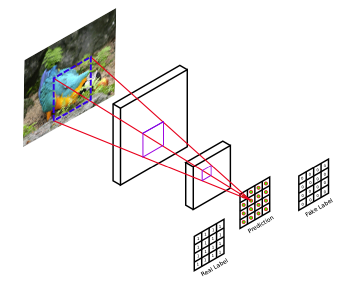

Discriminator

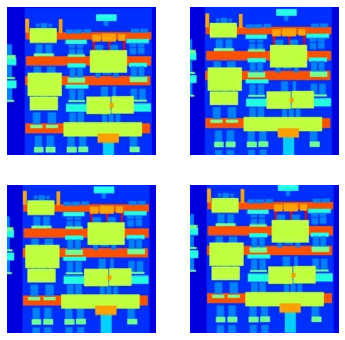

PatchGAN splits the image into small patches and classifies each patch as real or fake.

This lets the model recover finer details in the image, on top of the overall structure that the L1 loss already captures.
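To see how the two losses combine, here is a NumPy sketch of a pix2pix-style generator loss: an adversarial term over the discriminator's patch map plus a weighted L1 term. It assumes binary cross-entropy on raw logits (a common implementation choice) and `LAMBDA = 100`, the L1 weight used in the pix2pix paper; the function names are hypothetical.

```python
import numpy as np

LAMBDA = 100.0  # weight of the L1 term (the value used in the pix2pix paper)

def bce_with_logits(logits, labels):
    # numerically stable binary cross-entropy computed from raw logits, one value per patch
    return np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits)))

def generator_loss(disc_fake_logits, generated, target):
    # adversarial term: G wants every patch of (x, G(x)) to be judged "real" (label 1)
    adv = bce_with_logits(disc_fake_logits, np.ones_like(disc_fake_logits)).mean()
    # L1 term: keeps the overall (low-frequency) structure close to the target
    l1 = np.abs(target - generated).mean()
    return adv + LAMBDA * l1

patch_logits = np.zeros((1, 30, 30, 1))  # a discriminator that is unsure about every patch
y = np.full((1, 256, 256, 3), 0.5)
print(generator_loss(patch_logits, y, y))         # log(2) ~= 0.693, since the L1 term is zero
print(generator_loss(patch_logits, y + 0.01, y))  # ~= 0.693 + 100 * 0.01 = 1.693
```

The L1 term alone would accept a blurry but on-average-correct image; the patch-wise adversarial term is what penalizes missing local texture.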

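As a side benefit, because it operates on regions smaller than the full image, it has fewer parameters and computes faster.

Another advantage is that it generalizes to larger images: the same discriminator can be applied to images bigger than the ones it was trained on.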
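Because the discriminator is fully convolutional, the same weights work for any input size; only the size of the output patch map changes. The sketch below computes that size, assuming a layer stack modeled on the discriminator in TensorFlow's pix2pix tutorial (three 4x4 stride-2 convolutions with `'same'` padding, then two 4x4 stride-1 convolutions each preceded by 1-pixel zero padding). Other implementations may pad differently; the helper names are hypothetical.

```python
def conv_out(size, kernel=4, stride=1, pad=0):
    # standard convolution output-size formula
    return (size + 2 * pad - kernel) // stride + 1

def patchgan_output_size(size):
    for _ in range(3):
        size = -(-size // 2)      # 'same' padding with stride 2 -> ceil(size / 2)
    size = conv_out(size, pad=1)  # ZeroPadding2D + 4x4 stride-1 conv (512 filters)
    size = conv_out(size, pad=1)  # ZeroPadding2D + 4x4 stride-1 conv (1 filter)
    return size

for s in (256, 512):
    print(s, "->", patchgan_output_size(s))
# 256 -> 30
# 512 -> 62
```

A larger image simply yields more patch decisions; no weights have to change, which is what makes applying the discriminator to bigger images possible.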

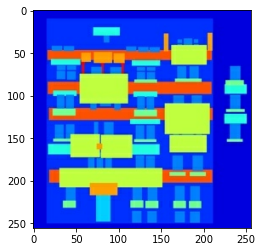

PatchGAN discriminator

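Each value in the discriminator's output is computed from a 70x70 window (its receptive field) of the input image. These windows overlap as they slide across the input, and for a 256x256 input the result is a 30x30 output map.

Each value in this 30x30 map is the real/fake prediction for its corresponding patch.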
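A quick check of where the number 70 comes from, assuming the commonly used 70x70 PatchGAN layer stack: five 4x4 convolutions, the first three with stride 2 and the last two with stride 1. The receptive field is computed backwards from a single output unit; the total stride shows how far apart neighbouring windows start. The names `LAYERS`, `receptive_field` and `total_stride` are hypothetical.

```python
# (kernel, stride) of each conv in the assumed 70x70 PatchGAN, input side first
LAYERS = [(4, 2), (4, 2), (4, 2), (4, 1), (4, 1)]

def receptive_field(layers):
    rf = 1  # start from one output unit and walk back toward the input
    for kernel, stride in reversed(layers):
        rf = (rf - 1) * stride + kernel
    return rf

def total_stride(layers):
    s = 1
    for _, stride in layers:
        s *= stride
    return s

print(receptive_field(LAYERS))  # 70: each output value sees a 70x70 input window
print(total_stride(LAYERS))     # 8: neighbouring outputs' windows are shifted by 8 pixels
```

Since the windows are 70 pixels wide but only 8 pixels apart, the patches overlap heavily; the window slides in steps of 8, not 70.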

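The reasoning behind this: a convolution detects whether a feature is present, but it does not weigh how that feature correlates with data outside its window, so a single prediction made over the whole image cannot be accurate.

Predictions are therefore made patch by patch. The pix2pix paper frames this as modeling the image as a Markov random field, assuming that pixels separated by more than a patch diameter are independent.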
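Patch-by-patch prediction also shapes the discriminator's loss: every entry of the patch map gets its own real/fake label, and the per-patch losses are averaged. A NumPy sketch, assuming binary cross-entropy on raw logits and a 30x30 patch map; the function names are hypothetical.

```python
import numpy as np

def bce_with_logits(logits, labels):
    # numerically stable binary cross-entropy from raw logits, one value per patch
    return np.maximum(logits, 0) - logits * labels + np.log1p(np.exp(-np.abs(logits)))

def discriminator_loss(real_logits, fake_logits):
    # real_logits: D's patch map for (x, y); fake_logits: D's patch map for (x, G(x))
    real = bce_with_logits(real_logits, np.ones_like(real_logits))   # every real patch -> 1
    fake = bce_with_logits(fake_logits, np.zeros_like(fake_logits))  # every fake patch -> 0
    # each patch is judged separately; the per-patch losses are then averaged
    return real.mean() + fake.mean()

unsure = np.zeros((1, 30, 30, 1))
print(discriminator_loss(unsure, unsure))  # 2 * log(2) ~= 1.386

# a discriminator that is right on every patch except one fake patch it calls "real"
real_logits = np.full((1, 30, 30, 1), 10.0)
fake_logits = np.full((1, 30, 30, 1), -10.0)
fake_logits[0, 0, 0, 0] = 10.0
print(discriminator_loss(real_logits, fake_logits))  # small: only 1 of 900 patches is wrong
```

A single mistaken patch costs only 1/900 of its error, so the discriminator is rewarded for getting local regions right rather than for one global verdict.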

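```python
import tensorflow as tf

# Build ResNet152V2 (ImageNet weights are downloaded by default) and print its layers.
# Note the Add layers: each residual block adds its input back onto its output,
# so the original signal is carried forward through the network.
resnet = tf.keras.applications.ResNet152V2()
resnet.summary()
```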

Model: "resnet152v2"

__________________________________________________________________________________________________

Layer (type) Output Shape Param # Connected to

==================================================================================================

input_2 (InputLayer) [(None, 224, 224, 3) 0

__________________________________________________________________________________________________

conv1_pad (ZeroPadding2D) (None, 230, 230, 3) 0 input_2[0][0]

__________________________________________________________________________________________________

conv1_conv (Conv2D) (None, 112, 112, 64) 9472 conv1_pad[0][0]

__________________________________________________________________________________________________

pool1_pad (ZeroPadding2D) (None, 114, 114, 64) 0 conv1_conv[0][0]

__________________________________________________________________________________________________

pool1_pool (MaxPooling2D) (None, 56, 56, 64) 0 pool1_pad[0][0]

__________________________________________________________________________________________________

conv2_block1_preact_bn (BatchNo (None, 56, 56, 64) 256 pool1_pool[0][0]

__________________________________________________________________________________________________

conv2_block1_preact_relu (Activ (None, 56, 56, 64) 0 conv2_block1_preact_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_1_conv (Conv2D) (None, 56, 56, 64) 4096 conv2_block1_preact_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_1_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_1_relu (Activation (None, 56, 56, 64) 0 conv2_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_2_pad (ZeroPadding (None, 58, 58, 64) 0 conv2_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_2_conv (Conv2D) (None, 56, 56, 64) 36864 conv2_block1_2_pad[0][0]

__________________________________________________________________________________________________

conv2_block1_2_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block1_2_relu (Activation (None, 56, 56, 64) 0 conv2_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv2_block1_0_conv (Conv2D) (None, 56, 56, 256) 16640 conv2_block1_preact_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_3_conv (Conv2D) (None, 56, 56, 256) 16640 conv2_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block1_out (Add) (None, 56, 56, 256) 0 conv2_block1_0_conv[0][0]

conv2_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_preact_bn (BatchNo (None, 56, 56, 256) 1024 conv2_block1_out[0][0]

__________________________________________________________________________________________________

conv2_block2_preact_relu (Activ (None, 56, 56, 256) 0 conv2_block2_preact_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_1_conv (Conv2D) (None, 56, 56, 64) 16384 conv2_block2_preact_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_1_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_1_relu (Activation (None, 56, 56, 64) 0 conv2_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_2_pad (ZeroPadding (None, 58, 58, 64) 0 conv2_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_2_conv (Conv2D) (None, 56, 56, 64) 36864 conv2_block2_2_pad[0][0]

__________________________________________________________________________________________________

conv2_block2_2_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block2_2_relu (Activation (None, 56, 56, 64) 0 conv2_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv2_block2_3_conv (Conv2D) (None, 56, 56, 256) 16640 conv2_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block2_out (Add) (None, 56, 56, 256) 0 conv2_block1_out[0][0]

conv2_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_preact_bn (BatchNo (None, 56, 56, 256) 1024 conv2_block2_out[0][0]

__________________________________________________________________________________________________

conv2_block3_preact_relu (Activ (None, 56, 56, 256) 0 conv2_block3_preact_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_1_conv (Conv2D) (None, 56, 56, 64) 16384 conv2_block3_preact_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_1_bn (BatchNormali (None, 56, 56, 64) 256 conv2_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_1_relu (Activation (None, 56, 56, 64) 0 conv2_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv2_block3_2_pad (ZeroPadding (None, 58, 58, 64) 0 conv2_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_2_conv (Conv2D) (None, 28, 28, 64) 36864 conv2_block3_2_pad[0][0]

__________________________________________________________________________________________________

conv2_block3_2_bn (BatchNormali (None, 28, 28, 64) 256 conv2_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv2_block3_2_relu (Activation (None, 28, 28, 64) 0 conv2_block3_2_bn[0][0]

__________________________________________________________________________________________________

max_pooling2d_3 (MaxPooling2D) (None, 28, 28, 256) 0 conv2_block2_out[0][0]

__________________________________________________________________________________________________

conv2_block3_3_conv (Conv2D) (None, 28, 28, 256) 16640 conv2_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv2_block3_out (Add) (None, 28, 28, 256) 0 max_pooling2d_3[0][0]

conv2_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_preact_bn (BatchNo (None, 28, 28, 256) 1024 conv2_block3_out[0][0]

__________________________________________________________________________________________________

conv3_block1_preact_relu (Activ (None, 28, 28, 256) 0 conv3_block1_preact_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_1_conv (Conv2D) (None, 28, 28, 128) 32768 conv3_block1_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_1_relu (Activation (None, 28, 28, 128) 0 conv3_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_2_pad (ZeroPadding (None, 30, 30, 128) 0 conv3_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_2_conv (Conv2D) (None, 28, 28, 128) 147456 conv3_block1_2_pad[0][0]

__________________________________________________________________________________________________

conv3_block1_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block1_2_relu (Activation (None, 28, 28, 128) 0 conv3_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block1_0_conv (Conv2D) (None, 28, 28, 512) 131584 conv3_block1_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block1_out (Add) (None, 28, 28, 512) 0 conv3_block1_0_conv[0][0]

conv3_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_preact_bn (BatchNo (None, 28, 28, 512) 2048 conv3_block1_out[0][0]

__________________________________________________________________________________________________

conv3_block2_preact_relu (Activ (None, 28, 28, 512) 0 conv3_block2_preact_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_1_conv (Conv2D) (None, 28, 28, 128) 65536 conv3_block2_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_1_relu (Activation (None, 28, 28, 128) 0 conv3_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_2_pad (ZeroPadding (None, 30, 30, 128) 0 conv3_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_2_conv (Conv2D) (None, 28, 28, 128) 147456 conv3_block2_2_pad[0][0]

__________________________________________________________________________________________________

conv3_block2_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block2_2_relu (Activation (None, 28, 28, 128) 0 conv3_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block2_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block2_out (Add) (None, 28, 28, 512) 0 conv3_block1_out[0][0]

conv3_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_preact_bn (BatchNo (None, 28, 28, 512) 2048 conv3_block2_out[0][0]

__________________________________________________________________________________________________

conv3_block3_preact_relu (Activ (None, 28, 28, 512) 0 conv3_block3_preact_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_1_conv (Conv2D) (None, 28, 28, 128) 65536 conv3_block3_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_1_relu (Activation (None, 28, 28, 128) 0 conv3_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_2_pad (ZeroPadding (None, 30, 30, 128) 0 conv3_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_2_conv (Conv2D) (None, 28, 28, 128) 147456 conv3_block3_2_pad[0][0]

__________________________________________________________________________________________________

conv3_block3_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block3_2_relu (Activation (None, 28, 28, 128) 0 conv3_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block3_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block3_out (Add) (None, 28, 28, 512) 0 conv3_block2_out[0][0]

conv3_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_preact_bn (BatchNo (None, 28, 28, 512) 2048 conv3_block3_out[0][0]

__________________________________________________________________________________________________

conv3_block4_preact_relu (Activ (None, 28, 28, 512) 0 conv3_block4_preact_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_1_conv (Conv2D) (None, 28, 28, 128) 65536 conv3_block4_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_1_relu (Activation (None, 28, 28, 128) 0 conv3_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_2_pad (ZeroPadding (None, 30, 30, 128) 0 conv3_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_2_conv (Conv2D) (None, 28, 28, 128) 147456 conv3_block4_2_pad[0][0]

__________________________________________________________________________________________________

conv3_block4_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block4_2_relu (Activation (None, 28, 28, 128) 0 conv3_block4_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block4_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block4_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block4_out (Add) (None, 28, 28, 512) 0 conv3_block3_out[0][0]

conv3_block4_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block5_preact_bn (BatchNo (None, 28, 28, 512) 2048 conv3_block4_out[0][0]

__________________________________________________________________________________________________

conv3_block5_preact_relu (Activ (None, 28, 28, 512) 0 conv3_block5_preact_bn[0][0]

__________________________________________________________________________________________________

conv3_block5_1_conv (Conv2D) (None, 28, 28, 128) 65536 conv3_block5_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block5_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block5_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block5_1_relu (Activation (None, 28, 28, 128) 0 conv3_block5_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block5_2_pad (ZeroPadding (None, 30, 30, 128) 0 conv3_block5_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block5_2_conv (Conv2D) (None, 28, 28, 128) 147456 conv3_block5_2_pad[0][0]

__________________________________________________________________________________________________

conv3_block5_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block5_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block5_2_relu (Activation (None, 28, 28, 128) 0 conv3_block5_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block5_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block5_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block5_out (Add) (None, 28, 28, 512) 0 conv3_block4_out[0][0]

conv3_block5_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block6_preact_bn (BatchNo (None, 28, 28, 512) 2048 conv3_block5_out[0][0]

__________________________________________________________________________________________________

conv3_block6_preact_relu (Activ (None, 28, 28, 512) 0 conv3_block6_preact_bn[0][0]

__________________________________________________________________________________________________

conv3_block6_1_conv (Conv2D) (None, 28, 28, 128) 65536 conv3_block6_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block6_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block6_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block6_1_relu (Activation (None, 28, 28, 128) 0 conv3_block6_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block6_2_pad (ZeroPadding (None, 30, 30, 128) 0 conv3_block6_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block6_2_conv (Conv2D) (None, 28, 28, 128) 147456 conv3_block6_2_pad[0][0]

__________________________________________________________________________________________________

conv3_block6_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block6_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block6_2_relu (Activation (None, 28, 28, 128) 0 conv3_block6_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block6_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block6_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block6_out (Add) (None, 28, 28, 512) 0 conv3_block5_out[0][0]

conv3_block6_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block7_preact_bn (BatchNo (None, 28, 28, 512) 2048 conv3_block6_out[0][0]

__________________________________________________________________________________________________

conv3_block7_preact_relu (Activ (None, 28, 28, 512) 0 conv3_block7_preact_bn[0][0]

__________________________________________________________________________________________________

conv3_block7_1_conv (Conv2D) (None, 28, 28, 128) 65536 conv3_block7_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block7_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block7_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block7_1_relu (Activation (None, 28, 28, 128) 0 conv3_block7_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block7_2_pad (ZeroPadding (None, 30, 30, 128) 0 conv3_block7_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block7_2_conv (Conv2D) (None, 28, 28, 128) 147456 conv3_block7_2_pad[0][0]

__________________________________________________________________________________________________

conv3_block7_2_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block7_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block7_2_relu (Activation (None, 28, 28, 128) 0 conv3_block7_2_bn[0][0]

__________________________________________________________________________________________________

conv3_block7_3_conv (Conv2D) (None, 28, 28, 512) 66048 conv3_block7_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block7_out (Add) (None, 28, 28, 512) 0 conv3_block6_out[0][0]

conv3_block7_3_conv[0][0]

__________________________________________________________________________________________________

conv3_block8_preact_bn (BatchNo (None, 28, 28, 512) 2048 conv3_block7_out[0][0]

__________________________________________________________________________________________________

conv3_block8_preact_relu (Activ (None, 28, 28, 512) 0 conv3_block8_preact_bn[0][0]

__________________________________________________________________________________________________

conv3_block8_1_conv (Conv2D) (None, 28, 28, 128) 65536 conv3_block8_preact_relu[0][0]

__________________________________________________________________________________________________

conv3_block8_1_bn (BatchNormali (None, 28, 28, 128) 512 conv3_block8_1_conv[0][0]

__________________________________________________________________________________________________

conv3_block8_1_relu (Activation (None, 28, 28, 128) 0 conv3_block8_1_bn[0][0]

__________________________________________________________________________________________________

conv3_block8_2_pad (ZeroPadding (None, 30, 30, 128) 0 conv3_block8_1_relu[0][0]

__________________________________________________________________________________________________

conv3_block8_2_conv (Conv2D) (None, 14, 14, 128) 147456 conv3_block8_2_pad[0][0]

__________________________________________________________________________________________________

conv3_block8_2_bn (BatchNormali (None, 14, 14, 128) 512 conv3_block8_2_conv[0][0]

__________________________________________________________________________________________________

conv3_block8_2_relu (Activation (None, 14, 14, 128) 0 conv3_block8_2_bn[0][0]

__________________________________________________________________________________________________

max_pooling2d_4 (MaxPooling2D) (None, 14, 14, 512) 0 conv3_block7_out[0][0]

__________________________________________________________________________________________________

conv3_block8_3_conv (Conv2D) (None, 14, 14, 512) 66048 conv3_block8_2_relu[0][0]

__________________________________________________________________________________________________

conv3_block8_out (Add) (None, 14, 14, 512) 0 max_pooling2d_4[0][0]

conv3_block8_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_preact_bn (BatchNo (None, 14, 14, 512) 2048 conv3_block8_out[0][0]

__________________________________________________________________________________________________

conv4_block1_preact_relu (Activ (None, 14, 14, 512) 0 conv4_block1_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_1_conv (Conv2D) (None, 14, 14, 256) 131072 conv4_block1_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block1_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_1_relu (Activation (None, 14, 14, 256) 0 conv4_block1_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block1_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block1_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block1_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block1_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block1_2_relu (Activation (None, 14, 14, 256) 0 conv4_block1_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block1_0_conv (Conv2D) (None, 14, 14, 1024) 525312 conv4_block1_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block1_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block1_out (Add) (None, 14, 14, 1024) 0 conv4_block1_0_conv[0][0]

conv4_block1_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_preact_bn (BatchNo (None, 14, 14, 1024) 4096 conv4_block1_out[0][0]

__________________________________________________________________________________________________

conv4_block2_preact_relu (Activ (None, 14, 14, 1024) 0 conv4_block2_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block2_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block2_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_1_relu (Activation (None, 14, 14, 256) 0 conv4_block2_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block2_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block2_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block2_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block2_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block2_2_relu (Activation (None, 14, 14, 256) 0 conv4_block2_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block2_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block2_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block2_out (Add) (None, 14, 14, 1024) 0 conv4_block1_out[0][0]

conv4_block2_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_preact_bn (BatchNo (None, 14, 14, 1024) 4096 conv4_block2_out[0][0]

__________________________________________________________________________________________________

conv4_block3_preact_relu (Activ (None, 14, 14, 1024) 0 conv4_block3_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block3_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block3_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_1_relu (Activation (None, 14, 14, 256) 0 conv4_block3_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block3_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block3_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block3_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block3_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block3_2_relu (Activation (None, 14, 14, 256) 0 conv4_block3_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block3_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block3_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block3_out (Add) (None, 14, 14, 1024) 0 conv4_block2_out[0][0]

conv4_block3_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_preact_bn (BatchNo (None, 14, 14, 1024) 4096 conv4_block3_out[0][0]

__________________________________________________________________________________________________

conv4_block4_preact_relu (Activ (None, 14, 14, 1024) 0 conv4_block4_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block4_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block4_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_1_relu (Activation (None, 14, 14, 256) 0 conv4_block4_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block4_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block4_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block4_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block4_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block4_2_relu (Activation (None, 14, 14, 256) 0 conv4_block4_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block4_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block4_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block4_out (Add) (None, 14, 14, 1024) 0 conv4_block3_out[0][0], conv4_block4_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_preact_bn (BatchNo (None, 14, 14, 1024) 4096 conv4_block4_out[0][0]

__________________________________________________________________________________________________

conv4_block5_preact_relu (Activ (None, 14, 14, 1024) 0 conv4_block5_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block5_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block5_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_1_relu (Activation (None, 14, 14, 256) 0 conv4_block5_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block5_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block5_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block5_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block5_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block5_2_relu (Activation (None, 14, 14, 256) 0 conv4_block5_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block5_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block5_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block5_out (Add) (None, 14, 14, 1024) 0 conv4_block4_out[0][0], conv4_block5_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_preact_bn (BatchNo (None, 14, 14, 1024) 4096 conv4_block5_out[0][0]

__________________________________________________________________________________________________

conv4_block6_preact_relu (Activ (None, 14, 14, 1024) 0 conv4_block6_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block6_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block6_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block6_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_1_relu (Activation (None, 14, 14, 256) 0 conv4_block6_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block6_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block6_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block6_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block6_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block6_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block6_2_relu (Activation (None, 14, 14, 256) 0 conv4_block6_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block6_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block6_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block6_out (Add) (None, 14, 14, 1024) 0 conv4_block5_out[0][0], conv4_block6_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block7_preact_bn (BatchNo (None, 14, 14, 1024) 4096 conv4_block6_out[0][0]

__________________________________________________________________________________________________

conv4_block7_preact_relu (Activ (None, 14, 14, 1024) 0 conv4_block7_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block7_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block7_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block7_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block7_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block7_1_relu (Activation (None, 14, 14, 256) 0 conv4_block7_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block7_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block7_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block7_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block7_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block7_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block7_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block7_2_relu (Activation (None, 14, 14, 256) 0 conv4_block7_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block7_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block7_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block7_out (Add) (None, 14, 14, 1024) 0 conv4_block6_out[0][0], conv4_block7_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block8_preact_bn (BatchNo (None, 14, 14, 1024) 4096 conv4_block7_out[0][0]

__________________________________________________________________________________________________

conv4_block8_preact_relu (Activ (None, 14, 14, 1024) 0 conv4_block8_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block8_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block8_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block8_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block8_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block8_1_relu (Activation (None, 14, 14, 256) 0 conv4_block8_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block8_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block8_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block8_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block8_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block8_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block8_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block8_2_relu (Activation (None, 14, 14, 256) 0 conv4_block8_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block8_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block8_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block8_out (Add) (None, 14, 14, 1024) 0 conv4_block7_out[0][0], conv4_block8_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block9_preact_bn (BatchNo (None, 14, 14, 1024) 4096 conv4_block8_out[0][0]

__________________________________________________________________________________________________

conv4_block9_preact_relu (Activ (None, 14, 14, 1024) 0 conv4_block9_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block9_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block9_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block9_1_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block9_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block9_1_relu (Activation (None, 14, 14, 256) 0 conv4_block9_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block9_2_pad (ZeroPadding (None, 16, 16, 256) 0 conv4_block9_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block9_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block9_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block9_2_bn (BatchNormali (None, 14, 14, 256) 1024 conv4_block9_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block9_2_relu (Activation (None, 14, 14, 256) 0 conv4_block9_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block9_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block9_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block9_out (Add) (None, 14, 14, 1024) 0 conv4_block8_out[0][0], conv4_block9_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block10_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block9_out[0][0]

__________________________________________________________________________________________________

conv4_block10_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block10_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block10_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block10_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block10_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block10_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block10_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block10_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block10_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block10_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block10_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block10_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block10_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block10_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block10_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block10_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block10_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block10_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block10_out (Add) (None, 14, 14, 1024) 0 conv4_block9_out[0][0], conv4_block10_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block11_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block10_out[0][0]

__________________________________________________________________________________________________

conv4_block11_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block11_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block11_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block11_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block11_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block11_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block11_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block11_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block11_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block11_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block11_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block11_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block11_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block11_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block11_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block11_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block11_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block11_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block11_out (Add) (None, 14, 14, 1024) 0 conv4_block10_out[0][0], conv4_block11_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block12_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block11_out[0][0]

__________________________________________________________________________________________________

conv4_block12_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block12_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block12_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block12_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block12_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block12_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block12_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block12_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block12_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block12_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block12_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block12_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block12_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block12_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block12_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block12_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block12_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block12_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block12_out (Add) (None, 14, 14, 1024) 0 conv4_block11_out[0][0], conv4_block12_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block13_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block12_out[0][0]

__________________________________________________________________________________________________

conv4_block13_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block13_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block13_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block13_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block13_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block13_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block13_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block13_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block13_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block13_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block13_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block13_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block13_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block13_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block13_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block13_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block13_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block13_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block13_out (Add) (None, 14, 14, 1024) 0 conv4_block12_out[0][0], conv4_block13_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block14_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block13_out[0][0]

__________________________________________________________________________________________________

conv4_block14_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block14_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block14_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block14_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block14_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block14_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block14_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block14_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block14_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block14_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block14_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block14_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block14_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block14_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block14_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block14_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block14_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block14_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block14_out (Add) (None, 14, 14, 1024) 0 conv4_block13_out[0][0], conv4_block14_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block15_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block14_out[0][0]

__________________________________________________________________________________________________

conv4_block15_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block15_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block15_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block15_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block15_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block15_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block15_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block15_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block15_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block15_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block15_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block15_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block15_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block15_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block15_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block15_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block15_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block15_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block15_out (Add) (None, 14, 14, 1024) 0 conv4_block14_out[0][0], conv4_block15_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block16_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block15_out[0][0]

__________________________________________________________________________________________________

conv4_block16_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block16_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block16_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block16_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block16_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block16_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block16_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block16_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block16_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block16_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block16_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block16_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block16_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block16_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block16_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block16_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block16_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block16_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block16_out (Add) (None, 14, 14, 1024) 0 conv4_block15_out[0][0], conv4_block16_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block17_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block16_out[0][0]

__________________________________________________________________________________________________

conv4_block17_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block17_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block17_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block17_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block17_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block17_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block17_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block17_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block17_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block17_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block17_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block17_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block17_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block17_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block17_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block17_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block17_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block17_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block17_out (Add) (None, 14, 14, 1024) 0 conv4_block16_out[0][0], conv4_block17_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block18_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block17_out[0][0]

__________________________________________________________________________________________________

conv4_block18_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block18_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block18_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block18_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block18_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block18_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block18_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block18_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block18_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block18_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block18_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block18_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block18_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block18_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block18_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block18_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block18_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block18_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block18_out (Add) (None, 14, 14, 1024) 0 conv4_block17_out[0][0], conv4_block18_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block19_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block18_out[0][0]

__________________________________________________________________________________________________

conv4_block19_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block19_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block19_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block19_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block19_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block19_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block19_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block19_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block19_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block19_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block19_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block19_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block19_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block19_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block19_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block19_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block19_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block19_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block19_out (Add) (None, 14, 14, 1024) 0 conv4_block18_out[0][0], conv4_block19_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block20_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block19_out[0][0]

__________________________________________________________________________________________________

conv4_block20_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block20_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block20_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block20_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block20_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block20_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block20_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block20_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block20_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block20_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block20_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block20_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block20_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block20_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block20_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block20_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block20_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block20_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block20_out (Add) (None, 14, 14, 1024) 0 conv4_block19_out[0][0], conv4_block20_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block21_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block20_out[0][0]

__________________________________________________________________________________________________

conv4_block21_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block21_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block21_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block21_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block21_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block21_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block21_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block21_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block21_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block21_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block21_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block21_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block21_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block21_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block21_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block21_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block21_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block21_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block21_out (Add) (None, 14, 14, 1024) 0 conv4_block20_out[0][0], conv4_block21_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block22_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block21_out[0][0]

__________________________________________________________________________________________________

conv4_block22_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block22_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block22_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block22_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block22_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block22_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block22_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block22_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block22_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block22_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block22_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block22_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block22_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block22_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block22_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block22_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block22_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block22_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block22_out (Add) (None, 14, 14, 1024) 0 conv4_block21_out[0][0], conv4_block22_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block23_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block22_out[0][0]

__________________________________________________________________________________________________

conv4_block23_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block23_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block23_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block23_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block23_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block23_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block23_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block23_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block23_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block23_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block23_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block23_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block23_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block23_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block23_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block23_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block23_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block23_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block23_out (Add) (None, 14, 14, 1024) 0 conv4_block22_out[0][0], conv4_block23_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block24_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block23_out[0][0]

__________________________________________________________________________________________________

conv4_block24_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block24_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block24_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block24_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block24_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block24_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block24_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block24_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block24_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block24_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block24_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block24_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block24_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block24_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block24_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block24_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block24_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block24_2_relu[0][0]

__________________________________________________________________________________________________

conv4_block24_out (Add) (None, 14, 14, 1024) 0 conv4_block23_out[0][0], conv4_block24_3_conv[0][0]

__________________________________________________________________________________________________

conv4_block25_preact_bn (BatchN (None, 14, 14, 1024) 4096 conv4_block24_out[0][0]

__________________________________________________________________________________________________

conv4_block25_preact_relu (Acti (None, 14, 14, 1024) 0 conv4_block25_preact_bn[0][0]

__________________________________________________________________________________________________

conv4_block25_1_conv (Conv2D) (None, 14, 14, 256) 262144 conv4_block25_preact_relu[0][0]

__________________________________________________________________________________________________

conv4_block25_1_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block25_1_conv[0][0]

__________________________________________________________________________________________________

conv4_block25_1_relu (Activatio (None, 14, 14, 256) 0 conv4_block25_1_bn[0][0]

__________________________________________________________________________________________________

conv4_block25_2_pad (ZeroPaddin (None, 16, 16, 256) 0 conv4_block25_1_relu[0][0]

__________________________________________________________________________________________________

conv4_block25_2_conv (Conv2D) (None, 14, 14, 256) 589824 conv4_block25_2_pad[0][0]

__________________________________________________________________________________________________

conv4_block25_2_bn (BatchNormal (None, 14, 14, 256) 1024 conv4_block25_2_conv[0][0]

__________________________________________________________________________________________________

conv4_block25_2_relu (Activatio (None, 14, 14, 256) 0 conv4_block25_2_bn[0][0]

__________________________________________________________________________________________________

conv4_block25_3_conv (Conv2D) (None, 14, 14, 1024) 263168 conv4_block25_2_relu[0][0]

__________________________________________________________________________________________________