# Fashion MNIST with a CNN

import tensorflow as tf

fashion_mnist = tf.keras.datasets.fashion_mnist

(train_x, train_y), (test_x, test_y) = fashion_mnist.load_data()

train_x = train_x / 255.0

test_x = test_x / 255.0

print(train_x.shape, test_x.shape) # 2-D grayscale images, no channel axis yet

train_x = train_x.reshape(-1, 28, 28, 1) # add a channel axis: (28, 28) -> (28, 28, 1)

test_x = test_x.reshape(-1, 28, 28, 1)

print(train_x.shape, test_x.shape)

# (60000, 28, 28) (10000, 28, 28)

# (60000, 28, 28, 1) (10000, 28, 28, 1)

# Inspect the data as images

import matplotlib.pyplot as plt

plt.figure(figsize=(10, 10))

for c in range(16):

    plt.subplot(4, 4, c + 1)

    plt.imshow(train_x[c].reshape(28, 28), cmap='gray')

plt.show()

print(train_y[:16])

# [9 0 0 3 0 2 7 2 5 5 0 9 5 5 7 9]

# Define the CNN model

# kernel_size = size of the weight filter (3 rows x 3 columns)

model = tf.keras.Sequential([

tf.keras.layers.Conv2D(input_shape=(28,28,1), kernel_size=(3,3), filters=16), # three convolution layers (no activation is set, so each one is linear)

tf.keras.layers.Conv2D(kernel_size=(3,3), filters=32),

tf.keras.layers.Conv2D(kernel_size=(3,3), filters=64),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(units=128, activation='relu'),

tf.keras.layers.Dense(units=10, activation='softmax')

])

model.compile(optimizer=tf.keras.optimizers.Adam(),

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])
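The `sparse_categorical_crossentropy` loss used here takes integer labels (0 to 9) instead of one-hot vectors. For one sample it is the negative log of the probability that the softmax output assigns to the true class. Below is a minimal pure-Python sketch of that per-sample value; the function name and the example probabilities are made up for illustration, and Keras additionally averages over the batch and clips probabilities for numerical stability.

```python
import math

def sparse_categorical_crossentropy(probs, label):
    """Per-sample loss: -log(probability assigned to the true class)."""
    return -math.log(probs[label])

# Hypothetical softmax output over 3 classes (sums to 1)
probs = [0.1, 0.7, 0.2]

loss_correct = sparse_categorical_crossentropy(probs, 1)  # true class got 0.7: small loss
loss_wrong = sparse_categorical_crossentropy(probs, 0)    # true class got 0.1: large loss
print(loss_correct, loss_wrong)
```

The label is used directly as an index, which is why `train_y` can stay as plain integers.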

model.summary()

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_10 (Conv2D) (None, 26, 26, 16) 160

_________________________________________________________________

conv2d_11 (Conv2D) (None, 24, 24, 32) 4640

_________________________________________________________________

conv2d_12 (Conv2D) (None, 22, 22, 64) 18496

_________________________________________________________________

flatten_1 (Flatten) (None, 30976) 0

_________________________________________________________________

dense (Dense) (None, 128) 3965056

_________________________________________________________________

dense_1 (Dense) (None, 10) 1290

=================================================================

Total params: 3,989,642

Trainable params: 3,989,642

Non-trainable params: 0

_________________________________________________________________
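The output shapes and parameter counts in this summary can be reproduced by hand. The sketch below assumes what the model definition implies: 3x3 kernels, stride 1, no padding, and one bias per filter or unit. The helper names are illustrative and not part of Keras.

```python
def conv_out(size, kernel=3):
    """Output width/height of an unpadded, stride-1 convolution."""
    return size - kernel + 1

def conv_params(in_ch, out_ch, kernel=3):
    """kernel*kernel*in_ch weights per filter, plus one bias per filter."""
    return (kernel * kernel * in_ch + 1) * out_ch

def dense_params(n_in, n_out):
    """n_in weights per unit, plus one bias per unit."""
    return (n_in + 1) * n_out

size, channels, total = 28, 1, 0
for filters in (16, 32, 64):
    total += conv_params(channels, filters)
    size, channels = conv_out(size), filters

flat = size * size * channels  # 22 * 22 * 64
total += dense_params(flat, 128) + dense_params(128, 10)
print(size, flat, total)  # 22 30976 3989642
```

Almost all of the parameters (3,965,056 of 3,989,642) sit in the first Dense layer, because the unpooled 22x22x64 feature map is flattened into 30,976 inputs.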

history=model.fit(train_x, train_y, epochs=10, validation_split=0.25)

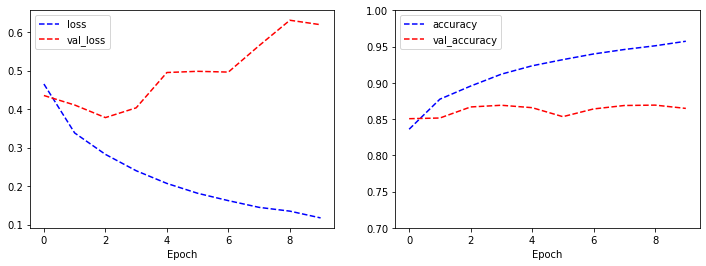

Epoch 1/10

1407/1407 [==============================] - 161s 114ms/step - loss: 0.4653 - accuracy: 0.8360 - val_loss: 0.4354 - val_accuracy: 0.8505

Epoch 2/10

1407/1407 [==============================] - 161s 114ms/step - loss: 0.3381 - accuracy: 0.8775 - val_loss: 0.4108 - val_accuracy: 0.8515

Epoch 3/10

1407/1407 [==============================] - 159s 113ms/step - loss: 0.2825 - accuracy: 0.8956 - val_loss: 0.3780 - val_accuracy: 0.8667

Epoch 4/10

1407/1407 [==============================] - 158s 112ms/step - loss: 0.2400 - accuracy: 0.9120 - val_loss: 0.4034 - val_accuracy: 0.8691

Epoch 5/10

1407/1407 [==============================] - 158s 112ms/step - loss: 0.2070 - accuracy: 0.9235 - val_loss: 0.4951 - val_accuracy: 0.8657

Epoch 6/10

1407/1407 [==============================] - 158s 112ms/step - loss: 0.1814 - accuracy: 0.9320 - val_loss: 0.4982 - val_accuracy: 0.8533

Epoch 7/10

1407/1407 [==============================] - 158s 112ms/step - loss: 0.1623 - accuracy: 0.9398 - val_loss: 0.4963 - val_accuracy: 0.8640

Epoch 8/10

1407/1407 [==============================] - 158s 112ms/step - loss: 0.1448 - accuracy: 0.9460 - val_loss: 0.5650 - val_accuracy: 0.8687

Epoch 9/10

1407/1407 [==============================] - 157s 112ms/step - loss: 0.1350 - accuracy: 0.9511 - val_loss: 0.6309 - val_accuracy: 0.8693

Epoch 10/10

1407/1407 [==============================] - 157s 112ms/step - loss: 0.1174 - accuracy: 0.9574 - val_loss: 0.6193 - val_accuracy: 0.8647
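The `1407` at the start of each progress line is the number of batches per epoch. A short sketch of where it comes from, assuming the Keras default batch size of 32 (no `batch_size` is passed to `fit`) and the 60,000-image training set loaded earlier:

```python
import math

n_train_total = 60000    # Fashion MNIST training images
validation_split = 0.25  # as passed to model.fit
batch_size = 32          # Keras default when batch_size is not given

n_val = int(n_train_total * validation_split)  # held out for validation
n_fit = n_train_total - n_val                  # actually used for training
steps_per_epoch = math.ceil(n_fit / batch_size)
print(n_fit, n_val, steps_per_epoch)  # 45000 15000 1407
```

So each epoch trains on 45,000 images and validates on the remaining 15,000.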

import matplotlib.pyplot as plt

plt.figure(figsize=(12,4))

plt.subplot(1,2,1)

plt.plot(history.history['loss'], 'b--', label='loss')

plt.plot(history.history['val_loss'], 'r--', label='val_loss')

plt.xlabel('Epoch')

plt.legend()

plt.subplot(1,2,2)

plt.plot(history.history['accuracy'], 'b--', label='accuracy')

plt.plot(history.history['val_accuracy'], 'r--', label='val_accuracy')

plt.xlabel('Epoch')

plt.ylim(0.7, 1)

plt.legend()

plt.show()
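In the training log for this model, the training loss keeps falling while the validation loss bottoms out early and then climbs, which is the usual sign of overfitting. The sketch below copies the numbers from that log to locate the best epoch; in a live session the same lists would come from `history.history['loss']` and `history.history['val_loss']`.

```python
# Values copied from the 10-epoch training log of the first model
loss = [0.4653, 0.3381, 0.2825, 0.2400, 0.2070,
        0.1814, 0.1623, 0.1448, 0.1350, 0.1174]
val_loss = [0.4354, 0.4108, 0.3780, 0.4034, 0.4951,
            0.4982, 0.4963, 0.5650, 0.6309, 0.6193]

# Epoch (1-based) with the lowest validation loss
best_epoch = val_loss.index(min(val_loss)) + 1

# Gap between validation and training loss at the last epoch
final_gap = val_loss[-1] - loss[-1]
print(best_epoch, round(final_gap, 4))  # 3 0.5019
```

Training past epoch 3 mostly improves the fit to the training set, not to unseen data.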

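# Ways to improve CNN performance

# 1. Use a VGGNet-style architecture: a deep network of stacked 3x3 convolutions (the published VGG configurations have up to 19 weight layers)

# 2. Augment the images: transform one image into several variants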
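As a rough illustration of the second point, the sketch below derives extra training samples from one image with a horizontal flip and a one-pixel shift. It uses plain nested lists and a tiny 3x3 stand-in image so the effect is easy to read; the helper names are hypothetical, and in practice Keras utilities such as `ImageDataGenerator` would normally handle this.

```python
def flip_horizontal(img):
    """Mirror each row left to right."""
    return [row[::-1] for row in img]

def shift_right(img, k=1, fill=0):
    """Move pixels k columns to the right, padding the left edge with `fill`."""
    return [[fill] * k + row[:len(row) - k] for row in img]

# Tiny 3x3 stand-in for a 28x28 image
img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]

# One original image becomes three training samples with the same label
augmented = [img, flip_horizontal(img), shift_right(img)]
print(len(augmented))  # 3
```

Each variant keeps the original label, so the model sees more varied examples of the same class.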

# Define the CNN model

# Add pooling and dropout layers

model = tf.keras.Sequential([

tf.keras.layers.Conv2D(input_shape=(28,28,1), kernel_size=(3,3), filters=32), # three convolution layers in total

tf.keras.layers.MaxPool2D(strides=(2,2)), # halves the feature map size

tf.keras.layers.Conv2D(kernel_size=(3,3), filters=64), # no padding, so width and height each shrink by 2

tf.keras.layers.MaxPool2D(strides=(2,2)), # splits the feature map into 2x2 windows and keeps only the maximum of each

tf.keras.layers.Conv2D(kernel_size=(3,3), filters=128), # 5x5 -> 3x3

tf.keras.layers.Flatten(), # 3 * 3 * 128 = 1152 values

tf.keras.layers.Dense(units=128, activation='relu'),

tf.keras.layers.Dropout(rate=0.3), # randomly drops 30% of the units during training

tf.keras.layers.Dense(units=10, activation='softmax') # output layer

])

model.compile(optimizer=tf.keras.optimizers.Adam(),

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.summary()
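The `Dropout(rate=0.3)` layer zeroes a random 30% of its inputs during training and scales the survivors by 1 / (1 - rate), so the expected activation stays the same; at inference time it passes values through unchanged. Here is a pure-Python sketch of that training-time behaviour, with a hypothetical helper and a fixed seed so the run is repeatable:

```python
import random

def dropout(values, rate, rng):
    """Inverted dropout: zero each value with probability `rate` and
    scale the survivors by 1 / (1 - rate)."""
    keep = 1.0 - rate
    return [v / keep if rng.random() >= rate else 0.0 for v in values]

rng = random.Random(0)       # fixed seed for repeatability
activations = [1.0] * 10000  # hypothetical layer output
dropped = dropout(activations, rate=0.3, rng=rng)

zero_fraction = dropped.count(0.0) / len(dropped)  # close to 0.3
mean_after = sum(dropped) / len(dropped)           # close to the original mean of 1.0
print(round(zero_fraction, 2), round(mean_after, 2))
```

Because a different subset of units is dropped on every batch, the network cannot rely on any single unit, which tends to reduce overfitting.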

Model: "sequential_1"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_3 (Conv2D) (None, 26, 26, 32) 320

max_pooling2d (MaxPooling2D) (None, 13, 13, 32) 0

conv2d_4 (Conv2D) (None, 11, 11, 64) 18496

max_pooling2d_1 (MaxPooling2D) (None, 5, 5, 64) 0

conv2d_5 (Conv2D) (None, 3, 3, 128) 73856

flatten_1 (Flatten) (None, 1152) 0

dense_2 (Dense) (None, 128) 147584

dropout (Dropout) (None, 128) 0

dense_3 (Dense) (None, 10) 1290

=================================================================

Total params: 241,546

Trainable params: 241,546

Non-trainable params: 0

_________________________________________________________________
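The pooling layers explain most of the drop from about 4 million parameters to 241,546. The sketch below shows 2x2 max pooling on a small made-up feature map, then traces the spatial size through this model. It assumes the defaults used above: a 2x2 pooling window with stride 2 and unpadded 3x3 convolutions.

```python
def max_pool_2x2(fmap):
    """2x2 max pooling with stride 2; a trailing odd row or column is dropped."""
    rows, cols = len(fmap) // 2, len(fmap[0]) // 2
    return [[max(fmap[2 * r][2 * c], fmap[2 * r][2 * c + 1],
                 fmap[2 * r + 1][2 * c], fmap[2 * r + 1][2 * c + 1])
             for c in range(cols)]
            for r in range(rows)]

# Made-up 4x4 feature map
fmap = [[1, 3, 2, 0],
        [4, 2, 1, 5],
        [0, 1, 7, 2],
        [6, 2, 3, 4]]
pooled = max_pool_2x2(fmap)
print(pooled)  # [[4, 5], [6, 7]]

# Spatial size through the model: conv (-2), pool (//2), conv, pool, conv
size = 28
for op in ("conv", "pool", "conv", "pool", "conv"):
    size = size - 2 if op == "conv" else size // 2
print(size, size * size * 128)  # 3 1152
```

The flattened vector has 1,152 values instead of 30,976, so the first Dense layer needs 147,584 parameters rather than 3,965,056.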

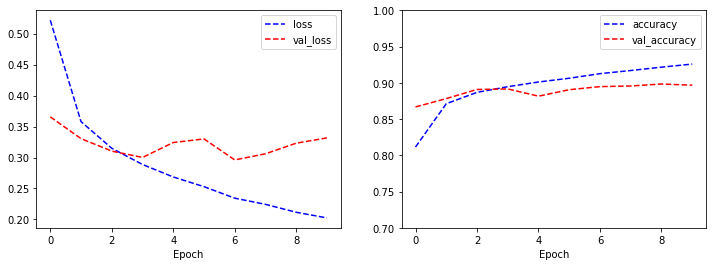

history=model.fit(train_x, train_y, epochs=10, validation_split=0.25)

Epoch 1/10

1407/1407 [==============================] - 48s 34ms/step - loss: 0.5216 - accuracy: 0.8115 - val_loss: 0.3656 - val_accuracy: 0.8667

Epoch 2/10

1407/1407 [==============================] - 48s 34ms/step - loss: 0.3579 - accuracy: 0.8712 - val_loss: 0.3305 - val_accuracy: 0.8783

Epoch 3/10

1407/1407 [==============================] - 48s 34ms/step - loss: 0.3150 - accuracy: 0.8869 - val_loss: 0.3104 - val_accuracy: 0.8909

Epoch 4/10

1407/1407 [==============================] - 47s 34ms/step - loss: 0.2887 - accuracy: 0.8947 - val_loss: 0.3002 - val_accuracy: 0.8916

Epoch 5/10

1407/1407 [==============================] - 47s 34ms/step - loss: 0.2686 - accuracy: 0.9012 - val_loss: 0.3242 - val_accuracy: 0.8816

Epoch 6/10

1407/1407 [==============================] - 47s 34ms/step - loss: 0.2530 - accuracy: 0.9064 - val_loss: 0.3300 - val_accuracy: 0.8905

Epoch 7/10

1407/1407 [==============================] - 47s 33ms/step - loss: 0.2344 - accuracy: 0.9127 - val_loss: 0.2962 - val_accuracy: 0.8948

Epoch 8/10

1407/1407 [==============================] - 47s 33ms/step - loss: 0.2244 - accuracy: 0.9170 - val_loss: 0.3060 - val_accuracy: 0.8957

Epoch 9/10

1407/1407 [==============================] - 47s 33ms/step - loss: 0.2116 - accuracy: 0.9216 - val_loss: 0.3231 - val_accuracy: 0.8984

Epoch 10/10

1407/1407 [==============================] - 47s 33ms/step - loss: 0.2025 - accuracy: 0.9259 - val_loss: 0.3317 - val_accuracy: 0.8967

import matplotlib.pyplot as plt

plt.figure(figsize=(12,4))

plt.subplot(1,2,1)

plt.plot(history.history['loss'], 'b--', label='loss')

plt.plot(history.history['val_loss'], 'r--', label='val_loss')

plt.xlabel('Epoch')

plt.legend()

plt.subplot(1,2,2)

plt.plot(history.history['accuracy'], 'b--', label='accuracy')

plt.plot(history.history['val_accuracy'], 'r--', label='val_accuracy')

plt.xlabel('Epoch')

plt.ylim(0.7, 1)

plt.legend()

plt.show()

model.evaluate(test_x, test_y, verbose=0)

# [test loss, test accuracy] = [0.34508538246154785, 0.89410001039505]

model = tf.keras.Sequential([

tf.keras.layers.Conv2D(input_shape=(28,28,1), kernel_size=(3,3), filters=32,

padding='same', activation='relu'), # padding='same' keeps the 28x28 size

tf.keras.layers.Conv2D(kernel_size=(3,3), filters=64, padding='same', activation='relu'),

tf.keras.layers.MaxPool2D(pool_size=(2,2)),

tf.keras.layers.Dropout(rate=0.5),

tf.keras.layers.Conv2D(kernel_size=(3,3), filters=128, padding='same', activation='relu'),

tf.keras.layers.Conv2D(kernel_size=(3,3), filters=256, padding='valid', activation='relu'),

tf.keras.layers.MaxPool2D(strides=(2,2)),

tf.keras.layers.Dropout(rate=0.5),

tf.keras.layers.Flatten(),

tf.keras.layers.Dense(units=512, activation='relu'),

tf.keras.layers.Dropout(rate=0.5), # randomly drops 50% of the units during training

tf.keras.layers.Dense(units=256, activation='relu'),

tf.keras.layers.Dropout(rate=0.5), # randomly drops 50% of the units during training

tf.keras.layers.Dense(units=10, activation='softmax') # output layer

])

model.compile(optimizer=tf.keras.optimizers.Adam(),

loss='sparse_categorical_crossentropy',

metrics=['accuracy'])

model.summary()

Model: "sequential_2"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d_6 (Conv2D) (None, 28, 28, 32) 320

conv2d_7 (Conv2D) (None, 28, 28, 64) 18496

max_pooling2d_2 (MaxPooling2D) (None, 14, 14, 64) 0

dropout_1 (Dropout) (None, 14, 14, 64) 0

conv2d_8 (Conv2D) (None, 14, 14, 128) 73856

conv2d_9 (Conv2D) (None, 12, 12, 256) 295168

max_pooling2d_3 (MaxPooling2D) (None, 6, 6, 256) 0

dropout_2 (Dropout) (None, 6, 6, 256) 0

flatten_2 (Flatten) (None, 9216) 0

dense_4 (Dense) (None, 512) 4719104

dropout_3 (Dropout) (None, 512) 0

dense_5 (Dense) (None, 256) 131328

dropout_4 (Dropout) (None, 256) 0

dense_6 (Dense) (None, 10) 2570

=================================================================

Total params: 5,240,842

Trainable params: 5,240,842

Non-trainable params: 0

_________________________________________________________________
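This model mixes `padding='same'` and `padding='valid'`, which is why some convolutions keep the feature map size and one shrinks it. The sketch below traces the spatial size layer by layer, assuming stride-1 3x3 convolutions and 2x2 pooling with stride 2 as in the definition above; the helper name is illustrative.

```python
def conv_out(size, kernel=3, padding="valid"):
    """Stride-1 convolution: 'same' keeps the size, 'valid' loses kernel - 1."""
    return size if padding == "same" else size - kernel + 1

# Convolution and pooling layers in order (dropout does not change the shape)
layers = [("conv", "same"), ("conv", "same"), ("pool", None),
          ("conv", "same"), ("conv", "valid"), ("pool", None)]

size, trace = 28, [28]
for kind, padding in layers:
    size = conv_out(size, padding=padding) if kind == "conv" else size // 2
    trace.append(size)

flat = size * size * 256  # 256 filters in the last convolution
print(trace, flat)  # [28, 28, 28, 14, 14, 12, 6] 9216
```

The 9,216 flattened values feeding a 512-unit Dense layer account for 4,719,104 of the 5,240,842 parameters.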

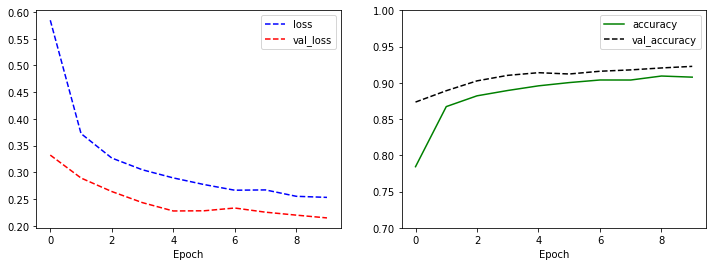

history=model.fit(train_x, train_y, epochs=10, validation_split=0.25)

Epoch 1/10

1407/1407 [==============================] - 543s 386ms/step - loss: 0.5847 - accuracy: 0.7843 - val_loss: 0.3323 - val_accuracy: 0.8735

Epoch 2/10

1407/1407 [==============================] - 540s 384ms/step - loss: 0.3726 - accuracy: 0.8672 - val_loss: 0.2894 - val_accuracy: 0.8891

Epoch 3/10

1407/1407 [==============================] - 540s 384ms/step - loss: 0.3268 - accuracy: 0.8821 - val_loss: 0.2642 - val_accuracy: 0.9027

Epoch 4/10

1407/1407 [==============================] - 542s 386ms/step - loss: 0.3048 - accuracy: 0.8894 - val_loss: 0.2433 - val_accuracy: 0.9103

Epoch 5/10

1407/1407 [==============================] - 541s 385ms/step - loss: 0.2897 - accuracy: 0.8959 - val_loss: 0.2278 - val_accuracy: 0.9140

Epoch 6/10

1407/1407 [==============================] - 543s 386ms/step - loss: 0.2773 - accuracy: 0.9004 - val_loss: 0.2281 - val_accuracy: 0.9122

Epoch 7/10

1407/1407 [==============================] - 543s 386ms/step - loss: 0.2666 - accuracy: 0.9039 - val_loss: 0.2333 - val_accuracy: 0.9160

Epoch 8/10

1407/1407 [==============================] - 548s 389ms/step - loss: 0.2672 - accuracy: 0.9039 - val_loss: 0.2255 - val_accuracy: 0.9179

Epoch 9/10

1407/1407 [==============================] - 553s 393ms/step - loss: 0.2552 - accuracy: 0.9094 - val_loss: 0.2200 - val_accuracy: 0.9205

Epoch 10/10

1407/1407 [==============================] - 554s 394ms/step - loss: 0.2532 - accuracy: 0.9078 - val_loss: 0.2148 - val_accuracy: 0.9227

import matplotlib.pyplot as plt

plt.figure(figsize=(12,4))

plt.subplot(1,2,1)

plt.plot(history.history['loss'], 'b--', label='loss')

plt.plot(history.history['val_loss'], 'r--', label='val_loss')

plt.xlabel('Epoch')

plt.legend()

plt.subplot(1,2,2)

plt.plot(history.history['accuracy'], 'g-', label='accuracy')

plt.plot(history.history['val_accuracy'], 'k--', label='val_accuracy')

plt.xlabel('Epoch')

plt.ylim(0.7, 1)

plt.legend()

plt.show()

model.evaluate(test_x, test_y, verbose=0)

# [test loss, test accuracy] = [0.23406749963760376, 0.9172999858856201]
