
import tensorflow as tf

tf.keras.__version__

# 2.7.0

import numpy as np

from tensorflow.keras.datasets import cifar10

(x_train, y_train), (x_test, y_test) = cifar10.load_data()

print(x_train.shape, y_train.shape)

print(x_test.shape, y_test.shape)

Downloading data from https://www.cs.toronto.edu/~kriz/cifar-10-python.tar.gz

170500096/170498071 [==============================] - 3s 0us/step

170508288/170498071 [==============================] - 3s 0us/step

(50000, 32, 32, 3) (50000, 1)

(10000, 32, 32, 3) (10000, 1)

x_mean = np.mean(x_train, axis = (0, 1, 2))

x_std = np.std(x_train, axis = (0, 1, 2))

x_train = (x_train - x_mean) / x_std

x_test = (x_test - x_mean) / x_std
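The standardization above reduces over the sample, height, and width axes, so it keeps one mean and one standard deviation per color channel. The sketch below illustrates this on a small synthetic array; the `images` array and its size are assumptions for illustration, not CIFAR-10 data.

```python
import numpy as np

# Synthetic stand-in for a batch of RGB images (assumption: 8 images, 32x32x3).
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(8, 32, 32, 3)).astype(np.float32)

# Reducing over axes (0, 1, 2) leaves one statistic per color channel.
channel_mean = images.mean(axis=(0, 1, 2))
channel_std = images.std(axis=(0, 1, 2))

# Broadcasting applies each channel's statistics to every pixel of that channel.
standardized = (images - channel_mean) / channel_std

print(channel_mean.shape)                 # (3,)
print(standardized.mean(axis=(0, 1, 2)))  # each value close to 0
print(standardized.std(axis=(0, 1, 2)))   # each value close to 1
```

Note that the post computes these statistics on the full training array before the validation split, so the validation images also contribute to `x_mean` and `x_std`.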

from sklearn.model_selection import train_test_split

x_train, x_val, y_train, y_val = train_test_split(x_train, y_train, test_size = 0.3, random_state = 777)
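As a quick sanity check on the split, the arithmetic below works out how many images end up in each subset and how many batches one epoch takes. It is a sketch based only on the numbers shown in this post (50,000 training images, `test_size = 0.3`, and the `batch_size=32` used later in `model.fit`); the variable names are illustrative.

```python
import math

n_total = 50000    # training images, per the shapes printed above
test_size = 0.3    # fraction passed to train_test_split
batch_size = 32    # batch size passed to model.fit later in the post

n_val = round(n_total * test_size)   # images held out for validation
n_train = n_total - n_val            # images left for training
steps_per_epoch = math.ceil(n_train / batch_size)

print(n_train, n_val, steps_per_epoch)  # 35000 15000 1094
```

The resulting 1094 steps match the `1094/1094` counter in the training log.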

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Conv2D, MaxPool2D, Dense, Flatten, Dropout

from tensorflow.keras.optimizers import Adam

model = Sequential()

model.add(Conv2D(kernel_size=3, filters=32, padding='same', activation='relu', input_shape=(32,32,3)))

model.add(Conv2D(kernel_size=3, filters=32, padding='same', activation='relu'))

model.add(MaxPool2D(pool_size=(2, 2), strides=2, padding = 'same'))

model.add(Dropout(rate=0.2))

model.add(Conv2D(kernel_size=3, filters=64, padding='same', activation='relu'))

model.add(Conv2D(kernel_size=3, filters=64, padding='same', activation='relu'))

model.add(MaxPool2D(pool_size=(2, 2), strides=2, padding = 'same'))

model.add(Dropout(rate=0.2))

model.add(Conv2D(kernel_size=3, filters=128, padding='same', activation='relu'))

model.add(Conv2D(kernel_size=3, filters=128, padding='same', activation='relu'))

model.add(MaxPool2D(pool_size=(2, 2), strides=2, padding = 'same'))

model.add(Dropout(rate=0.2))

model.add(Flatten())

model.add(Dense(256, activation='relu'))

model.add(Dense(10, activation='softmax'))

# 1e-4 = 0.0001 (learning rate)

model.compile(optimizer=Adam(1e-4), loss = 'sparse_categorical_crossentropy', metrics = ['accuracy'])

model.summary()

Model: "sequential"

_________________________________________________________________

Layer (type) Output Shape Param #

=================================================================

conv2d (Conv2D) (None, 32, 32, 32) 896

conv2d_1 (Conv2D) (None, 32, 32, 32) 9248

max_pooling2d (MaxPooling2D) (None, 16, 16, 32) 0

dropout (Dropout) (None, 16, 16, 32) 0

conv2d_2 (Conv2D) (None, 16, 16, 64) 18496

conv2d_3 (Conv2D) (None, 16, 16, 64) 36928

max_pooling2d_1 (MaxPooling2D) (None, 8, 8, 64) 0

dropout_1 (Dropout) (None, 8, 8, 64) 0

conv2d_4 (Conv2D) (None, 8, 8, 128) 73856

conv2d_5 (Conv2D) (None, 8, 8, 128) 147584

max_pooling2d_2 (MaxPooling2D) (None, 4, 4, 128) 0

dropout_2 (Dropout) (None, 4, 4, 128) 0

flatten (Flatten) (None, 2048) 0

dense (Dense) (None, 256) 524544

dense_1 (Dense) (None, 10) 2570

=================================================================

Total params: 814,122

Trainable params: 814,122

Non-trainable params: 0

_________________________________________________________________
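The parameter counts in the summary can be reproduced by hand. A `Conv2D` layer has `kernel * kernel * in_channels * filters` weights plus one bias per filter, and a `Dense` layer has `in_units * out_units` weights plus one bias per output unit. The helper functions below are hypothetical names written for this check, not part of Keras.

```python
def conv2d_params(kernel_size, in_channels, filters):
    """Weights plus biases of a square-kernel Conv2D layer."""
    return kernel_size * kernel_size * in_channels * filters + filters


def dense_params(in_units, out_units):
    """Weights plus biases of a Dense layer."""
    return in_units * out_units + out_units


layer_params = {
    "conv2d": conv2d_params(3, 3, 32),
    "conv2d_1": conv2d_params(3, 32, 32),
    "conv2d_2": conv2d_params(3, 32, 64),
    "conv2d_3": conv2d_params(3, 64, 64),
    "conv2d_4": conv2d_params(3, 64, 128),
    "conv2d_5": conv2d_params(3, 128, 128),
    "dense": dense_params(4 * 4 * 128, 256),  # Flatten gives 4*4*128 = 2048 inputs
    "dense_1": dense_params(256, 10),
}
total_params = sum(layer_params.values())

for name, count in layer_params.items():
    print(f"{name:10s} {count}")
print("total", total_params)  # total 814122
```

Pooling, dropout, and flatten layers have no weights, which is why they show 0 in the summary.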

history = model.fit(x_train, y_train, epochs = 30, batch_size=32, validation_data=(x_val, y_val))

Epoch 2/30

1094/1094 [==============================] - 18s 17ms/step - loss: 1.3787 - accuracy: 0.5038 - val_loss: 1.2544 - val_accuracy: 0.5494

Epoch 3/30

1094/1094 [==============================] - 18s 17ms/step - loss: 1.2222 - accuracy: 0.5633 - val_loss: 1.1116 - val_accuracy: 0.6081

Epoch 4/30

1094/1094 [==============================] - 18s 17ms/step - loss: 1.0968 - accuracy: 0.6141 - val_loss: 1.0004 - val_accuracy: 0.6431

Epoch 5/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.9990 - accuracy: 0.6471 - val_loss: 0.9361 - val_accuracy: 0.6729

Epoch 6/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.9249 - accuracy: 0.6755 - val_loss: 0.8729 - val_accuracy: 0.6933

Epoch 7/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.8679 - accuracy: 0.6976 - val_loss: 0.8966 - val_accuracy: 0.6892

Epoch 8/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.8129 - accuracy: 0.7137 - val_loss: 0.8020 - val_accuracy: 0.7199

Epoch 9/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.7627 - accuracy: 0.7346 - val_loss: 0.8088 - val_accuracy: 0.7163

Epoch 10/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.7174 - accuracy: 0.7475 - val_loss: 0.7383 - val_accuracy: 0.7461

Epoch 11/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.6758 - accuracy: 0.7631 - val_loss: 0.7459 - val_accuracy: 0.7385

Epoch 12/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.6344 - accuracy: 0.7775 - val_loss: 0.7039 - val_accuracy: 0.7598

Epoch 13/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.6049 - accuracy: 0.7873 - val_loss: 0.6931 - val_accuracy: 0.7604

Epoch 14/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.5688 - accuracy: 0.8027 - val_loss: 0.6745 - val_accuracy: 0.7714

Epoch 15/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.5286 - accuracy: 0.8126 - val_loss: 0.6714 - val_accuracy: 0.7748

Epoch 16/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.5004 - accuracy: 0.8252 - val_loss: 0.6840 - val_accuracy: 0.7696

Epoch 17/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.4699 - accuracy: 0.8360 - val_loss: 0.6799 - val_accuracy: 0.7753

Epoch 18/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.4400 - accuracy: 0.8460 - val_loss: 0.7541 - val_accuracy: 0.7537

Epoch 19/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.4134 - accuracy: 0.8568 - val_loss: 0.6927 - val_accuracy: 0.7743

Epoch 20/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.3886 - accuracy: 0.8631 - val_loss: 0.6667 - val_accuracy: 0.7825

Epoch 21/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.3647 - accuracy: 0.8710 - val_loss: 0.6785 - val_accuracy: 0.7802

Epoch 22/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.3439 - accuracy: 0.8773 - val_loss: 0.7082 - val_accuracy: 0.7773

Epoch 23/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.3129 - accuracy: 0.8900 - val_loss: 0.6903 - val_accuracy: 0.7829

Epoch 24/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.2956 - accuracy: 0.8934 - val_loss: 0.7203 - val_accuracy: 0.7827

Epoch 25/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.2700 - accuracy: 0.9021 - val_loss: 0.7522 - val_accuracy: 0.7779

Epoch 26/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.2616 - accuracy: 0.9074 - val_loss: 0.7496 - val_accuracy: 0.7777

Epoch 27/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.2394 - accuracy: 0.9151 - val_loss: 0.7958 - val_accuracy: 0.7715

Epoch 28/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.2239 - accuracy: 0.9187 - val_loss: 0.7575 - val_accuracy: 0.7849

Epoch 29/30

1094/1094 [==============================] - 18s 17ms/step - loss: 0.2073 - accuracy: 0.9272 - val_loss: 0.8220 - val_accuracy: 0.7763

Epoch 30/30

1094/1094 [==============================] - 19s 17ms/step - loss: 0.1895 - accuracy: 0.9335 - val_loss: 0.8184 - val_accuracy: 0.7779
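In the log above, training loss keeps falling through epoch 30 while validation loss stops improving around epoch 20, a pattern usually read as overfitting. The sketch below finds the epoch with the lowest validation loss from the values in the log. The list starts at epoch 2 because the epoch 1 line is not shown in the post; the variable names are illustrative.

```python
# val_loss values copied from the log above, epochs 2 through 30.
first_logged_epoch = 2
val_loss = [
    1.2544, 1.1116, 1.0004, 0.9361, 0.8729, 0.8966, 0.8020, 0.8088,
    0.7383, 0.7459, 0.7039, 0.6931, 0.6745, 0.6714, 0.6840, 0.6799,
    0.7541, 0.6927, 0.6667, 0.6785, 0.7082, 0.6903, 0.7203, 0.7522,
    0.7496, 0.7958, 0.7575, 0.8220, 0.8184,
]

# Index of the smallest validation loss, converted back to an epoch number.
best_index = min(range(len(val_loss)), key=val_loss.__getitem__)
best_epoch = first_logged_epoch + best_index
best_val_loss = val_loss[best_index]

print(best_epoch, best_val_loss)  # 20 0.6667
print(val_loss[-1])               # 0.8184 at epoch 30
```

One common response to this pattern is to stop training near the best epoch, for example with an early-stopping callback; the post itself trains for the full 30 epochs.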

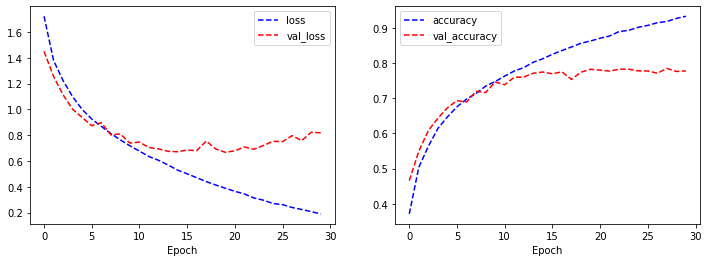

import matplotlib.pyplot as plt

plt.figure(figsize=(12,4))

plt.subplot(1,2,1)

plt.plot(history.history['loss'], 'b--', label='loss')

plt.plot(history.history['val_loss'], 'r--', label='val_loss')

plt.xlabel('Epoch')

plt.legend()

plt.subplot(1,2,2)

plt.plot(history.history['accuracy'], 'b--', label='accuracy')

plt.plot(history.history['val_accuracy'], 'r--', label='val_accuracy')

plt.xlabel('Epoch')

plt.legend()

plt.show()

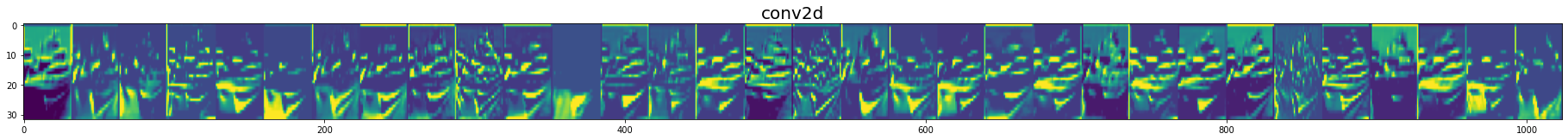

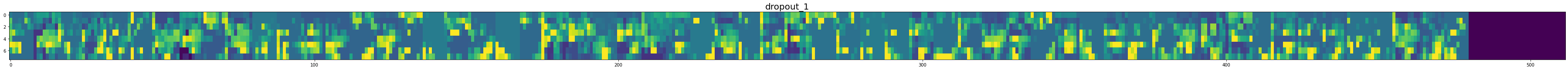

import tensorflow as tf

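get_layer_name = [layer.name for layer in model.layers]  # name of each layer

get_output = [layer.output for layer in model.layers]  # output tensor of each layer

# Build a model that returns the output of every layer of the trained model.

visual_model = tf.keras.models.Model(inputs = model.input, outputs = get_output)

# The second image in the test set is a ship.

# np.expand_dims adds a dimension (here, the batch axis).

test_img = np.expand_dims(x_test[1], axis = 0)  # a batch containing one image

feature_maps = visual_model.predict(test_img)  # list with one output array per layer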

for layer_name, feature_map in zip(get_layer_name, feature_maps):
    # Skip the Flatten and Dense layers, whose outputs are not 4-D.
    if len(feature_map.shape) == 4:
        img_size = feature_map.shape[1]
        features = feature_map.shape[-1]

        # There are `features` feature maps, each of size (img_size, img_size).
        display_grid = np.zeros((img_size, img_size * features))

        # Place each feature map side by side in display_grid.
        for i in range(features):
            x = feature_map[0, :, :, i]
            x -= x.mean()
            x /= (x.std() + 1e-5)  # small epsilon avoids division by zero for all-zero channels
            x *= 64; x += 128
            # np.clip(x, min, max): values below min become min, values above max become max.
            # uint8: unsigned 8-bit integer, stores only values from 0 to 255.
            x = np.clip(x, 0, 255).astype('uint8')
            display_grid[:, i*img_size : (i+1)*img_size] = x

        plt.figure(figsize = (features, 2 + 1./features))
        plt.title(layer_name, fontsize = 20)
        plt.grid(False)
        plt.imshow(display_grid, aspect = 'auto', cmap = 'viridis')
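The per-channel rescaling in the loop above can be tried without a trained model. The sketch below applies the same steps (standardize, scale by 64, shift by 128, clip to 0..255) to a synthetic activation. The function name `to_display_grid`, the `eps` value, and the fake data are assumptions made for illustration.

```python
import numpy as np


def to_display_grid(feature_map, eps=1e-5):
    """Tile the channels of a (1, H, W, C) activation into an (H, W*C) uint8 image."""
    _, height, width, channels = feature_map.shape
    grid = np.zeros((height, width * channels), dtype=np.uint8)
    for i in range(channels):
        # astype returns a copy, so the input array is left unchanged.
        x = feature_map[0, :, :, i].astype(np.float32)
        x = (x - x.mean()) / (x.std() + eps)  # eps guards against zero variance
        x = np.clip(x * 64 + 128, 0, 255).astype(np.uint8)
        grid[:, i * width:(i + 1) * width] = x
    return grid


# Fake ReLU-like activation with 4 channels; the last one is all zeros.
rng = np.random.default_rng(0)
fake_activation = np.maximum(rng.normal(size=(1, 16, 16, 4)), 0).astype(np.float32)
fake_activation[..., 3] = 0.0

grid = to_display_grid(fake_activation)
print(grid.shape, grid.dtype)  # (16, 64) uint8
```

With the epsilon in place, a channel that is zero everywhere maps to a flat mid-gray value of 128 instead of producing NaN values.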

feature_maps

array([[[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 1.39482296e+00],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

2.76024416e-02, 0.00000000e+00, 5.43535233e-01],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

1.38139203e-02, 0.00000000e+00, 5.73320389e-01],

...,

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

1.09774694e-02, 0.00000000e+00, 5.79168320e-01],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

1.65029764e-02, 0.00000000e+00, 5.72852731e-01],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

2.69572318e-01, 1.54419020e-01, 6.14448786e-01]],

[[8.70621726e-02, 1.16789293e+00, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 6.19573593e-01],

[0.00000000e+00, 3.38762790e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 2.36449093e-02],

[0.00000000e+00, 3.63755435e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 3.32385749e-02],

...,

[0.00000000e+00, 3.74371648e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 3.94297838e-02],

[0.00000000e+00, 3.64182293e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 3.97360772e-02],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

2.79395968e-01, 1.05052516e-01, 5.69859147e-01]],

[[9.11299512e-02, 1.19996595e+00, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 6.01863384e-01],

[0.00000000e+00, 3.73179615e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 1.75776482e-02],

[0.00000000e+00, 3.93598586e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 3.17991376e-02],

...,

[0.00000000e+00, 4.09143835e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 2.86802649e-02],

[0.00000000e+00, 3.96855444e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 3.05447578e-02],

[0.00000000e+00, 3.17612104e-03, 0.00000000e+00, ...,

2.83317327e-01, 1.04692817e-01, 5.77692389e-01]],

...,

[[0.00000000e+00, 0.00000000e+00, 6.22074790e-02, ...,

1.29832864e-01, 4.22493607e-01, 1.15428813e-01],

[0.00000000e+00, 0.00000000e+00, 1.04348838e-01, ...,

1.16021566e-01, 4.70879734e-01, 1.49464458e-01],

[0.00000000e+00, 0.00000000e+00, 7.95534253e-02, ...,

5.20418696e-02, 3.50514859e-01, 3.41182947e-01],

...,

[1.10264830e-02, 2.37248719e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 1.13192379e-01],

[0.00000000e+00, 2.84808189e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 8.39859173e-02],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

1.35430560e-01, 1.16444044e-02, 4.01980400e-01]],

[[0.00000000e+00, 0.00000000e+00, 5.82160428e-03, ...,

1.23548001e-01, 4.29432690e-01, 1.80128574e-01],

[0.00000000e+00, 0.00000000e+00, 2.48929821e-02, ...,

5.41288555e-02, 4.20156270e-01, 2.42548764e-01],

[0.00000000e+00, 0.00000000e+00, 5.73865324e-03, ...,

8.02730769e-02, 2.72210300e-01, 4.23867196e-01],

...,

[3.34452726e-02, 3.19640160e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 4.02029157e-02],

[0.00000000e+00, 3.15535009e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 4.96883318e-02],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

1.07538484e-01, 1.59807876e-03, 3.95719260e-01]],

[[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

9.56358314e-02, 3.18210661e-01, 4.88872856e-01],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

1.20503813e-01, 3.74177188e-01, 6.73626781e-01],

[0.00000000e+00, 0.00000000e+00, 0.00000000e+00, ...,

2.22301960e-01, 3.52858812e-01, 7.71849155e-01],

...,

[5.10937199e-02, 6.91264212e-01, 9.41429287e-04, ...,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00],

[5.38808331e-02, 7.41093576e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 0.00000000e+00],

[0.00000000e+00, 2.85340041e-01, 0.00000000e+00, ...,

0.00000000e+00, 0.00000000e+00, 1.04997531e-02]]], dtype=float32)

x_test[1].shape

(32, 32, 3)

test_img.shape

(1, 32, 32, 3)
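The two shapes above differ only by the leading batch axis, which Keras models expect even when predicting on a single image. A minimal sketch with a placeholder array (the zeros image is an assumption, not CIFAR-10 data):

```python
import numpy as np

single_image = np.zeros((32, 32, 3), dtype=np.float32)  # placeholder image

# Two equivalent ways to add a batch axis at position 0.
batch_of_one = np.expand_dims(single_image, axis=0)
same_batch = single_image[np.newaxis, ...]

print(single_image.shape)  # (32, 32, 3)
print(batch_of_one.shape)  # (1, 32, 32, 3)
print(same_batch.shape)    # (1, 32, 32, 3)
```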

