YOLO (You Only Look Once)

Loading XML files

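In multiple object detection, the labels cannot be arranged as structured (tabular) data.

So we sometimes need to load richer data formats, such as XML or JSON, that can describe several objects and their attributes.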
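As a minimal sketch of this with only the standard library, the snippet below parses an inline, made-up annotation that follows the VOC tag layout (object → name / difficult / bndbox). SAMPLE_XML and parse_objects are hypothetical names and the box values are invented; with the real dataset you would call ET.parse on a file path instead of ET.fromstring.

```python
import xml.etree.ElementTree as ET

# Hypothetical VOC-style annotation with made-up values; the real files in
# VOCdevkit/VOC2007/Annotations/*.xml use the same tag layout.
SAMPLE_XML = """
<annotation>
  <filename>sample.jpg</filename>
  <object>
    <name>chair</name>
    <difficult>0</difficult>
    <bndbox><xmin>100</xmin><ymin>150</ymin><xmax>220</xmax><ymax>300</ymax></bndbox>
  </object>
  <object>
    <name>person</name>
    <difficult>1</difficult>
    <bndbox><xmin>10</xmin><ymin>20</ymin><xmax>100</xmax><ymax>200</ymax></bndbox>
  </object>
</annotation>
"""

def parse_objects(xml_text):
    """Return a list of (name, difficult, (xmin, ymin, xmax, ymax)) tuples."""
    root = ET.fromstring(xml_text.strip())  # root is the <annotation> element
    objects = []
    for obj in root.iter('object'):  # visit every <object> element
        name = obj.find('name').text
        difficult = int(obj.find('difficult').text)
        box = obj.find('bndbox')
        coords = tuple(int(box.find(tag).text) for tag in ('xmin', 'ymin', 'xmax', 'ymax'))
        objects.append((name, difficult, coords))
    return objects

print(parse_objects(SAMPLE_XML))
```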

import tensorflow as tf

import xml.etree.ElementTree as ET # Python library for parsing XML files

import pathlib

import matplotlib.pyplot as plt

import matplotlib.patches as mpt

src = 'VOCdevkit/VOC2007/Annotations/000005.xml'
# Source: http://host.robots.ox.ac.uk/pascal/VOC/voc2007/examples/index.html

tree = ET.parse(src) # load the XML file structure (as a tree)

root = tree.getroot() # set the starting point (find the root)

root # 'annotation' is the top-level root tag

# <Element 'annotation' at 0x16107dcc0>

for i in root.iter('object'): # there are 5 objects, each with name / pose / truncated / difficult / bndbox

    print(i)

# <Element 'object' at 0x163992a90>

# <Element 'object' at 0x163992db0>

# <Element 'object' at 0x163995130>

# <Element 'object' at 0x163995450>

# <Element 'object' at 0x163995770>

for i in root.iter('object'): # only the object information is needed, so read just the children of each object tag
    difficult = i.find('difficult').text
    cls = i.find('name').text

Building the class-name key/value pairs

classes = '''
Aeroplanes
Bicycles
Birds
Boats
Bottles
Buses
Cars
Cats
Chairs
Cows
Dining tables
Dogs
Horses
Motorbikes
People
Potted plants
Sheep
Sofas
Trains
TVMonitors
'''

classes = [i.lower() for i in classes.strip().split('\n')]
classes = [i[:-1] if i[-1]=='s' else i for i in classes]
classes

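['aeroplane', 'bicycle', 'bird', 'boat', 'bottle', 'buse', 'car', 'cat', 'chair', 'cow', 'dining table', 'dog', 'horse', 'motorbike', 'people', 'potted plant', 'sheep', 'sofa', 'train', 'tvmonitor']

Simply dropping a trailing "s" produces names that do not match the tags in the VOC annotation files ('buse', 'dining table', 'potted plant'), so the list is written out by hand with the exact VOC tag names. The VOC person class is tagged 'person', not 'people'; with 'people' in the list, every person box would be silently skipped by the "cls not in classes" check in annote() (the sample outputs further down were produced that way, which is why they contain no class 14).

classes = ['aeroplane', 'bicycle', 'bird', 'boat', 'bottle',
           'bus', 'car', 'cat', 'chair', 'cow',
           'diningtable', 'dog', 'horse', 'motorbike', 'person',
           'pottedplant', 'sheep', 'sofa', 'train', 'tvmonitor']

classes = dict(zip(classes, range(20)))

def annote(img_id):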

    '''
    Return the class id and the four box coordinates of every object that belongs to one of the 20 classes
    '''
    src = f'VOCdevkit/VOC2007/Annotations/{img_id}.xml'
    tree = ET.parse(src)
    root = tree.getroot()
    temp = []
    for i in root.iter('object'):
        difficult = i.find('difficult').text
        cls = i.find('name').text
        if cls not in classes: # or int(difficult) == 1
            continue
        cls_id = classes[cls]
        xmlbox = i.find('bndbox')
        xmin = int(xmlbox.find('xmin').text)
        ymin = int(xmlbox.find('ymin').text)
        xmax = int(xmlbox.find('xmax').text)
        ymax = int(xmlbox.find('ymax').text)
        bb = (xmin,ymin,xmax,ymax)
        temp.append((cls_id, bb))
    return temp

path = pathlib.Path('VOCdevkit/VOC2007/Annotations')
for i in path.iterdir():
    print(i.stem) # file name without the .xml extension, e.g. '007826'

007826
002786
006286
002962
...
004488
005796
002947

imgs = [i.stem for i in path.iterdir()]

imgs

['007826', '002786', '006286', '002962', '008297', ..., '004682', '006095', '001922', '000382', ...]

'VOCdevkit/VOC2007/JPEGImages/'+imgs[0]+'.jpg'
# 'VOCdevkit/VOC2007/JPEGImages/007826.jpg'

temp = []
for i in imgs:
    temp.append(annote(i))

len(temp)
# 5011

temp

[[(10, (80, 217, 320, 273)),
  (8, (197, 193, 257, 326)),
  (8, (139, 184, 185, 231)),
  ...
 [(9, (264, 148, 496, 280)), (12, (98, 123, 237, 248))],
 [(0, (65, 75, 470, 269))],
 [(3, (3, 167, 163, 500))],
 ...]

annote(imgs[0])

[(10, (80, 217, 320, 273)),

(8, (197, 193, 257, 326)),

(8, (139, 184, 185, 231)),

(8, (258, 180, 312, 314)),

(8, (10, 195, 93, 358)),

(8, (82, 252, 243, 372)),

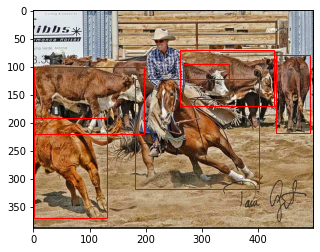

(8, (43, 319, 144, 375))]def show(img_id):

    '''
    Draw the bounding boxes on an image (img_id is an index into imgs, not a VOC image id)
    '''
    im = plt.imread('VOCdevkit/VOC2007/JPEGImages/'+str(imgs[img_id])+'.jpg')
    fig, ax = plt.subplots(1,1)
    for i in annote(imgs[img_id]):
        # Rectangle takes the top-left corner (xmin, ymin), then the width and height
        rec = mpt.Rectangle((i[1][0],i[1][1]), i[1][2]-i[1][0], i[1][3]-i[1][1], edgecolor='red', fill=False)
        ax.add_patch(rec)
    ax.imshow(im)

show(100)

show(1100)

How to load the data and build the training set (to feed training data to a multiple-object detection model)

1. Load the data from the files

2. Process it into the desired data structure # e.g. 'filename', (10,20,100,200), '1'

3. Convert it into a DataFrame

4. Turn it into a generator or a tf.data.Dataset
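For YOLO, step 2 eventually means turning each image's corner-format boxes into the 7×7×30 target grid the network predicts. The following is a rough numpy sketch under stated assumptions: encode_target is a hypothetical helper, the per-cell layout is assumed to be [x, y, w, h, confidence] in the first box slot followed by 20 one-hot class entries at positions 10 to 29, and only the first object whose center lands in a cell is kept. Following the YOLOv1 paper, (x, y) is the box center relative to its grid cell and (w, h) are normalized by the image size.

```python
import numpy as np

S, B, C = 7, 2, 20  # grid size, boxes per cell, number of VOC classes

def encode_target(objects, img_w, img_h):
    """Hypothetical helper. objects: list of (class_id, (xmin, ymin, xmax, ymax)),
    the format annote() returns. Returns an (S, S, B*5 + C) float32 array."""
    target = np.zeros((S, S, B * 5 + C), dtype=np.float32)
    for cls_id, (xmin, ymin, xmax, ymax) in objects:
        # box center and size, normalized by the image size to [0, 1]
        cx = (xmin + xmax) / 2 / img_w
        cy = (ymin + ymax) / 2 / img_h
        w = (xmax - xmin) / img_w
        h = (ymax - ymin) / img_h
        # grid cell containing the center (clamped so cx == 1.0 stays inside)
        col = min(int(cx * S), S - 1)
        row = min(int(cy * S), S - 1)
        if target[row, col, 4] == 1.0:
            continue  # assumption: keep only the first object per cell
        # center offset relative to the top-left corner of its cell
        target[row, col, 0:5] = [cx * S - col, cy * S - row, w, h, 1.0]
        target[row, col, B * 5 + cls_id] = 1.0  # one-hot class entry
    return target

target = encode_target([(3, (0, 0, 64, 64))], img_w=448, img_h=448)
print(target.shape)      # (7, 7, 30)
print(target[0, 0, :5])  # x, y, w, h, confidence of the responsible cell
```

Rows of such targets, one per image, are what a generator or tf.data.Dataset would hand to the model alongside the resized images.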

# Subclassing approach (tied to the framework, but lets you use TensorFlow-optimized features) / it behaves like sequence data, so training data can be fed flexibly

tf.keras.utils.Sequence

# keras.utils.data_utils.Sequence

tf.keras.preprocessing.image_dataset_from_directory()

tf.keras.preprocessing.image.ImageDataGenerator()

flow_from_directory

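flow_from_dataframe # generator

tf.data.Dataset

NumPy arrays can be passed straight to training and are converted to tensors automatically, but the whole dataset is then loaded into memory at once, so depending on the machine, training may not be possible at all.

Memory is also used inefficiently, which can make training comparatively slow.

tf.data.Dataset, by contrast, is optimized internally: it can overlap CPU-side data preparation with GPU-side training and supports techniques such as prefetch and cache.

That is why loading the data as a generator or converting it to a tf.data.Dataset matters.

(The catch is that it is evaluated lazily, so even inspecting a single element is quite cumbersome.)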
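To see the lazy behaviour in plain Python, here is a minimal generator sketch. record_generator and fake_annote are hypothetical names (fake_annote stands in for annote() so the snippet runs without the dataset). Nothing is read until an element is requested, and next() is how a single element is inspected. A generator like this could then be wrapped with tf.data.Dataset.from_generator, and dataset.take(1) is the corresponding way to peek at one element of a tf.data.Dataset.

```python
def record_generator(image_ids, annotate):
    """Yield (image_path, annotations) pairs lazily, one image at a time."""
    for img_id in image_ids:
        # annotate() only runs when the consumer asks for the next element
        yield f'VOCdevkit/VOC2007/JPEGImages/{img_id}.jpg', annotate(img_id)

calls = []  # records which ids were actually annotated

def fake_annote(img_id):
    """Hypothetical stand-in for annote() so the sketch runs without the dataset."""
    calls.append(img_id)
    return [(8, (0, 0, 10, 10))]

gen = record_generator(['007826', '002786', '006286'], fake_annote)
print(calls)       # [] : creating the generator reads nothing
first = next(gen)  # inspecting one element triggers exactly one annotation read
print(first)
print(calls)       # ['007826']
```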

Network Design

lre = tf.keras.layers.LeakyReLU(alpha=0.1) # commonly used activations are also provided as layers

input_ = tf.keras.Input((448,448,3))

x = tf.keras.layers.Conv2D(64,7,2, padding='same', activation=lre)(input_)

x = tf.keras.layers.MaxPool2D(2,2)(x)

x = tf.keras.layers.Conv2D(192,3, padding='same', activation=lre)(x)

x = tf.keras.layers.MaxPool2D(2,2)(x)

x = tf.keras.layers.Conv2D(128,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(256,3, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(256,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(512,3, padding='same', activation=lre)(x)

x = tf.keras.layers.MaxPool2D(2,2)(x)

x = tf.keras.layers.Conv2D(256,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(512,3, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(256,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(512,3, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(256,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(512,3, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(256,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(512,3, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(512,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(1024,3, padding='same', activation=lre)(x)

x = tf.keras.layers.MaxPool2D(2,2)(x)

x = tf.keras.layers.Conv2D(512,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(1024,3, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(512,1, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(1024,3, padding='same', activation=lre)(x)

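x = tf.keras.layers.Conv2D(1024,3,2, padding='same', activation=lre)(x) # the paper has one more Conv2D(1024,3) before this stride-2 layer (24 conv layers in total); it is left out here, and the summary below reflects that

x = tf.keras.layers.Conv2D(1024,3, padding='same', activation=lre)(x)

x = tf.keras.layers.Conv2D(1024,3, padding='same', activation=lre)(x) # the paper uses leaky ReLU on every layer except the final (output) layer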

x = tf.keras.layers.Flatten()(x)

x = tf.keras.layers.Dense(4096, activation=lre)(x)

x = tf.keras.layers.Dropout(0.5)(x)

x = tf.keras.layers.Dense(1470)(x)

x = tf.keras.layers.Reshape((7,7,30))(x)

model = tf.keras.models.Model(input_, x)

model.summary()

Model: "model"

| Layer (type) | Output Shape | Param # |
|---|---|---|
| input_1 (InputLayer) | [(None, 448, 448, 3)] | 0 |
| conv2d (Conv2D) | (None, 224, 224, 64) | 9472 |
| max_pooling2d (MaxPooling2D) | (None, 112, 112, 64) | 0 |
| conv2d_1 (Conv2D) | (None, 112, 112, 192) | 110784 |
| max_pooling2d_1 (MaxPooling2D) | (None, 56, 56, 192) | 0 |
| conv2d_2 (Conv2D) | (None, 56, 56, 128) | 24704 |
| conv2d_3 (Conv2D) | (None, 56, 56, 256) | 295168 |
| conv2d_4 (Conv2D) | (None, 56, 56, 256) | 65792 |
| conv2d_5 (Conv2D) | (None, 56, 56, 512) | 1180160 |
| max_pooling2d_2 (MaxPooling2D) | (None, 28, 28, 512) | 0 |
| conv2d_6 (Conv2D) | (None, 28, 28, 256) | 131328 |
| conv2d_7 (Conv2D) | (None, 28, 28, 512) | 1180160 |
| conv2d_8 (Conv2D) | (None, 28, 28, 256) | 131328 |
| conv2d_9 (Conv2D) | (None, 28, 28, 512) | 1180160 |
| conv2d_10 (Conv2D) | (None, 28, 28, 256) | 131328 |
| conv2d_11 (Conv2D) | (None, 28, 28, 512) | 1180160 |
| conv2d_12 (Conv2D) | (None, 28, 28, 256) | 131328 |
| conv2d_13 (Conv2D) | (None, 28, 28, 512) | 1180160 |
| conv2d_14 (Conv2D) | (None, 28, 28, 512) | 262656 |
| conv2d_15 (Conv2D) | (None, 28, 28, 1024) | 4719616 |
| max_pooling2d_3 (MaxPooling2D) | (None, 14, 14, 1024) | 0 |
| conv2d_16 (Conv2D) | (None, 14, 14, 512) | 524800 |
| conv2d_17 (Conv2D) | (None, 14, 14, 1024) | 4719616 |
| conv2d_18 (Conv2D) | (None, 14, 14, 512) | 524800 |
| conv2d_19 (Conv2D) | (None, 14, 14, 1024) | 4719616 |
| conv2d_20 (Conv2D) | (None, 7, 7, 1024) | 9438208 |
| conv2d_21 (Conv2D) | (None, 7, 7, 1024) | 9438208 |
| conv2d_22 (Conv2D) | (None, 7, 7, 1024) | 9438208 |
| flatten (Flatten) | (None, 50176) | 0 |
| dense (Dense) | (None, 4096) | 205524992 |
| dropout (Dropout) | (None, 4096) | 0 |
| dense_1 (Dense) | (None, 1470) | 6022590 |
| reshape (Reshape) | (None, 7, 7, 30) | 0 |

Total params: 262,265,342

Trainable params: 262,265,342

Non-trainable params: 0
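The numbers in the summary can be checked by hand: a Conv2D layer has k·k·c_in·c_out weights plus c_out biases, a Dense layer has n_in·n_out weights plus n_out biases, and six halvings (one stride-2 convolution, four max-pools, another stride-2 convolution) take 448 down to 7. A small arithmetic sketch, with conv_params and dense_params as illustrative helper names and the layer list copied from the 23 Conv2D layers defined above:

```python
def conv_params(k, c_in, c_out):
    # k*k*c_in weights per filter, plus one bias per filter
    return k * k * c_in * c_out + c_out

def dense_params(n_in, n_out):
    # full weight matrix, plus one bias per output unit
    return n_in * n_out + n_out

# (kernel size, input channels, output channels) of each Conv2D layer in the model above
convs = ([(7, 3, 64), (3, 64, 192),
          (1, 192, 128), (3, 128, 256), (1, 256, 256), (3, 256, 512)]
         + [(1, 512, 256), (3, 256, 512)] * 4
         + [(1, 512, 512), (3, 512, 1024)]
         + [(1, 1024, 512), (3, 512, 1024)] * 2
         + [(3, 1024, 1024)] * 3)

total = sum(conv_params(*c) for c in convs)
total += dense_params(7 * 7 * 1024, 4096)  # Flatten (7*7*1024 = 50176) -> Dense(4096)
total += dense_params(4096, 7 * 7 * 30)    # Dense(1470), later reshaped to (7, 7, 30)
print(total)  # 262265342, the "Total params" line of model.summary()

# spatial size: stride-2 conv + 4 max-pools + stride-2 conv = six halvings
size = 448
for _ in range(6):
    size //= 2
print(size)  # 7
```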

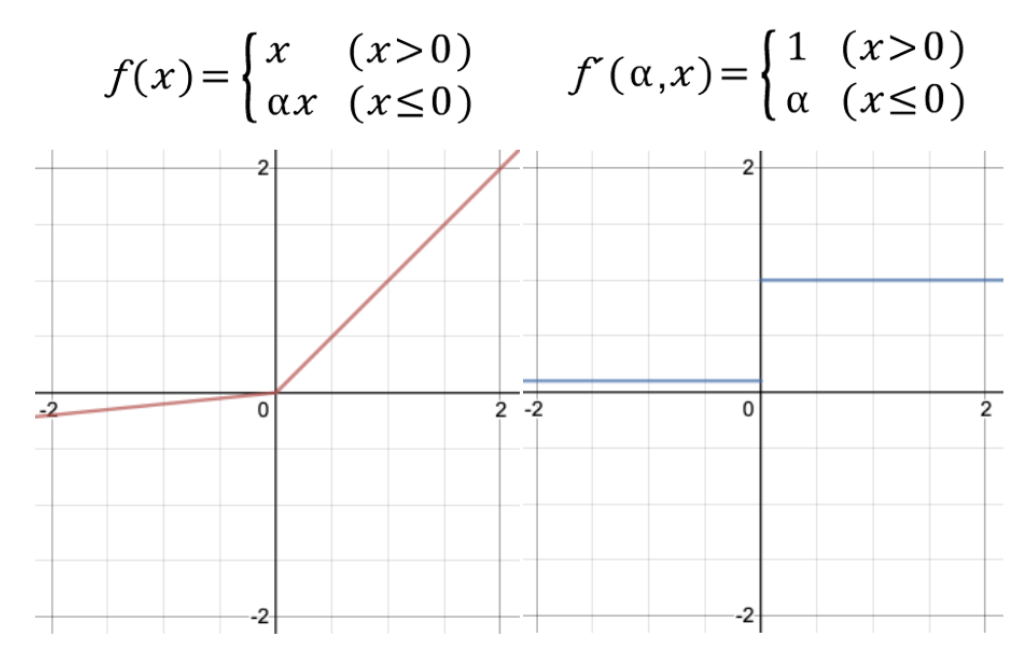

_________________________________________________________________Leaky ReLU
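Leaky ReLU, as used in the YOLOv1 paper, is the identity for positive inputs and 0.1·x otherwise, which is what LeakyReLU(alpha=0.1) above computes. A short numpy sketch (leaky_relu is a hypothetical helper, not the Keras layer):

```python
import numpy as np

def leaky_relu(x, alpha=0.1):
    # identity for positive inputs, small slope alpha for the rest
    return np.where(x > 0, x, alpha * x)

# negative inputs are scaled by 0.1 instead of being zeroed as in plain ReLU
print(leaky_relu(np.array([-2.0, -0.5, 0.0, 3.0])))
```

Keeping a small slope for negative inputs means those units still receive a gradient, unlike plain ReLU.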

Loss function

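Each of the 7×7 grid cells outputs 30 values: 10 for the two bounding boxes (x, y, w, h and a confidence score for each box) and 20 for the class probabilities.

To train a network to produce this output, the loss function has to be implemented by hand, using custom layers.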
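One way to see the 30 values per cell is to split the output tensor. This numpy sketch assumes the per-cell order [box 1 (x, y, w, h, conf), box 2 (x, y, w, h, conf), 20 class scores]; that order is a convention chosen by whoever writes the loss, not something the Reshape layer enforces, and split_prediction is a hypothetical helper. At test time the paper multiplies the conditional class probabilities by each box's confidence, which the last line reproduces.

```python
import numpy as np

S, B, C = 7, 2, 20  # grid size, boxes per cell, classes

def split_prediction(pred):
    """pred: (..., S, S, B*5 + C). Returns boxes (..., S, S, B, 5) and class scores (..., S, S, C)."""
    boxes = pred[..., :B * 5].reshape(pred.shape[:-1] + (B, 5))
    class_scores = pred[..., B * 5:]
    return boxes, class_scores

pred = np.random.default_rng(0).random((S, S, B * 5 + C))  # stand-in for one model output
boxes, cls = split_prediction(pred)
print(boxes.shape, cls.shape)  # (7, 7, 2, 5) (7, 7, 20)

# class-specific confidence per box: Pr(class | object) * box confidence
class_conf = cls[..., None, :] * boxes[..., 4:5]  # (7, 7, 2, 20)
```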

Regression

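Using a linear activation (the identity, which passes values through unchanged) or no activation at all in the output layer turns the model into a regression model.

Then use MSE instead of cross-entropy as the loss function.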
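As an illustration of the squared-error idea, here is a numpy sketch of only the coordinate term of the YOLOv1 loss, with λ_coord = 5 as in the paper and square roots on w and h so that the same absolute error weighs more on a small box than on a large one. coord_loss is a hypothetical helper, and obj_mask is assumed to already mark the predictor responsible for each object; the full loss also has confidence terms (with λ_noobj = 0.5 for predictors without objects) and a class-probability term.

```python
import numpy as np

LAMBDA_COORD = 5.0  # weight on the coordinate term in the YOLOv1 paper

def coord_loss(pred_box, true_box, obj_mask):
    """pred_box, true_box: (..., 4) arrays of (x, y, w, h) with w, h >= 0.
    obj_mask: (...,) array, 1 where this predictor is responsible for an object."""
    xy_err = np.sum((pred_box[..., :2] - true_box[..., :2]) ** 2, axis=-1)
    # compare square roots of width/height, as in the paper
    wh_err = np.sum((np.sqrt(pred_box[..., 2:4]) - np.sqrt(true_box[..., 2:4])) ** 2, axis=-1)
    return LAMBDA_COORD * np.sum(obj_mask * (xy_err + wh_err))

p = np.array([[0.5, 0.5, 0.25, 0.25]])
t = np.array([[0.5, 0.5, 0.16, 0.36]])
print(coord_loss(p, t, np.array([1.0])))  # 5 * ((0.5-0.4)**2 + (0.5-0.6)**2), about 0.1
```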

!pip install -U tensorflow-addons

# An add-on package collecting recent techniques and less essential components / provides layers that core TensorFlow does not have

!pip install typeguard

# How to install tensorflow addons on mac with m1

!pip install --upgrade --force --no-dependencies https://github.com/apple/tensorflow_macos/releases/download/v0.1alpha3/tensorflow_addons_macos-0.1a3-cp38-cp38-macosx_11_0_arm64.whl
