OR gate with TensorFlow

import tensorflow as tf

import numpy as np

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import SGD

from tensorflow.keras.losses import mse

tf.random.set_seed(777)

# Data

data = np.array([[0,0],[1,0],[0,1],[1,1]])

# Labels

label = np.array([[0],[1],[1],[1]])

model = Sequential()

model.add(Dense(1, input_shape = (2,), activation = 'linear')) # perceptron

# Model configuration

# GD: gradient descent; SGD: stochastic gradient descent (updates on mini-batches)

# loss: the loss function, also called the cost function

model.compile(optimizer = SGD(), loss = mse, metrics = ['acc'])

# With epochs = 100, training ends before the model has fit the data, so 200 is used

model.fit(data, label, epochs = 200)

# The learned values (weights and bias)

model.get_weights()

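# In classical machine learning you assign the values one by one; in deep learning you hand over labeled data and the model finds them on its own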

model.predict(data)

model.evaluate(data, label) # evaluation: returns the loss and the accuracy

Epoch 1/200

1/1 [==============================] - 1s 1s/step - loss: 1.4290 - acc: 0.5000

Epoch 2/200

1/1 [==============================] - 0s 3ms/step - loss: 1.3602 - acc: 0.5000

Epoch 3/200

1/1 [==============================] - 0s 2ms/step - loss: 1.2956 - acc: 0.5000

Epoch 4/200

1/1 [==============================] - 0s 2ms/step - loss: 1.2349 - acc: 0.5000

Epoch 5/200

1/1 [==============================] - 0s 2ms/step - loss: 1.1779 - acc: 0.5000

Epoch 6/200

1/1 [==============================] - 0s 3ms/step - loss: 1.1242 - acc: 0.5000

Epoch 7/200

1/1 [==============================] - 0s 2ms/step - loss: 1.0738 - acc: 0.5000

Epoch 8/200

1/1 [==============================] - 0s 3ms/step - loss: 1.0264 - acc: 0.5000

Epoch 9/200

1/1 [==============================] - 0s 3ms/step - loss: 0.9819 - acc: 0.5000

Epoch 10/200

1/1 [==============================] - 0s 2ms/step - loss: 0.9399 - acc: 0.5000

Epoch 11/200

1/1 [==============================] - 0s 3ms/step - loss: 0.9005 - acc: 0.5000

Epoch 12/200

1/1 [==============================] - 0s 2ms/step - loss: 0.8634 - acc: 0.5000

Epoch 13/200

1/1 [==============================] - 0s 2ms/step - loss: 0.8284 - acc: 0.5000

Epoch 14/200

1/1 [==============================] - 0s 3ms/step - loss: 0.7955 - acc: 0.5000

Epoch 15/200

1/1 [==============================] - 0s 2ms/step - loss: 0.7646 - acc: 0.5000

Epoch 16/200

1/1 [==============================] - 0s 2ms/step - loss: 0.7354 - acc: 0.5000

Epoch 17/200

1/1 [==============================] - 0s 1000us/step - loss: 0.7079 - acc: 0.5000

Epoch 18/200

1/1 [==============================] - 0s 2ms/step - loss: 0.6820 - acc: 0.5000

Epoch 19/200

1/1 [==============================] - 0s 2ms/step - loss: 0.6576 - acc: 0.5000

Epoch 20/200

1/1 [==============================] - 0s 3ms/step - loss: 0.6346 - acc: 0.5000

Epoch 21/200

1/1 [==============================] - 0s 2ms/step - loss: 0.6129 - acc: 0.5000

Epoch 22/200

1/1 [==============================] - 0s 2ms/step - loss: 0.5925 - acc: 0.5000

Epoch 23/200

1/1 [==============================] - 0s 2ms/step - loss: 0.5732 - acc: 0.5000

Epoch 24/200

1/1 [==============================] - 0s 2ms/step - loss: 0.5549 - acc: 0.5000

Epoch 25/200

1/1 [==============================] - 0s 2ms/step - loss: 0.5377 - acc: 0.5000

Epoch 26/200

1/1 [==============================] - 0s 2ms/step - loss: 0.5215 - acc: 0.5000

Epoch 27/200

1/1 [==============================] - 0s 2ms/step - loss: 0.5061 - acc: 0.5000

Epoch 28/200

1/1 [==============================] - 0s 3ms/step - loss: 0.4916 - acc: 0.5000

Epoch 29/200

1/1 [==============================] - 0s 2ms/step - loss: 0.4778 - acc: 0.5000

Epoch 30/200

1/1 [==============================] - 0s 3ms/step - loss: 0.4648 - acc: 0.5000

Epoch 31/200

1/1 [==============================] - 0s 2ms/step - loss: 0.4525 - acc: 0.7500

Epoch 32/200

1/1 [==============================] - 0s 2ms/step - loss: 0.4409 - acc: 0.7500

Epoch 33/200

1/1 [==============================] - 0s 2ms/step - loss: 0.4298 - acc: 0.7500

Epoch 34/200

1/1 [==============================] - 0s 2ms/step - loss: 0.4193 - acc: 0.7500

Epoch 35/200

1/1 [==============================] - 0s 2ms/step - loss: 0.4094 - acc: 0.7500

Epoch 36/200

1/1 [==============================] - 0s 3ms/step - loss: 0.4000 - acc: 0.7500

Epoch 37/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3911 - acc: 0.7500

Epoch 38/200

1/1 [==============================] - 0s 3ms/step - loss: 0.3826 - acc: 0.7500

Epoch 39/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3745 - acc: 0.7500

Epoch 40/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3668 - acc: 0.7500

Epoch 41/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3595 - acc: 0.7500

Epoch 42/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3525 - acc: 0.7500

Epoch 43/200

1/1 [==============================] - 0s 3ms/step - loss: 0.3459 - acc: 0.7500

Epoch 44/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3396 - acc: 0.7500

Epoch 45/200

1/1 [==============================] - 0s 3ms/step - loss: 0.3336 - acc: 0.7500

Epoch 46/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3278 - acc: 0.7500

Epoch 47/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3223 - acc: 0.7500

Epoch 48/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3170 - acc: 0.7500

Epoch 49/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3120 - acc: 0.7500

Epoch 50/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3072 - acc: 0.7500

Epoch 51/200

1/1 [==============================] - 0s 2ms/step - loss: 0.3026 - acc: 0.7500

Epoch 52/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2982 - acc: 0.7500

Epoch 53/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2939 - acc: 0.7500

Epoch 54/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2898 - acc: 0.7500

Epoch 55/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2859 - acc: 0.7500

Epoch 56/200

1/1 [==============================] - 0s 1ms/step - loss: 0.2822 - acc: 0.7500

Epoch 57/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2785 - acc: 0.7500

Epoch 58/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2750 - acc: 0.7500

Epoch 59/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2717 - acc: 0.7500

Epoch 60/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2684 - acc: 0.7500

Epoch 61/200

1/1 [==============================] - 0s 3ms/step - loss: 0.2653 - acc: 0.7500

Epoch 62/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2623 - acc: 0.7500

Epoch 63/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2594 - acc: 0.7500

Epoch 64/200

1/1 [==============================] - 0s 1ms/step - loss: 0.2565 - acc: 0.7500

Epoch 65/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2538 - acc: 0.7500

Epoch 66/200

1/1 [==============================] - 0s 3ms/step - loss: 0.2511 - acc: 0.7500

Epoch 67/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2486 - acc: 0.7500

Epoch 68/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2461 - acc: 0.7500

Epoch 69/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2436 - acc: 0.7500

Epoch 70/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2413 - acc: 0.7500

Epoch 71/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2390 - acc: 0.7500

Epoch 72/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2368 - acc: 0.7500

Epoch 73/200

1/1 [==============================] - 0s 3ms/step - loss: 0.2346 - acc: 0.7500

Epoch 74/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2325 - acc: 0.7500

Epoch 75/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2304 - acc: 0.7500

Epoch 76/200

1/1 [==============================] - 0s 3ms/step - loss: 0.2284 - acc: 0.7500

Epoch 77/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2264 - acc: 0.7500

Epoch 78/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2245 - acc: 0.7500

Epoch 79/200

1/1 [==============================] - 0s 1ms/step - loss: 0.2226 - acc: 0.7500

Epoch 80/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2208 - acc: 0.7500

Epoch 81/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2190 - acc: 0.7500

Epoch 82/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2173 - acc: 0.7500

Epoch 83/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2155 - acc: 0.7500

Epoch 84/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2139 - acc: 0.7500

Epoch 85/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2122 - acc: 0.7500

Epoch 86/200

1/1 [==============================] - 0s 3ms/step - loss: 0.2106 - acc: 0.7500

Epoch 87/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2090 - acc: 0.7500

Epoch 88/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2074 - acc: 0.7500

Epoch 89/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2059 - acc: 0.7500

Epoch 90/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2044 - acc: 0.7500

Epoch 91/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2029 - acc: 0.7500

Epoch 92/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2015 - acc: 0.7500

Epoch 93/200

1/1 [==============================] - 0s 2ms/step - loss: 0.2000 - acc: 0.7500

Epoch 94/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1986 - acc: 0.7500

Epoch 95/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1973 - acc: 0.7500

Epoch 96/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1959 - acc: 0.7500

Epoch 97/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1946 - acc: 0.7500

Epoch 98/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1932 - acc: 0.7500

Epoch 99/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1919 - acc: 0.7500

Epoch 100/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1907 - acc: 0.7500

Epoch 101/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1894 - acc: 0.7500

Epoch 102/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1881 - acc: 0.7500

Epoch 103/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1869 - acc: 0.7500

Epoch 104/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1857 - acc: 0.7500

Epoch 105/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1845 - acc: 0.7500

Epoch 106/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1833 - acc: 0.7500

Epoch 107/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1822 - acc: 0.7500

Epoch 108/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1810 - acc: 0.7500

Epoch 109/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1799 - acc: 0.7500

Epoch 110/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1787 - acc: 0.7500

Epoch 111/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1776 - acc: 0.7500

Epoch 112/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1765 - acc: 0.7500

Epoch 113/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1755 - acc: 0.7500

Epoch 114/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1744 - acc: 0.7500

Epoch 115/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1733 - acc: 0.7500

Epoch 116/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1723 - acc: 0.7500

Epoch 117/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1712 - acc: 0.7500

Epoch 118/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1702 - acc: 0.7500

Epoch 119/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1692 - acc: 0.7500

Epoch 120/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1682 - acc: 0.7500

Epoch 121/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1672 - acc: 0.7500

Epoch 122/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1663 - acc: 0.7500

Epoch 123/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1653 - acc: 0.7500

Epoch 124/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1643 - acc: 0.7500

Epoch 125/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1634 - acc: 0.7500

Epoch 126/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1625 - acc: 0.7500

Epoch 127/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1615 - acc: 0.7500

Epoch 128/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1606 - acc: 0.7500

Epoch 129/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1597 - acc: 0.7500

Epoch 130/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1588 - acc: 0.7500

Epoch 131/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1579 - acc: 0.7500

Epoch 132/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1570 - acc: 0.7500

Epoch 133/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1562 - acc: 0.7500

Epoch 134/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1553 - acc: 0.7500

Epoch 135/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1545 - acc: 0.7500

Epoch 136/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1536 - acc: 0.7500

Epoch 137/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1528 - acc: 0.7500

Epoch 138/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1520 - acc: 0.7500

Epoch 139/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1511 - acc: 0.7500

Epoch 140/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1503 - acc: 0.7500

Epoch 141/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1495 - acc: 0.7500

Epoch 142/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1487 - acc: 0.7500

Epoch 143/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1480 - acc: 0.7500

Epoch 144/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1472 - acc: 0.7500

Epoch 145/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1464 - acc: 0.7500

Epoch 146/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1456 - acc: 0.7500

Epoch 147/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1449 - acc: 0.7500

Epoch 148/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1441 - acc: 0.7500

Epoch 149/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1434 - acc: 0.7500

Epoch 150/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1427 - acc: 0.7500

Epoch 151/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1419 - acc: 0.7500

Epoch 152/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1412 - acc: 0.7500

Epoch 153/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1405 - acc: 0.7500

Epoch 154/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1398 - acc: 0.7500

Epoch 155/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1391 - acc: 0.7500

Epoch 156/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1384 - acc: 0.7500

Epoch 157/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1377 - acc: 0.7500

Epoch 158/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1370 - acc: 0.7500

Epoch 159/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1364 - acc: 0.7500

Epoch 160/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1357 - acc: 0.7500

Epoch 161/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1350 - acc: 0.7500

Epoch 162/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1344 - acc: 0.7500

Epoch 163/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1337 - acc: 0.7500

Epoch 164/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1331 - acc: 0.7500

Epoch 165/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1325 - acc: 0.7500

Epoch 166/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1318 - acc: 0.7500

Epoch 167/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1312 - acc: 0.7500

Epoch 168/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1306 - acc: 0.7500

Epoch 169/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1300 - acc: 0.7500

Epoch 170/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1294 - acc: 0.7500

Epoch 171/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1288 - acc: 0.7500

Epoch 172/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1282 - acc: 0.7500

Epoch 173/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1276 - acc: 0.7500

Epoch 174/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1270 - acc: 0.7500

Epoch 175/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1264 - acc: 0.7500

Epoch 176/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1258 - acc: 0.7500

Epoch 177/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1253 - acc: 0.7500

Epoch 178/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1247 - acc: 0.7500

Epoch 179/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1242 - acc: 0.7500

Epoch 180/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1236 - acc: 0.7500

Epoch 181/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1231 - acc: 0.7500

Epoch 182/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1225 - acc: 0.7500

Epoch 183/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1220 - acc: 0.7500

Epoch 184/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1214 - acc: 0.7500

Epoch 185/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1209 - acc: 0.7500

Epoch 186/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1204 - acc: 0.7500

Epoch 187/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1199 - acc: 0.7500

Epoch 188/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1194 - acc: 0.7500

Epoch 189/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1189 - acc: 0.7500

Epoch 190/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1184 - acc: 0.7500

Epoch 191/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1179 - acc: 0.7500

Epoch 192/200

1/1 [==============================] - 0s 3ms/step - loss: 0.1174 - acc: 1.0000

Epoch 193/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1169 - acc: 1.0000

Epoch 194/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1164 - acc: 1.0000

Epoch 195/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1159 - acc: 1.0000

Epoch 196/200

1/1 [==============================] - 0s 1ms/step - loss: 0.1154 - acc: 1.0000

Epoch 197/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1150 - acc: 1.0000

Epoch 198/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1145 - acc: 1.0000

Epoch 199/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1140 - acc: 1.0000

Epoch 200/200

1/1 [==============================] - 0s 2ms/step - loss: 0.1136 - acc: 1.0000

1/1 [==============================] - 0s 78ms/step - loss: 0.1131 - acc: 1.0000

[0.11312820017337799, 1.0]
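The `1/1` at the start of each log line shows that every epoch is a single gradient step: with only four samples, the whole dataset fits in one batch. As a rough sketch of what such a training loop does, the following NumPy code runs full-batch gradient descent on the same OR data with an MSE loss. The learning rate of 0.1, the 2000 steps, the zero initialization and the 0.5 decision threshold are assumptions chosen for illustration, not values taken from the Keras run above.

```python
import numpy as np

data = np.array([[0, 0], [1, 0], [0, 1], [1, 1]], dtype=float)
label = np.array([0, 1, 1, 1], dtype=float)

w = np.zeros(2)   # one weight per input (assumed zero start)
b = 0.0           # bias
lr = 0.1          # assumed learning rate for this sketch

for _ in range(2000):
    pred = data @ w + b                       # linear activation: output = w.x + b
    error = pred - label
    grad_w = 2 * data.T @ error / len(label)  # d(MSE)/dw
    grad_b = 2 * error.mean()                 # d(MSE)/db
    w -= lr * grad_w
    b -= lr * grad_b

pred = data @ w + b
loss = np.mean((pred - label) ** 2)
# Treat outputs above 0.5 as class 1 (assumed threshold).
accuracy = np.mean((pred > 0.5) == (label > 0.5))
print(w, b, loss, accuracy)
```

Under these assumptions the weights settle near 0.5 and 0.5 with a bias near 0.25, so the outputs are roughly 0.25, 0.75, 0.75 and 1.25. They are not exact 0/1 values, but each one falls on the correct side of 0.5.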

AND gate with TensorFlow

import tensorflow as tf

import numpy as np

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import SGD

from tensorflow.keras.losses import mse

tf.random.set_seed(777)

# Data

data = np.array([[0,0],[1,0],[0,1],[1,1]])

# Labels

label = np.array([[0],[0],[0],[1]]) # AND truth table

model = Sequential()

model.add(Dense(1, input_shape = (2,), activation = 'linear')) # perceptron

model.compile(optimizer = SGD(), loss = mse, metrics = ['acc'])

model.fit(data, label, epochs = 2000, verbose = 0)

model.get_weights()

model.predict(data).flatten()

model.evaluate(data, label) # evaluation: returns the loss and the accuracy

1/1 [==============================] - 0s 53ms/step - loss: 0.0625 - acc: 1.0000

[0.06250044703483582, 1.0]
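The final loss of about 0.0625 is not arbitrary: it appears to be the smallest MSE that a single linear unit can reach on the AND labels. A minimal check, assuming NumPy's `np.linalg.lstsq` as a stand-in for the trained model (it solves for the best weights directly instead of running SGD):

```python
import numpy as np

data = np.array([[0, 0], [1, 0], [0, 1], [1, 1]], dtype=float)
label = np.array([0, 0, 0, 1], dtype=float)

# Append a column of ones so the last coefficient plays the role of the bias.
design = np.hstack([data, np.ones((4, 1))])
coef, *_ = np.linalg.lstsq(design, label, rcond=None)

pred = design @ coef
min_loss = np.mean((pred - label) ** 2)
print(coef, pred, min_loss)
```

The best linear fit outputs about -0.25, 0.25, 0.25 and 0.75, so every prediction misses its label by 0.25. That gives an MSE of 0.0625 while still placing each output on the correct side of 0.5.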

from tensorflow import keras

import numpy

x = numpy.array([0,1,2,3,4])

y = x*2+1 # [1,3,5,7,9]

model = keras.models.Sequential()

# Build a single layer

model.add(keras.layers.Dense(1, input_shape=(1,)))

# Loss function: mse; the activation function defaults to linear

model.compile('SGD','mse')

# verbose = 0 hides the training log

model.fit(x[:2], y[:2], epochs=1000, verbose=0)

model.get_weights()

# [array([[1.9739313]], dtype=float32) (weight), array([1.0161117], dtype=float32) (bias)]

# Which is it closer to, 0 or 1?

# Predictions for the remaining values

model.predict(x[2:])

array([[5.000806],

[7.001389],

[9.001972]], dtype=float32)

model.predict([5])

# array([[11.002556]], dtype=float32)
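Because the model is fit on only the first two points, (0, 1) and (1, 3), and two points determine a line, the values the weights should converge toward can be computed directly. A small sketch using `numpy.polyfit` as a closed-form substitute for the SGD fit (the substitution is a choice made for this illustration):

```python
import numpy

x = numpy.array([0, 1, 2, 3, 4])
y = x * 2 + 1  # [1, 3, 5, 7, 9]

# Fit a degree-1 polynomial (a line) through the first two points only.
slope, intercept = numpy.polyfit(x[:2], y[:2], 1)

# Evaluate the line at the inputs the Keras model was asked to predict.
expected = slope * numpy.array([2, 3, 4, 5]) + intercept
print(slope, intercept, expected)
```

The exact line is y = 2x + 1, so the targets are 5, 7, 9 and 11. The Keras predictions above land within about 0.003 of these values.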

# Vector dot product (matrix multiplication)

import tensorflow as tf

from tensorflow import keras

import numpy

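# Random number generator: 10 rows by 5 columns of floating-point values

# The values are drawn from a uniform distribution

x = tf.random.uniform((10,5)) # each row of x ...

w = tf.random.uniform((5,3)) # ... is multiplied with each column of w

# Matrix multiplication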

d = tf.matmul(x, w)

print(d.shape)

# (10, 3)

x

<tf.Tensor: shape=(10, 5), dtype=float32, numpy=

array([[0.1357429 , 0.07509017, 0.2639438 , 0.47604764, 0.39591897],

[0.14548802, 0.17393434, 0.00936472, 0.8090905 , 0.617025 ],

[0.8713819 , 0.558359 , 0.17226672, 0.50340676, 0.18701088],

[0.9073597 , 0.717615 , 0.38108468, 0.8958354 , 0.59624827],

[0.77847326, 0.4488796 , 0.14225698, 0.8686327 , 0.03972971],

[0.3629743 , 0.55276537, 0.3255931 , 0.5238236 , 0.05080891],

[0.01347697, 0.3558432 , 0.77311885, 0.48737752, 0.5625943 ],

[0.02250803, 0.8551339 , 0.36489332, 0.5632981 , 0.09144831],

[0.25097954, 0.5333061 , 0.426386 , 0.19805324, 0.28281295],

[0.99601805, 0.4646746 , 0.0783782 , 0.66289246, 0.17973018]],

dtype=float32)>
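To make the shape rule concrete: each entry `d[i, j]` is the dot product of row `i` of `x` with column `j` of `w`, which is why the inner dimensions (5 and 5) must match and the result has shape (10, 3). A NumPy sketch with its own random values (the seed and the use of NumPy instead of `tf.random.uniform` are choices made for this illustration):

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.uniform(size=(10, 5))
w = rng.uniform(size=(5, 3))

# Matrix product computed by the library.
d = x @ w

# The same product built entry by entry from row/column dot products.
manual = np.zeros((10, 3))
for i in range(10):
    for j in range(3):
        manual[i, j] = np.dot(x[i], w[:, j])

print(d.shape, np.allclose(d, manual))
```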

# Cannot be solved with a single linear layer

# XOR gate with TensorFlow

import tensorflow as tf

import numpy as np

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import SGD

from tensorflow.keras.losses import mse

tf.random.set_seed(777)

# Data

data = np.array([[0,0],[1,0],[0,1],[1,1]])

# Labels

label = np.array([[0],[1],[1],[0]])

model = Sequential()

model.add(Dense(1, input_shape = (2,), activation = 'linear')) # perceptron

model.compile(optimizer = SGD(), loss = mse, metrics = ['acc'])

model.fit(data, label, epochs = 2000, verbose = 0)

model.get_weights()

model.predict(data).flatten()

model.evaluate(data, label) # evaluation: returns the loss and the accuracy

# 1/1 [==============================] - 0s 52ms/step - loss: 0.2500 - acc: 0.5000

# loss 0.25, accuracy 0.5 => the linear model fails
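A loss of 0.25 is what the best possible linear fit gives on XOR, so training for more epochs would likely not help. A quick check, using `np.linalg.lstsq` as a stand-in for training (an assumption of this sketch, not what the Keras code does):

```python
import numpy as np

data = np.array([[0, 0], [1, 0], [0, 1], [1, 1]], dtype=float)
label = np.array([0, 1, 1, 0], dtype=float)

# Append a column of ones so the last coefficient plays the role of the bias.
design = np.hstack([data, np.ones((4, 1))])
coef, *_ = np.linalg.lstsq(design, label, rcond=None)

pred = design @ coef
min_loss = np.mean((pred - label) ** 2)
print(np.round(coef, 6), np.round(pred, 6), min_loss)
```

The optimal weights are both zero with a bias of 0.5, so the model outputs 0.5 for every input and carries no information about it. This is consistent with an accuracy no better than chance.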

1/1 [==============================] - 0s 52ms/step - loss: 0.2500 - acc: 0.5000

[0.25, 0.5]

XOR gate with TensorFlow

import tensorflow as tf

import numpy as np

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import RMSprop, SGD

from tensorflow.keras.losses import mse

tf.random.set_seed(777)

# Data

data = np.array([[0,0],[1,0],[0,1],[1,1]])

# Labels

label = np.array([[0],[1],[1],[0]])

model = Sequential()

# Two layers

# 32 units, relu activation

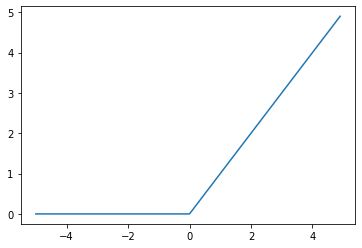

# relu: negative inputs become 0, positive inputs pass through unchanged

model.add(Dense(32, input_shape = (2,), activation = 'relu')) # hidden layer of 32 perceptrons

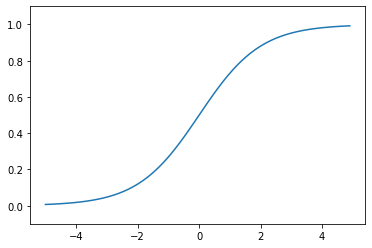

# sigmoid returns a value between 0 and 1.0

model.add(Dense(1, activation = 'sigmoid')) # output perceptron

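# optimizer: the method used to search for the optimum

# RMSprop: an improvement on the Adagrad algorithm; it refers to previous gradient values to choose the step size

# Adagrad: large steps on the first approach, smaller steps where it has already been

# so after many updates the learning rate can shrink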

# model.compile(optimizer = RMSprop(), loss = mse, metrics = ['acc'])

model.compile(optimizer = SGD(), loss = mse, metrics = ['acc'])

model.fit(data, label, epochs = 100)

model.get_weights()

predict = model.predict(data).flatten()

model.evaluate(data, label) # evaluation: returns the loss and the accuracy

print(predict)

# 1/1 [==============================] - 0s 56ms/step - loss: 0.2106 - acc: 1.0000

# [0.48657197 0.54643464 0.55219495 0.44657207]
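The comments on Adagrad and RMSprop in the code above can be illustrated with a toy calculation. The sketch below feeds a constant gradient of 1.0 to both accumulator rules and records the effective step size `lr / sqrt(acc)`. The learning rate of 0.1, the decay of 0.9 and the epsilon are illustrative assumptions; the code follows the textbook update rules and is not the Keras implementation.

```python
import numpy as np

def effective_steps(update_acc, grads, lr=0.1, eps=1e-7):
    """Return the per-step multiplier lr / (sqrt(acc) + eps) for each gradient."""
    acc = 0.0
    steps = []
    for g in grads:
        acc = update_acc(acc, g)
        steps.append(lr / (np.sqrt(acc) + eps))
    return np.array(steps)

grads = np.ones(100)  # constant gradient, purely for illustration

# Adagrad: the accumulator is a running sum of squared gradients, so it only grows.
adagrad_steps = effective_steps(lambda acc, g: acc + g ** 2, grads)

# RMSprop: the accumulator is an exponential moving average, so it levels off.
rmsprop_steps = effective_steps(lambda acc, g: 0.9 * acc + 0.1 * g ** 2, grads)

print(adagrad_steps[[0, 9, 99]])
print(rmsprop_steps[[0, 9, 99]])
```

With these inputs the Adagrad step keeps shrinking (it reaches a tenth of the base rate after 100 updates), while the RMSprop step settles close to the base learning rate. That is the sense in which RMSprop addresses Adagrad's decaying learning rate.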

Epoch 1/100

1/1 [==============================] - 0s 163ms/step - loss: 0.2646 - acc: 0.5000

Epoch 2/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2644 - acc: 0.2500

Epoch 3/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2643 - acc: 0.2500

Epoch 4/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2642 - acc: 0.2500

Epoch 5/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2640 - acc: 0.2500

Epoch 6/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2639 - acc: 0.2500

Epoch 7/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2638 - acc: 0.2500

Epoch 8/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2637 - acc: 0.2500

Epoch 9/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2635 - acc: 0.2500

Epoch 10/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2634 - acc: 0.2500

Epoch 11/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2633 - acc: 0.2500

Epoch 12/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2632 - acc: 0.2500

Epoch 13/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2630 - acc: 0.2500

Epoch 14/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2629 - acc: 0.2500

Epoch 15/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2628 - acc: 0.2500

Epoch 16/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2627 - acc: 0.2500

Epoch 17/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2625 - acc: 0.2500

Epoch 18/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2624 - acc: 0.2500

Epoch 19/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2623 - acc: 0.2500

Epoch 20/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2622 - acc: 0.2500

Epoch 21/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2620 - acc: 0.2500

Epoch 22/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2619 - acc: 0.2500

Epoch 23/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2618 - acc: 0.2500

Epoch 24/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2617 - acc: 0.2500

Epoch 25/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2616 - acc: 0.2500

Epoch 26/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2614 - acc: 0.2500

Epoch 27/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2613 - acc: 0.2500

Epoch 28/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2612 - acc: 0.2500

Epoch 29/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2611 - acc: 0.2500

Epoch 30/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2610 - acc: 0.2500

Epoch 31/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2608 - acc: 0.2500

Epoch 32/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2607 - acc: 0.2500

Epoch 33/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2606 - acc: 0.2500

Epoch 34/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2605 - acc: 0.2500

Epoch 35/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2604 - acc: 0.2500

Epoch 36/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2602 - acc: 0.2500

Epoch 37/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2601 - acc: 0.2500

Epoch 38/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2600 - acc: 0.2500

Epoch 39/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2599 - acc: 0.2500

Epoch 40/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2598 - acc: 0.2500

Epoch 41/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2597 - acc: 0.2500

Epoch 42/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2596 - acc: 0.2500

Epoch 43/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2594 - acc: 0.2500

Epoch 44/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2593 - acc: 0.2500

Epoch 45/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2592 - acc: 0.2500

Epoch 46/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2591 - acc: 0.2500

Epoch 47/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2590 - acc: 0.2500

Epoch 48/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2589 - acc: 0.2500

Epoch 49/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2588 - acc: 0.2500

Epoch 50/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2586 - acc: 0.2500

Epoch 51/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2585 - acc: 0.2500

Epoch 52/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2584 - acc: 0.2500

Epoch 53/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2583 - acc: 0.2500

Epoch 54/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2582 - acc: 0.2500

Epoch 55/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2581 - acc: 0.2500

Epoch 56/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2580 - acc: 0.2500

Epoch 57/100

1/1 [==============================] - 0s 5ms/step - loss: 0.2579 - acc: 0.2500

Epoch 58/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2578 - acc: 0.2500

Epoch 59/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2576 - acc: 0.2500

Epoch 60/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2575 - acc: 0.2500

Epoch 61/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2574 - acc: 0.2500

Epoch 62/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2573 - acc: 0.2500

Epoch 63/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2572 - acc: 0.2500

Epoch 64/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2571 - acc: 0.2500

Epoch 65/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2570 - acc: 0.2500

Epoch 66/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2569 - acc: 0.2500

Epoch 67/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2568 - acc: 0.2500

Epoch 68/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2567 - acc: 0.2500

Epoch 69/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2566 - acc: 0.2500

Epoch 70/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2565 - acc: 0.2500

Epoch 71/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2563 - acc: 0.2500

Epoch 72/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2562 - acc: 0.2500

Epoch 73/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2561 - acc: 0.2500

Epoch 74/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2560 - acc: 0.2500

Epoch 75/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2559 - acc: 0.2500

Epoch 76/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2558 - acc: 0.2500

Epoch 77/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2557 - acc: 0.2500

Epoch 78/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2556 - acc: 0.2500

Epoch 79/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2555 - acc: 0.2500

Epoch 80/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2554 - acc: 0.2500

Epoch 81/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2553 - acc: 0.2500

Epoch 82/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2552 - acc: 0.2500

Epoch 83/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2551 - acc: 0.2500

Epoch 84/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2550 - acc: 0.2500

Epoch 85/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2549 - acc: 0.2500

Epoch 86/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2548 - acc: 0.2500

Epoch 87/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2547 - acc: 0.2500

Epoch 88/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2546 - acc: 0.2500

Epoch 89/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2545 - acc: 0.2500

Epoch 90/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2544 - acc: 0.2500

Epoch 91/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2543 - acc: 0.2500

Epoch 92/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2542 - acc: 0.2500

Epoch 93/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2541 - acc: 0.2500

Epoch 94/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2540 - acc: 0.2500

Epoch 95/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2539 - acc: 0.2500

Epoch 96/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2538 - acc: 0.2500

Epoch 97/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2537 - acc: 0.2500

Epoch 98/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2536 - acc: 0.2500

Epoch 99/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2535 - acc: 0.2500

Epoch 100/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2534 - acc: 0.2500

WARNING:tensorflow:8 out of the last 9 calls to <function Model.make_predict_function.<locals>.predict_function at 0x0000026102109700> triggered tf.function retracing. Tracing is expensive and the excessive number of tracings could be due to (1) creating @tf.function repeatedly in a loop, (2) passing tensors with different shapes, (3) passing Python objects instead of tensors. For (1), please define your @tf.function outside of the loop. For (2), @tf.function has experimental_relax_shapes=True option that relaxes argument shapes that can avoid unnecessary retracing. For (3), please refer to https://www.tensorflow.org/guide/function#controlling_retracing and https://www.tensorflow.org/api_docs/python/tf/function for more details.

WARNING:tensorflow:7 out of the last 7 calls to <function Model.make_test_function.<locals>.test_function at 0x0000026102109B80> triggered tf.function retracing. Tracing is expensive and the excessive number of tracings could be due to (1) creating @tf.function repeatedly in a loop, (2) passing tensors with different shapes, (3) passing Python objects instead of tensors. For (1), please define your @tf.function outside of the loop. For (2), @tf.function has experimental_relax_shapes=True option that relaxes argument shapes that can avoid unnecessary retracing. For (3), please refer to https://www.tensorflow.org/guide/function#controlling_retracing and https://www.tensorflow.org/api_docs/python/tf/function for more details.

1/1 [==============================] - 0s 56ms/step - loss: 0.2533 - acc: 0.2500

[0.50530165 0.44862053 0.49225846 0.442587 ]

import tensorflow as tf

import numpy as np

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense

from tensorflow.keras.optimizers import RMSprop, SGD

from tensorflow.keras.losses import mse

tf.random.set_seed(777)

# Input data: the four possible input pairs

data = np.array([[0,0],[1,0],[0,1],[1,1]])

# Labels: XOR truth table (1 only when exactly one input is 1)

label = np.array([[0],[1],[1],[0]])

model = Sequential()

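# Two layers: one hidden layer and one output layer

# Hidden layer: 32 units with ReLU activation

# Dense layers are stacked with model.add

# ReLU: negative inputs become 0, positive inputs pass through unchanged

model.add(Dense(32, input_shape = (2,), activation = 'relu')) # hidden layer of 32 perceptrons

# sigmoid returns a value between 0 and 1

model.add(Dense(1, activation = 'sigmoid')) # output perceptron

# optimizer: the method used to move toward the optimum

# RMSprop: refines the Adagrad algorithm by using recent gradient history to set the step size

# Adagrad: large steps at first, smaller steps for parameters that have already been updated a lot,

# so the learning rate can shrink when there are many updates

# model.compile(optimizer = RMSprop(), loss = mse, metrics = ['acc'])

model.compile(optimizer = SGD(), loss = mse, metrics = ['acc'])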

model.fit(data, label, epochs = 100)

model.get_weights()

predict = model.predict(data).flatten()

model.evaluate(data, label) # evaluation: returns the loss and accuracy

print(predict)

# 1/1 [==============================] - 0s 249ms/step - loss: 0.2533 - acc: 0.2500

# [0.50530165 0.44862053 0.49225846 0.442587 ]
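The comments in the listing describe ReLU and sigmoid only in words. As a rough illustration, here is a NumPy-only sketch of the same 2 → 32 → 1 forward pass. The helper names `relu`, `sigmoid`, and `forward` are hypothetical, and the weights are random placeholders rather than the values returned by `model.get_weights()`.

```python
import numpy as np

def relu(x):
    # Negative inputs become 0; positive inputs pass through unchanged.
    return np.maximum(0.0, x)

def sigmoid(x):
    # Squashes any real input into the range between 0 and 1.
    return 1.0 / (1.0 + np.exp(-x))

def forward(x, w1, b1, w2, b2):
    # Hidden layer (2 -> 32) with ReLU, then output layer (32 -> 1) with sigmoid.
    hidden = relu(x @ w1 + b1)
    return sigmoid(hidden @ w2 + b2)

data = np.array([[0, 0], [1, 0], [0, 1], [1, 1]], dtype=float)

# Hypothetical random weights, used only to show the shapes.
# These are NOT the trained weights from the listing.
rng = np.random.default_rng(0)
w1, b1 = rng.normal(size=(2, 32)), np.zeros(32)
w2, b2 = rng.normal(size=(32, 1)), np.zeros(1)

out = forward(data, w1, b1, w2, b2)
print(out.shape)  # one output per input row
```

With zero biases, the input `[0, 0]` always produces exactly 0.5 before any training, because every hidden unit outputs 0 and `sigmoid(0)` is 0.5.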

Epoch 1/100

1/1 [==============================] - 0s 161ms/step - loss: 0.2646 - acc: 0.5000

Epoch 2/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2644 - acc: 0.2500

Epoch 3/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2643 - acc: 0.2500

Epoch 4/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2642 - acc: 0.2500

Epoch 5/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2640 - acc: 0.2500

Epoch 6/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2639 - acc: 0.2500

Epoch 7/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2638 - acc: 0.2500

Epoch 8/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2637 - acc: 0.2500

Epoch 9/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2635 - acc: 0.2500

Epoch 10/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2634 - acc: 0.2500

Epoch 11/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2633 - acc: 0.2500

Epoch 12/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2632 - acc: 0.2500

Epoch 13/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2630 - acc: 0.2500

Epoch 14/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2629 - acc: 0.2500

Epoch 15/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2628 - acc: 0.2500

Epoch 16/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2627 - acc: 0.2500

Epoch 17/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2625 - acc: 0.2500

Epoch 18/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2624 - acc: 0.2500

Epoch 19/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2623 - acc: 0.2500

Epoch 20/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2622 - acc: 0.2500

Epoch 21/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2620 - acc: 0.2500

Epoch 22/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2619 - acc: 0.2500

Epoch 23/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2618 - acc: 0.2500

Epoch 24/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2617 - acc: 0.2500

Epoch 25/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2616 - acc: 0.2500

Epoch 26/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2614 - acc: 0.2500

Epoch 27/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2613 - acc: 0.2500

Epoch 28/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2612 - acc: 0.2500

Epoch 29/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2611 - acc: 0.2500

Epoch 30/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2610 - acc: 0.2500

Epoch 31/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2608 - acc: 0.2500

Epoch 32/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2607 - acc: 0.2500

Epoch 33/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2606 - acc: 0.2500

Epoch 34/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2605 - acc: 0.2500

Epoch 35/100

1/1 [==============================] - 0s 8ms/step - loss: 0.2604 - acc: 0.2500

Epoch 36/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2602 - acc: 0.2500

Epoch 37/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2601 - acc: 0.2500

Epoch 38/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2600 - acc: 0.2500

Epoch 39/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2599 - acc: 0.2500

Epoch 40/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2598 - acc: 0.2500

Epoch 41/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2597 - acc: 0.2500

Epoch 42/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2596 - acc: 0.2500

Epoch 43/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2594 - acc: 0.2500

Epoch 44/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2593 - acc: 0.2500

Epoch 45/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2592 - acc: 0.2500

Epoch 46/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2591 - acc: 0.2500

Epoch 47/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2590 - acc: 0.2500

Epoch 48/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2589 - acc: 0.2500

Epoch 49/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2588 - acc: 0.2500

Epoch 50/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2586 - acc: 0.2500

Epoch 51/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2585 - acc: 0.2500

Epoch 52/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2584 - acc: 0.2500

Epoch 53/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2583 - acc: 0.2500

Epoch 54/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2582 - acc: 0.2500

Epoch 55/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2581 - acc: 0.2500

Epoch 56/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2580 - acc: 0.2500

Epoch 57/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2579 - acc: 0.2500

Epoch 58/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2578 - acc: 0.2500

Epoch 59/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2576 - acc: 0.2500

Epoch 60/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2575 - acc: 0.2500

Epoch 61/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2574 - acc: 0.2500

Epoch 62/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2573 - acc: 0.2500

Epoch 63/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2572 - acc: 0.2500

Epoch 64/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2571 - acc: 0.2500

Epoch 65/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2570 - acc: 0.2500

Epoch 66/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2569 - acc: 0.2500

Epoch 67/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2568 - acc: 0.2500

Epoch 68/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2567 - acc: 0.2500

Epoch 69/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2566 - acc: 0.2500

Epoch 70/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2565 - acc: 0.2500

Epoch 71/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2563 - acc: 0.2500

Epoch 72/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2562 - acc: 0.2500

Epoch 73/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2561 - acc: 0.2500

Epoch 74/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2560 - acc: 0.2500

Epoch 75/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2559 - acc: 0.2500

Epoch 76/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2558 - acc: 0.2500

Epoch 77/100

1/1 [==============================] - 0s 4ms/step - loss: 0.2557 - acc: 0.2500

Epoch 78/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2556 - acc: 0.2500

Epoch 79/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2555 - acc: 0.2500

Epoch 80/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2554 - acc: 0.2500

Epoch 81/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2553 - acc: 0.2500

Epoch 82/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2552 - acc: 0.2500

Epoch 83/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2551 - acc: 0.2500

Epoch 84/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2550 - acc: 0.2500

Epoch 85/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2549 - acc: 0.2500

Epoch 86/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2548 - acc: 0.2500

Epoch 87/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2547 - acc: 0.2500

Epoch 88/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2546 - acc: 0.2500

Epoch 89/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2545 - acc: 0.2500

Epoch 90/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2544 - acc: 0.2500

Epoch 91/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2543 - acc: 0.2500

Epoch 92/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2542 - acc: 0.2500

Epoch 93/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2541 - acc: 0.2500

Epoch 94/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2540 - acc: 0.2500

Epoch 95/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2539 - acc: 0.2500

Epoch 96/100

1/1 [==============================] - 0s 3ms/step - loss: 0.2538 - acc: 0.2500

Epoch 97/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2537 - acc: 0.2500

Epoch 98/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2536 - acc: 0.2500

Epoch 99/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2535 - acc: 0.2500

Epoch 100/100

1/1 [==============================] - 0s 2ms/step - loss: 0.2534 - acc: 0.2500

WARNING:tensorflow:9 out of the last 10 calls to <function Model.make_predict_function.<locals>.predict_function at 0x000002610365D0D0> triggered tf.function retracing. Tracing is expensive and the excessive number of tracings could be due to (1) creating @tf.function repeatedly in a loop, (2) passing tensors with different shapes, (3) passing Python objects instead of tensors. For (1), please define your @tf.function outside of the loop. For (2), @tf.function has experimental_relax_shapes=True option that relaxes argument shapes that can avoid unnecessary retracing. For (3), please refer to https://www.tensorflow.org/guide/function#controlling_retracing and https://www.tensorflow.org/api_docs/python/tf/function for more details.

WARNING:tensorflow:8 out of the last 8 calls to <function Model.make_test_function.<locals>.test_function at 0x000002610365DEE0> triggered tf.function retracing. Tracing is expensive and the excessive number of tracings could be due to (1) creating @tf.function repeatedly in a loop, (2) passing tensors with different shapes, (3) passing Python objects instead of tensors. For (1), please define your @tf.function outside of the loop. For (2), @tf.function has experimental_relax_shapes=True option that relaxes argument shapes that can avoid unnecessary retracing. For (3), please refer to https://www.tensorflow.org/guide/function#controlling_retracing and https://www.tensorflow.org/api_docs/python/tf/function for more details.

1/1 [==============================] - 0s 249ms/step - loss: 0.2533 - acc: 0.2500

[0.50530165 0.44862053 0.49225846 0.442587 ]
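The reported loss and accuracy can be checked by hand from the four printed predictions. The sketch below uses only NumPy and assumes that `'acc'` resolves to binary accuracy with a 0.5 threshold for a single sigmoid output, which is an assumption about Keras behaviour rather than something the log states.

```python
import numpy as np

# Values copied from the printed predictions and the XOR labels in the listing.
predict = np.array([0.50530165, 0.44862053, 0.49225846, 0.442587])
label = np.array([0, 1, 1, 0])

# Mean squared error, the loss passed to model.compile.
mse_value = np.mean((predict - label) ** 2)

# Assumption: accuracy is computed by thresholding each prediction at 0.5.
predicted_class = (predict > 0.5).astype(int)
accuracy = np.mean(predicted_class == label)

print(round(float(mse_value), 4))  # 0.2533
print(predicted_class)             # [1 0 0 0]
print(accuracy)                    # 0.25
```

Only the last input, `[1, 1]`, is classified correctly, which gives the 0.25 accuracy. All four predictions sit close to 0.5, and the loss moved only from 0.2646 to 0.2534 over the 100 epochs, so the network has barely started to separate the XOR classes with this SGD setup.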

'Data_Science > Data_Analysis_Py' 카테고리의 다른 글

| 42. Fashion-MNIST 딥러닝 예측 (0) | 2021.11.25 |

|---|---|

| 41. MNIST 딥러닝 예측 (0) | 2021.11.25 |

| 39. Tensorflow 구현 (0) | 2021.11.25 |

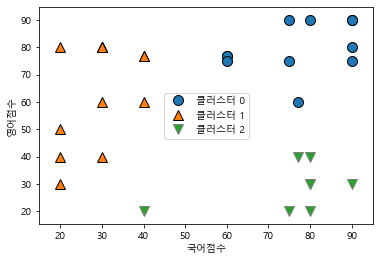

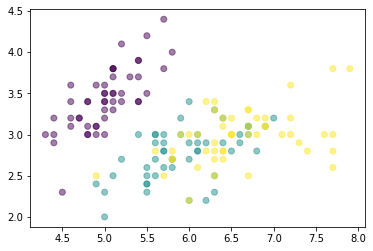

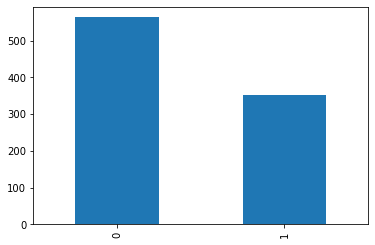

| 38. 학생 점수 분석 || Kmeans (0) | 2021.11.25 |

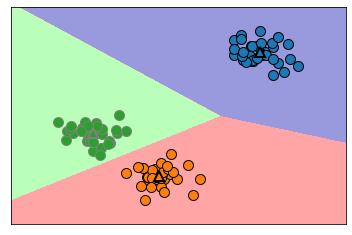

| 37. iris || Kmeans (0) | 2021.11.25 |